Unsupervised Discovery of Object Landmarks via Contrastive Learning

Jun 26, 2020

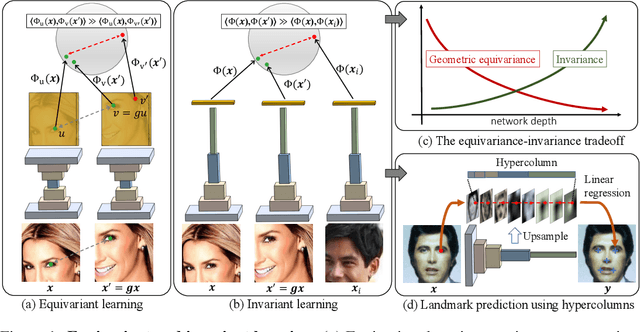

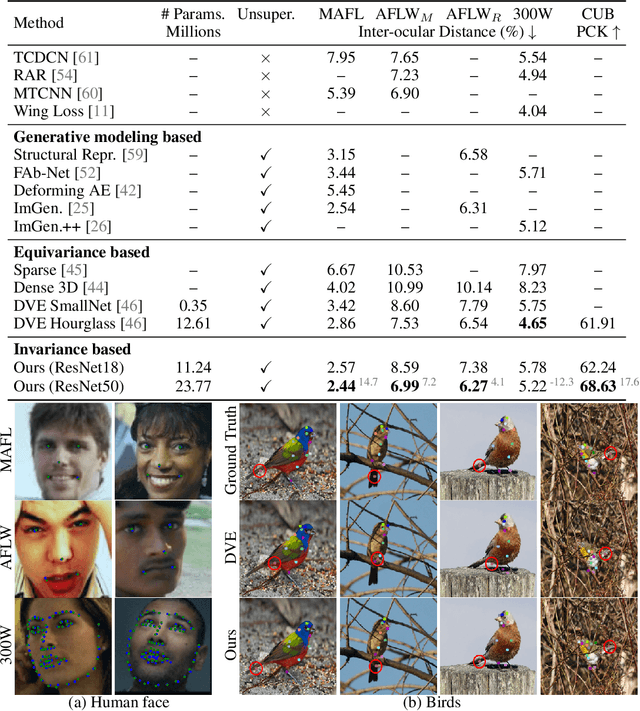

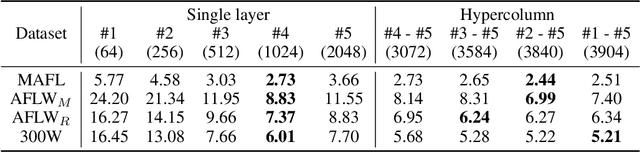

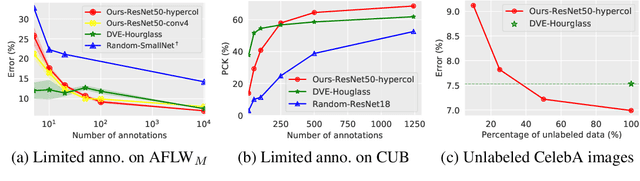

Given a collection of images, humans are able to discover landmarks of the depicted objects by modeling the shared geometric structure across instances. This idea of geometric equivariance has been widely used for unsupervised discovery of object landmark representations. In this paper, we develop a simple and effective approach based on contrastive learning of invariant representations. We show that when a deep network is trained to be invariant to geometric and photometric transformations, representations from its intermediate layers are highly predictive of object landmarks. Furthermore, stacking representations across layers into a hypercolumn improves their effectiveness. Our approach is motivated by the gradual emergence of invariance in the representation hierarchy of a deep network. We also present a unified view of existing equivariant and invariant representation learning approaches through the lens of contrastive learning, shedding light on the nature of the invariances learned. Experiments on standard benchmarks for landmark discovery, as well as on a challenging one we propose, show that the proposed approach surpasses the prior state of the art.
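The two ingredients named in the abstract, a contrastive objective over transformed views and a hypercolumn built from several layers, can be illustrated with a minimal sketch. The abstract does not specify the exact loss or architecture, so everything below is an assumption: the InfoNCE form of the loss, the temperature value, nearest-neighbour upsampling, and the function names `info_nce`, `upsample_nearest`, and `hypercolumn` are all hypothetical choices made for illustration, not the authors' implementation.

```python
import math


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor embedding (assumed loss form).

    `positive` stands for the embedding of a transformed view of the same
    image; `negatives` are embeddings of other images. Vectors are plain
    lists of floats and must be non-zero.
    """
    def cosine(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
        return dot / norm

    # The positive pair sits at index 0, followed by the negatives.
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]

    # Numerically stable log-sum-exp, then negative log-softmax of index 0.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[0]


def upsample_nearest(fmap, size):
    """Resize an H x W x C nested-list feature map to size x size x C."""
    h, w = len(fmap), len(fmap[0])
    return [[fmap[i * h // size][j * w // size] for j in range(size)]
            for i in range(size)]


def hypercolumn(feature_maps, size):
    """Concatenate per-location features from several layers.

    Each map is brought to a common spatial resolution, then the channel
    vectors at every location are joined into one longer vector.
    """
    resized = [upsample_nearest(f, size) for f in feature_maps]
    return [[sum((r[i][j] for r in resized), []) for j in range(size)]
            for i in range(size)]


# Toy usage: a 2x2 map with 1 channel and a 1x1 map with 2 channels.
shallow = [[[1.0], [2.0]], [[3.0], [4.0]]]
deep = [[[9.0, 8.0]]]
hc = hypercolumn([shallow, deep], size=2)

aligned = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

In this sketch the loss is small when the two views of an image map to nearby embeddings and large when a negative is closer than the positive, which is the pressure toward invariance that the abstract describes. The hypercolumn at each location simply carries features from every chosen layer, so a downstream landmark predictor can draw on both less invariant (shallow) and more invariant (deep) representations.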
