Unsupervised Adversarial Image Inpainting

Dec 18, 2019

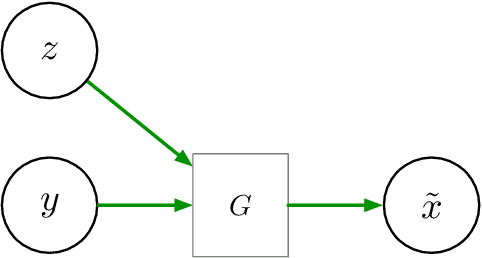

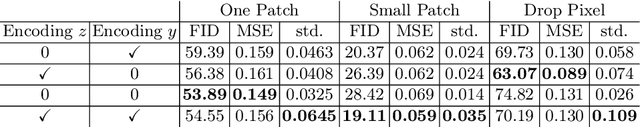

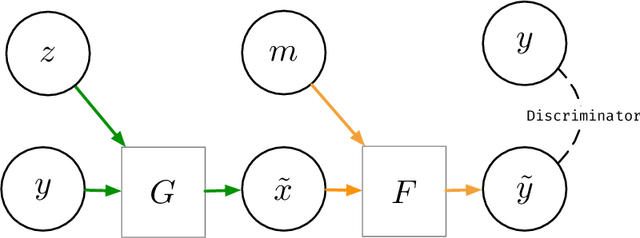

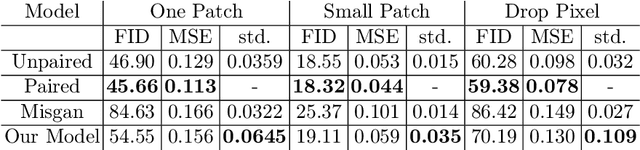

We consider inpainting in an unsupervised setting where there is access to neither paired nor unpaired training data. The only available information is provided by the incomplete observations and the statistics of the inpainting process. In this context, an observation should give rise to several plausible reconstructions, which amounts to learning a distribution over the space of reconstructed images. We model the reconstruction process using a conditional GAN with constraints on the stochastic component that introduce an explicit dependency between this component and the generated output. This allows us to sample from the latent component in order to generate a distribution of images associated with an observation. We demonstrate the capacity of our model on several image datasets: faces (CelebA), food images (Recipe-1M) and bedrooms (LSUN Bedrooms), with different types of imputation masks. The approach yields performance comparable to model variants trained with additional supervision.
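The idea of drawing several reconstructions for one incomplete observation can be illustrated with a small sketch. This is not the authors' model: `toy_generator` is a hypothetical stand-in for a trained conditional generator, and the choices to copy observed pixels through unchanged and to use a Gaussian latent variable are assumptions made only for illustration.

```python
import random

def apply_mask(image, mask):
    """Simulate an incomplete observation: keep pixels where mask == 1, zero the rest."""
    return [[p * m for p, m in zip(prow, mrow)] for prow, mrow in zip(image, mask)]

def toy_generator(observation, mask, z):
    """Hypothetical stand-in for a trained conditional generator G(y, z).

    Proposes a full image: every pixel is the mean of the observed pixels,
    shifted by the latent value z. A real model would be a neural network.
    """
    observed = [p for prow, mrow in zip(observation, mask)
                for p, m in zip(prow, mrow) if m == 1]
    mean = sum(observed) / len(observed) if observed else 0.0
    return [[mean + z for _ in row] for row in observation]

def reconstruct(observation, mask, z):
    """Keep observed pixels and fill only the missing ones (an assumption of this sketch)."""
    proposal = toy_generator(observation, mask, z)
    return [[m * y + (1 - m) * g for y, m, g in zip(yrow, mrow, grow)]
            for yrow, mrow, grow in zip(observation, mask, proposal)]

def sample_reconstructions(observation, mask, n, seed=0):
    """Draw n latent values z and return one reconstruction per draw."""
    rng = random.Random(seed)
    return [reconstruct(observation, mask, rng.gauss(0.0, 0.1)) for _ in range(n)]

image = [[0.2, 0.4], [0.6, 0.8]]
mask = [[1, 0], [1, 1]]          # 0 marks the missing pixel
observation = apply_mask(image, mask)
samples = sample_reconstructions(observation, mask, n=3)
```

Each element of `samples` agrees with the observation on the visible pixels and differs only in the filled-in region, which is the sense in which varying the latent component yields a distribution of reconstructions for a single observation.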