Unpaired High-Resolution and Scalable Style Transfer Using Generative Adversarial Networks

Oct 10, 2018

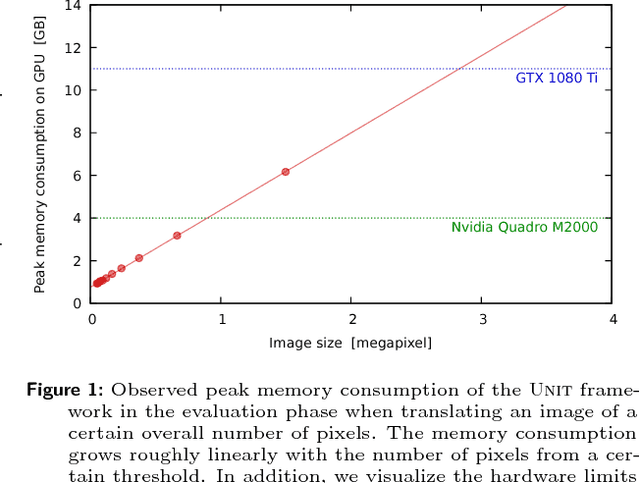

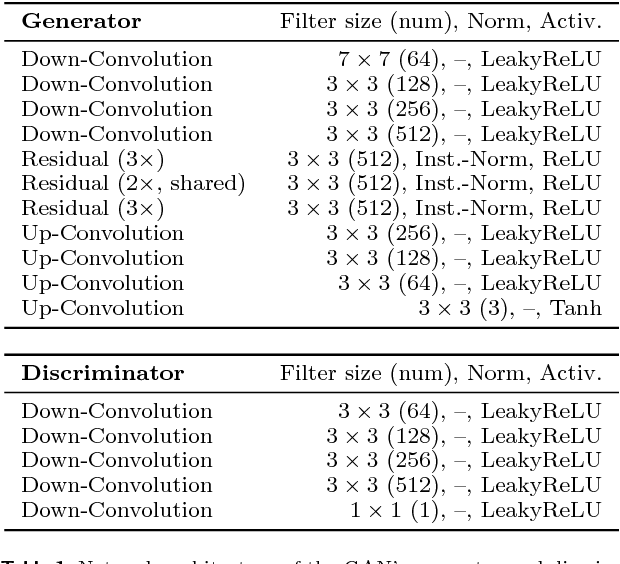

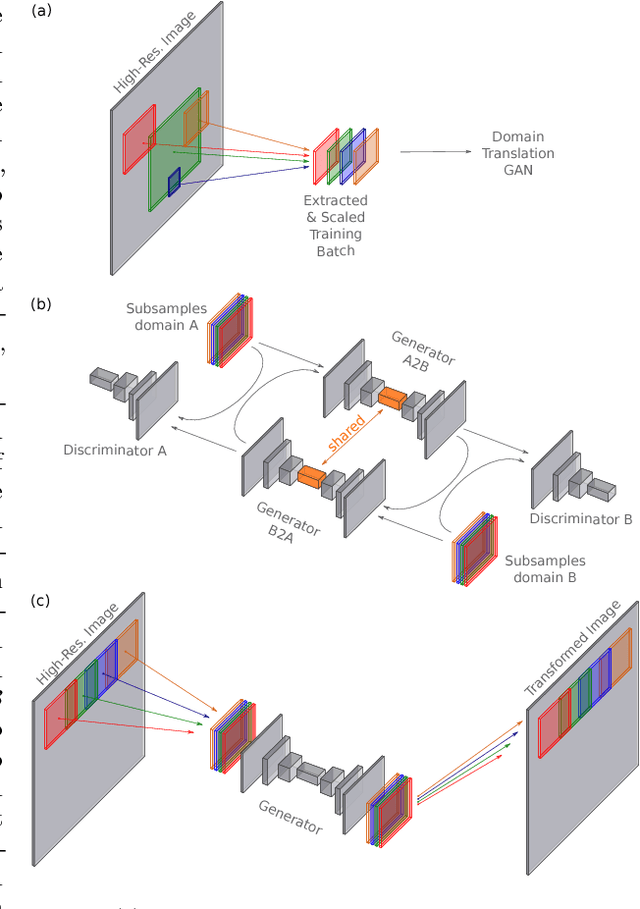

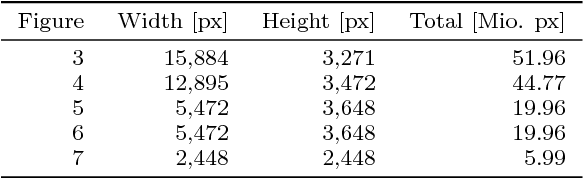

Neural networks have proven their capabilities by outperforming many other approaches on regression and classification tasks across various kinds of data. Further astonishing results have been achieved using neural networks as data generators, especially in the setting of generative adversarial networks (GANs). One special application is image domain translation. Here, the goal is to take an image with a certain style (e.g. a photograph) and transform it into another style (e.g. a painting). When this task is learned from unpaired training examples, the corresponding GAN setup is complex and the neural networks are large, which leads to high peak memory consumption during both training and evaluation. This limits the largest image size that can be processed. We address this issue by not processing the whole image at once, but instead training and evaluating the domain translation on overlapping image subsamples. This new approach not only enables us to translate high-resolution images that the neural network could not otherwise process at once, but also allows us to work with comparatively small neural networks and limited hardware resources. Additionally, the number of images required for training is significantly reduced. We present high-quality results on images with a total resolution of more than 50 megapixels and demonstrate that our method helps to preserve local image details while maintaining global consistency.
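To make the idea of overlapping image subsamples concrete, the following is a minimal sketch of tile-wise evaluation, not the authors' implementation. The names `tile_starts` and `translate_tiled`, the `tile` and `overlap` parameters, and the uniform averaging of overlapping regions are all assumptions made for illustration; the abstract does not state how the paper merges overlapping outputs. The `translate` argument stands in for a trained generator that maps a patch to a patch of the same shape.

```python
import numpy as np


def tile_starts(length, tile, stride):
    """Start offsets of tiles covering [0, length); the last tile sits flush with the edge."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)
    return starts


def translate_tiled(image, translate, tile=256, overlap=32):
    """Apply `translate` to overlapping tiles and average the results where tiles overlap.

    `translate` is a hypothetical stand-in for a trained generator: it takes a patch
    (H x W or H x W x C array) and returns an array of the same shape.
    Uniform averaging is an assumed blending rule, chosen for simplicity.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    h, w = image.shape[:2]
    stride = tile - overlap
    out = np.zeros(image.shape, dtype=np.float64)
    # Per-pixel count of how many tiles contributed; trailing axes broadcast over channels.
    weight = np.zeros((h, w) + (1,) * (image.ndim - 2), dtype=np.float64)
    for y in tile_starts(h, tile, stride):
        for x in tile_starts(w, tile, stride):
            patch = image[y:y + tile, x:x + tile]
            out[y:y + tile, x:x + tile] += translate(patch)
            weight[y:y + tile, x:x + tile] += 1.0
    return out / weight
```

Because only one tile passes through the network at a time, peak memory in this sketch depends on the tile size rather than on the full image resolution, which is the property the abstract relies on.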
