Unlocking Intrinsic Fairness in Stable Diffusion


Aug 22, 2024

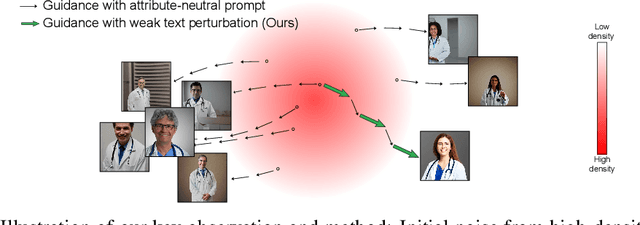

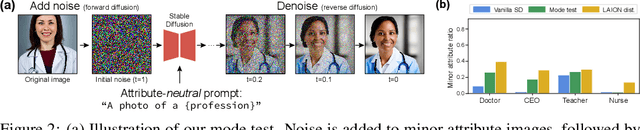

Recent text-to-image models such as Stable Diffusion produce photo-realistic images but often exhibit demographic biases. Previous debiasing methods have relied mainly on training-based approaches, which leave the root causes of bias unexplored and overlook Stable Diffusion's own potential for unbiased image generation. In this paper, we demonstrate that Stable Diffusion possesses an intrinsic fairness that can be unlocked to achieve debiased outputs. Through carefully designed experiments, we identify the excessive bonding between text prompts and the diffusion process as a key source of bias. To address this, we propose a novel approach that perturbs the text conditions to unleash Stable Diffusion's intrinsic fairness. Our method effectively mitigates bias without additional tuning, while preserving image-text alignment and image quality.
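To make the idea of "perturbing text conditions" concrete, the following is a minimal sketch, not the paper's actual procedure. It assumes the perturbation is i.i.d. Gaussian noise added to the text-encoder output before it conditions the denoiser. The function name `perturb_text_condition`, the noise scale `sigma`, and the choice of Gaussian noise are all illustrative assumptions; the abstract does not specify the form, strength, or timing of the perturbation. The array shape (77 tokens by 768 dimensions) matches the CLIP text encoder output used by Stable Diffusion v1.

```python
import numpy as np


def perturb_text_condition(text_emb, sigma, rng):
    """Return text_emb plus i.i.d. Gaussian noise with standard deviation sigma.

    Hypothetical helper: the Gaussian form and the `sigma` parameter are
    assumptions made for illustration, not details taken from the paper.
    """
    noise = rng.standard_normal(text_emb.shape).astype(text_emb.dtype)
    return text_emb + sigma * noise


# Stand-in for a prompt embedding (77 tokens x 768 dims, as in SD v1's CLIP encoder).
rng = np.random.default_rng(0)
text_emb = rng.standard_normal((77, 768)).astype(np.float32)

# Loosen the coupling between prompt and generation by jittering the condition.
perturbed = perturb_text_condition(text_emb, sigma=0.1, rng=rng)
print(perturbed.shape, perturbed.dtype)
```

In a real pipeline the perturbed array would replace the prompt embedding passed to the denoising network. Because only the conditioning input changes, a scheme of this kind needs no fine-tuning, which is consistent with the tuning-free claim above.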
