Unifying Neural Learning and Symbolic Reasoning for Spinal Medical Report Generation

Paper and Code

Apr 28, 2020

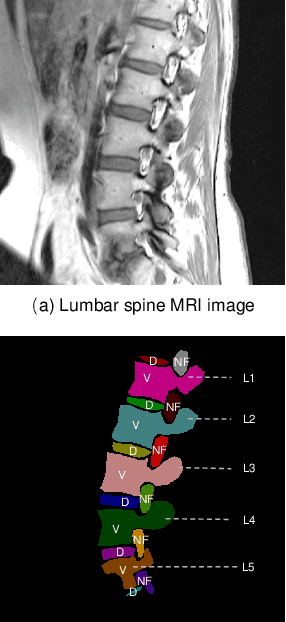

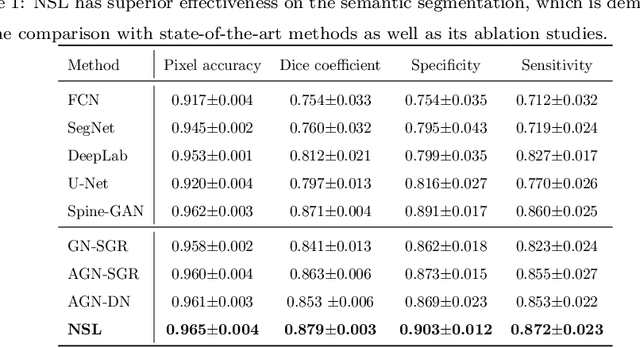

Automated medical report generation in spine radiology, i.e., given spinal medical images and directly create radiologist-level diagnosis reports to support clinical decision making, is a novel yet fundamental study in the domain of artificial intelligence in healthcare. However, it is incredibly challenging because it is an extremely complicated task that involves visual perception and high-level reasoning processes. In this paper, we propose the neural-symbolic learning (NSL) framework that performs human-like learning by unifying deep neural learning and symbolic logical reasoning for the spinal medical report generation. Generally speaking, the NSL framework firstly employs deep neural learning to imitate human visual perception for detecting abnormalities of target spinal structures. Concretely, we design an adversarial graph network that interpolates a symbolic graph reasoning module into a generative adversarial network through embedding prior domain knowledge, achieving semantic segmentation of spinal structures with high complexity and variability. NSL secondly conducts human-like symbolic logical reasoning that realizes unsupervised causal effect analysis of detected entities of abnormalities through meta-interpretive learning. NSL finally fills these discoveries of target diseases into a unified template, successfully achieving a comprehensive medical report generation. When it employed in a real-world clinical dataset, a series of empirical studies demonstrate its capacity on spinal medical report generation as well as show that our algorithm remarkably exceeds existing methods in the detection of spinal structures. These indicate its potential as a clinical tool that contributes to computer-aided diagnosis.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge