Underwater Intention Recognition using Head Motion and Throat Vibration for Supernumerary Robotic Assistance

Jun 08, 2023

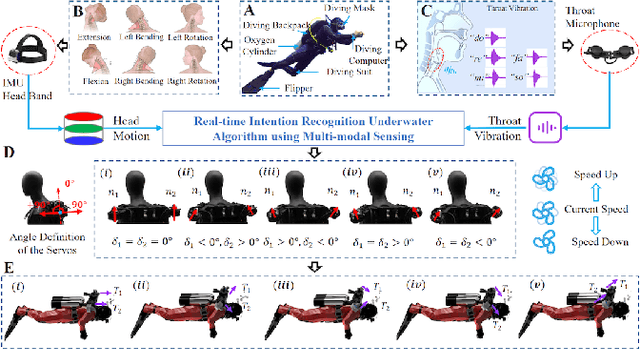

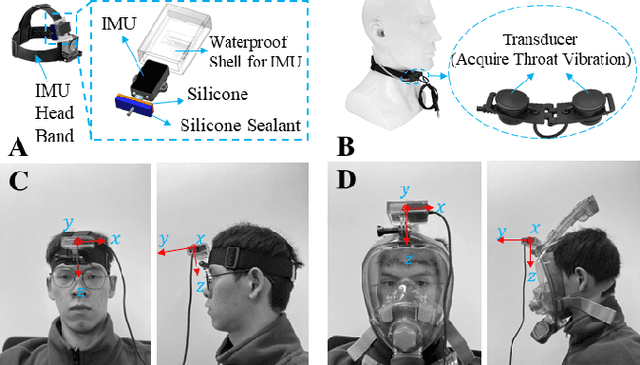

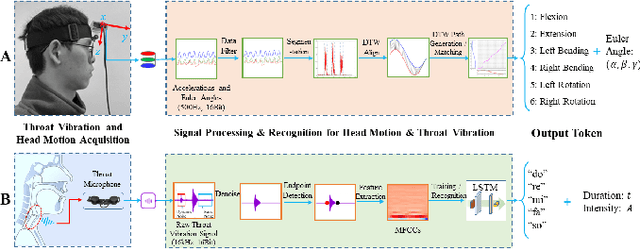

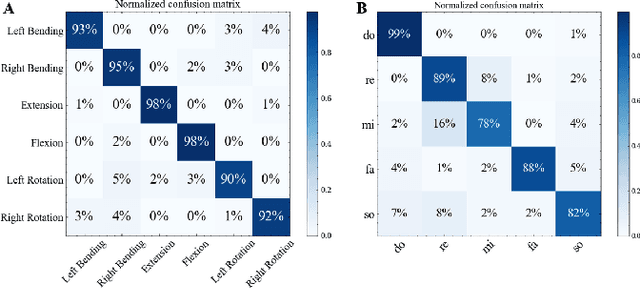

This study presents a multi-modal mechanism for recognizing human intentions while diving, aiming to achieve natural human-robot interaction through an underwater superlimb for diving assistance. The underwater environment severely limits divers' ability to express intentions, and the problem becomes harder when they need to operate tools while controlling body posture in 3D under the constraints of various diving suits and gear. The literature on underwater intention recognition is limited, which impedes the development of intelligent wearable systems for underwater human-robot interaction. Here, we present a novel solution that simultaneously detects head motion and throat vibrations underwater in a compact, wearable design. Experimental results show that, using machine learning algorithms, we achieved high performance in integrating these two modalities to translate human intentions into robot control commands for an underwater superlimb system. These results pave the way for future development in underwater intention recognition and underwater human-robot interaction with supernumerary support.
