Understanding the Usability Challenges of Machine Learning In High-Stakes Decision Making

Mar 02, 2021

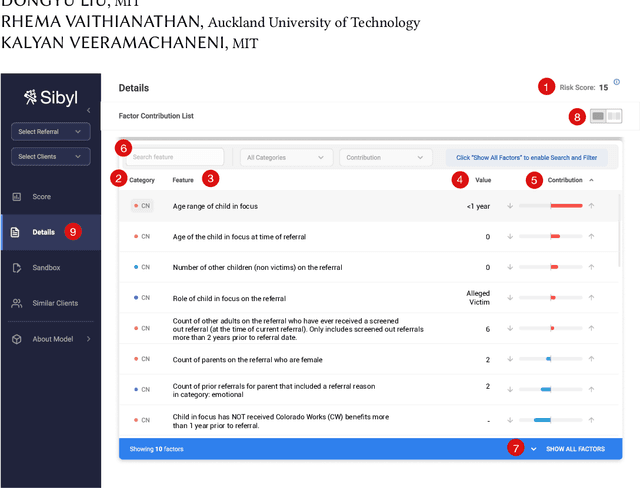

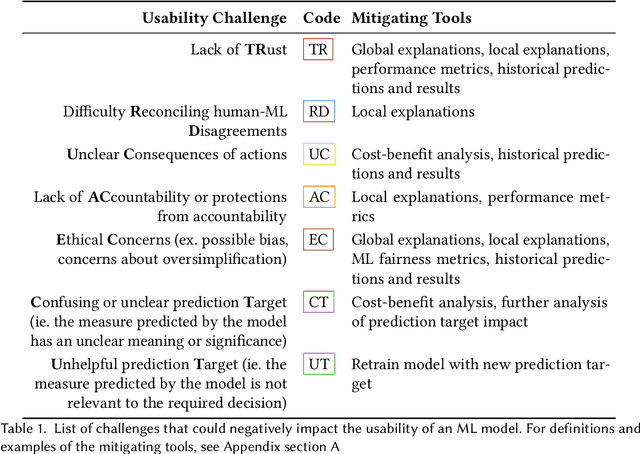

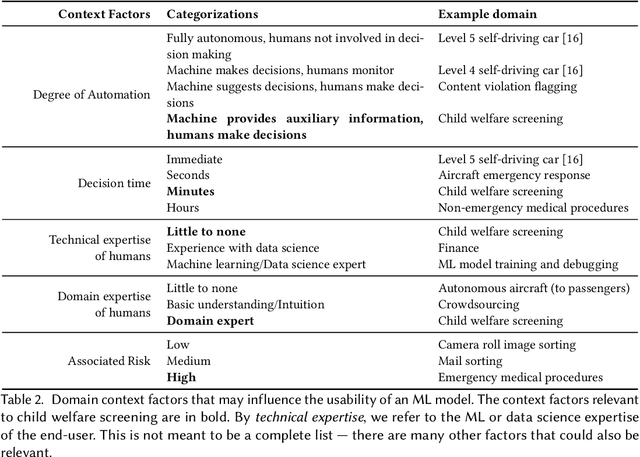

Machine learning (ML) is being applied to a diverse and ever-growing set of domains. In many cases, domain experts, who often have no expertise in ML or data science, are asked to use ML predictions to make high-stakes decisions. Multiple ML usability challenges can appear as a result, such as a lack of user trust in the model, an inability to reconcile human-ML disagreement, and ethical concerns about oversimplifying complex problems into a single algorithm output. In this paper, we investigate the ML usability challenges present in the domain of child welfare screening through a series of collaborations with child welfare screeners, which included field observations, interviews, and a formal user study. Through our collaborations, we identified four key ML challenges and homed in on one promising ML augmentation tool to address them (local factor contributions). We also composed a list of design considerations to be taken into account when developing future augmentation tools for child welfare screeners and similar domain experts.
