Understanding the impact of mistakes on background regions in crowd counting

Mar 30, 2020

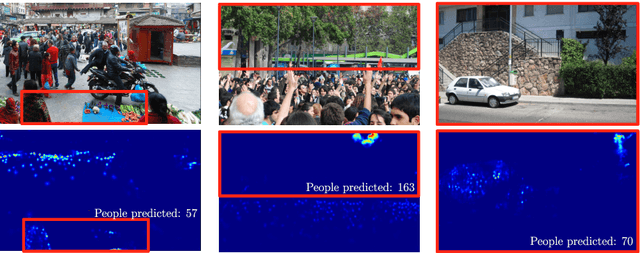

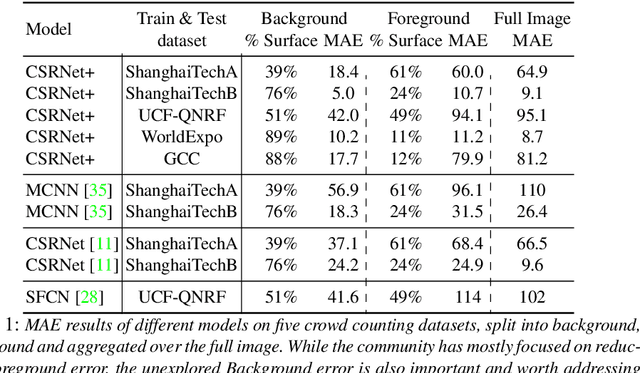

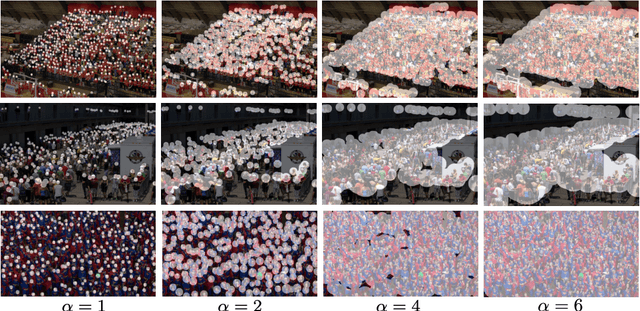

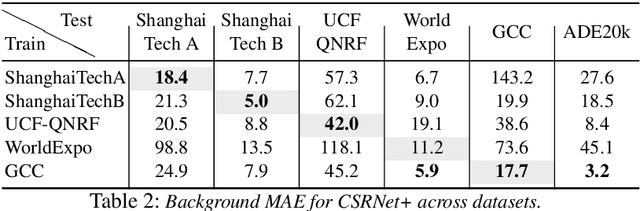

Every crowd counting researcher has likely seen their model output false positive predictions on image regions that contain no people. But how often do these mistakes happen? Are our models negatively affected by them? In this paper we analyze this problem in depth. To understand its magnitude, we present an extensive analysis on five of the most important crowd counting datasets, in two parts. First, we quantify the number of mistakes made by popular crowd counting approaches. Our results show that (i) mistakes on background are substantial and are responsible for 18-49% of the total error, (ii) models do not generalize well to different kinds of backgrounds and perform poorly on images that contain only background, and (iii) models make many more mistakes than those captured by the standard Mean Absolute Error (MAE) metric, because counts predicted on background compensate considerably for misses on foreground. Second, we quantify the performance gained by helping the model deal with this problem. We enrich a typical crowd counting network with a segmentation branch trained to suppress background predictions. This simple addition (i) reduces background error by 10-83%, (ii) reduces foreground error by up to 26%, and (iii) improves overall crowd counting performance by up to 20%. Compared against the literature, this simple technique achieves very competitive results on all datasets, on par with the state of the art, showing the importance of tackling the background problem.
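The compensation effect in point (iii) can be seen on a toy example. The sketch below is an illustration only, not the paper's evaluation code: the helper `split_errors`, the toy density maps, and the use of a ground-truth foreground mask are all assumptions made here. In particular, the paper trains a segmentation branch to predict the mask, whereas this sketch simply uses `gt > 0` as a stand-in.

```python
import numpy as np

def split_errors(pred, gt, fg_mask):
    """Return (overall, foreground, background) absolute count errors for one image.

    Hypothetical helper: counts are sums of density-map values, and the
    foreground/background split is defined by a boolean mask.
    """
    fg = fg_mask.astype(bool)
    overall = abs(pred.sum() - gt.sum())
    fg_err = abs(pred[fg].sum() - gt[fg].sum())
    bg_err = abs(pred[~fg].sum() - gt[~fg].sum())
    return overall, fg_err, bg_err

# Toy 2x4 density maps: four people on the left half, none on the right half.
gt = np.array([[1.0, 1.0, 0.0, 0.0],
               [1.0, 1.0, 0.0, 0.0]])
# The prediction misses two people on foreground and hallucinates two on background.
pred = np.array([[1.0, 0.0, 0.5, 0.5],
                 [1.0, 0.0, 0.5, 0.5]])
fg_mask = gt > 0  # assumption: ground-truth mask instead of a learned one

overall, fg_err, bg_err = split_errors(pred, gt, fg_mask)
print(overall, fg_err, bg_err)

# Suppressing background predictions with the mask removes the background
# error and exposes the foreground misses that the overall count was hiding.
suppressed = pred * fg_mask
s_overall, s_fg_err, s_bg_err = split_errors(suppressed, gt, fg_mask)
print(s_overall, s_fg_err, s_bg_err)
```

In this toy case the overall count error is zero even though the prediction is wrong by two people on foreground and two on background, which is why a per-region breakdown can reveal mistakes that the image-level MAE does not.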