Uncertainty as a Predictor: Leveraging Self-Supervised Learning for Zero-Shot MOS Prediction

Paper and Code

Dec 25, 2023

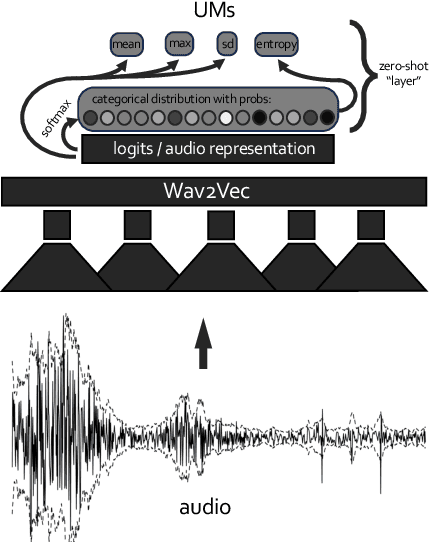

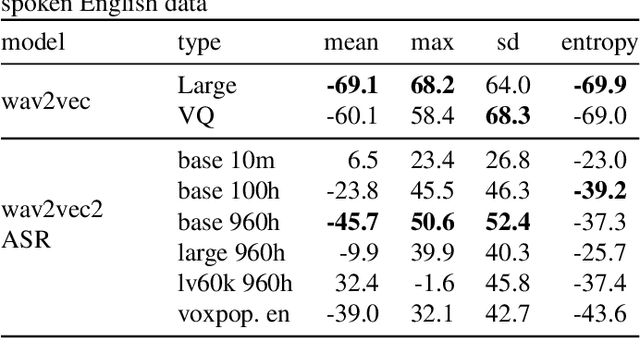

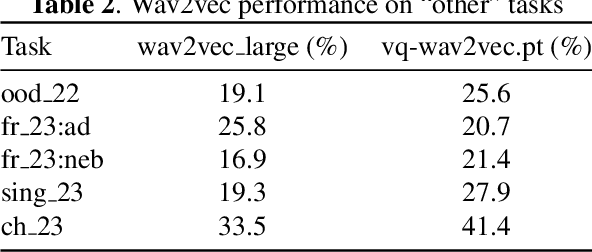

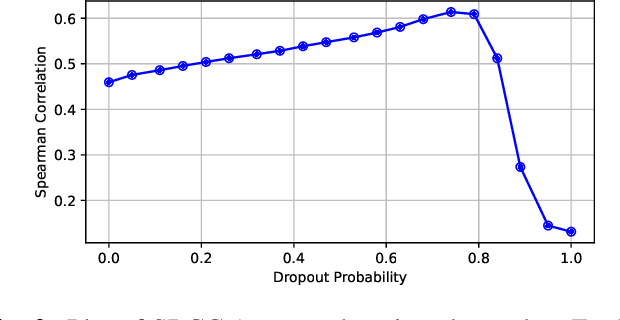

Predicting audio quality in voice synthesis and conversion systems is a critical yet challenging task, because the standard measure, the Mean Opinion Score (MOS), is cumbersome to collect at scale. This paper addresses the need for efficient audio quality prediction in low-resource settings, where extensive MOS data from large-scale listening tests may be unavailable. We demonstrate that uncertainty measures derived from out-of-the-box pretrained self-supervised learning (SSL) models, such as wav2vec, correlate with MOS. These findings are based on data from the 2022 and 2023 VoiceMOS challenges. We explore the extent of this correlation across different models and language contexts, showing how the inherent uncertainty of SSL models can serve as an effective proxy for audio quality. In particular, we show that the contrastive wav2vec models perform best in all settings.
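As a rough illustration of the idea, the sketch below scores an utterance by the mean entropy of per-frame softmax distributions and then measures rank correlation against listener ratings. The abstract does not specify which uncertainty measure the paper uses, so the entropy-based score, the function names `mean_frame_entropy` and `spearman`, and the assumption that a model exposes per-frame logits of shape `(num_frames, num_classes)` are all illustrative choices, not the authors' method. The demo inputs are random placeholders, not VoiceMOS data.

```python
import numpy as np


def mean_frame_entropy(logits: np.ndarray) -> float:
    """Mean Shannon entropy (in nats) of per-frame softmax distributions.

    logits: array of shape (num_frames, num_classes). Assumed here to come
    from some pretrained SSL model; this is a hypothetical interface.
    """
    # Numerically stable log-softmax over the class axis.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    probs = np.exp(log_probs)
    frame_entropy = -(probs * log_probs).sum(axis=1)
    return float(frame_entropy.mean())


def spearman(x, y) -> float:
    """Spearman rank correlation. Simplified: assumes no tied values."""
    rank_x = np.argsort(np.argsort(x)).astype(float)
    rank_y = np.argsort(np.argsort(y)).astype(float)
    return float(np.corrcoef(rank_x, rank_y)[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Placeholder "utterances": random logits at increasing sharpness,
    # standing in for model outputs on real audio.
    toy_logits = [rng.normal(size=(50, 32)) * s for s in (0.5, 1.0, 2.0, 4.0)]
    # Negate entropy so that a higher score means lower uncertainty.
    scores = [-mean_frame_entropy(l) for l in toy_logits]
    # Made-up ratings for illustration only; not real listening-test data.
    placeholder_mos = [1.5, 2.5, 3.5, 4.5]
    print("Spearman correlation on toy data:", spearman(scores, placeholder_mos))
```

In a zero-shot setting of this kind no MOS labels are used for training; the ratings are needed only to evaluate how well the uncertainty score ranks the utterances.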
