UDoc-GAN: Unpaired Document Illumination Correction with Background Light Prior

Paper and Code

Oct 15, 2022

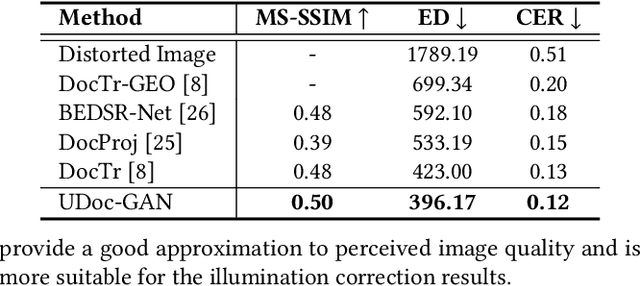

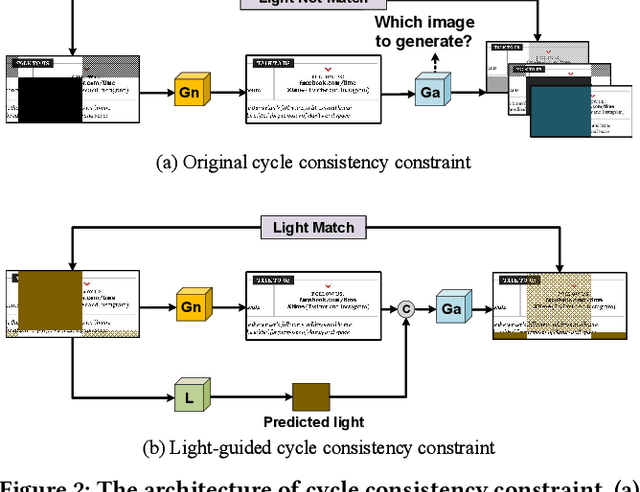

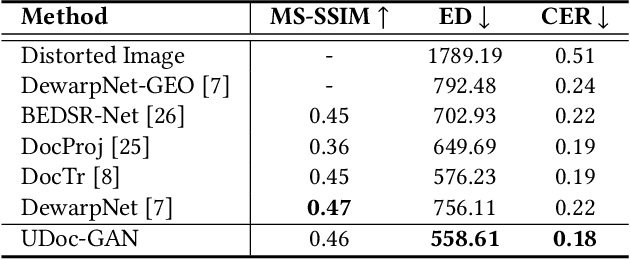

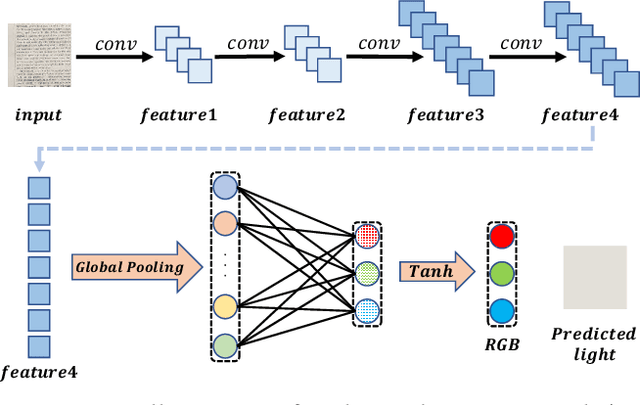

Document images captured by mobile devices are usually degraded by uncontrollable illumination, which hampers the clarity of document content. Recently, a series of research efforts have been devoted to correcting uneven document illumination. However, existing methods rarely make use of ambient light information, and they usually rely on paired samples, consisting of degraded images and their corrected ground-truth counterparts, which are not always accessible. To this end, we propose UDoc-GAN, the first framework to address the problem of document illumination correction under the unpaired setting. Specifically, we first predict the ambient light features of the document. Then, according to the characteristics of different levels of ambient light, we re-formulate the cycle consistency constraint to learn the underlying relationship between the normal and abnormal illumination domains. To demonstrate the effectiveness of our approach, we conduct extensive experiments on the DocProj dataset under the unpaired setting. Compared with state-of-the-art approaches, our method demonstrates promising performance in terms of character error rate (CER) and edit distance (ED), together with better qualitative results for textual detail preservation. The source code is now publicly available at https://github.com/harrytea/UDoc-GAN.
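For readers unfamiliar with the two metrics named above, the following is a minimal sketch of how edit distance (ED) and character error rate (CER) are commonly computed between a reference transcript and OCR output. It assumes the standard Levenshtein definition and CER = ED / reference length; the function names are illustrative, and the paper's exact evaluation protocol (OCR engine, text normalization) is not specified here.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum number of single-character
    insertions, deletions, and substitutions turning reference into hypothesis."""
    # prev[j] holds the distance between the first i-1 reference characters
    # and the first j hypothesis characters.
    prev = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        curr = [i]  # distance from i reference characters to an empty hypothesis
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution_cost = 0 if ref_char == hyp_char else 1
            curr.append(min(
                prev[j] + 1,                      # delete ref_char
                curr[j - 1] + 1,                  # insert hyp_char
                prev[j - 1] + substitution_cost,  # match or substitute
            ))
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER under the assumed definition: edit distance divided by reference length."""
    if not reference:
        raise ValueError("reference text must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    # Hypothetical example: OCR misreads two letters on a poorly lit page.
    truth = "illumination"
    ocr_output = "i11umination"
    print(edit_distance(truth, ocr_output))
    print(character_error_rate(truth, ocr_output))
```

Under this definition, lower values of both metrics indicate that OCR on the corrected image recovers the text more faithfully.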
