TwinCL: A Twin Graph Contrastive Learning Model for Collaborative Filtering

Sep 27, 2024

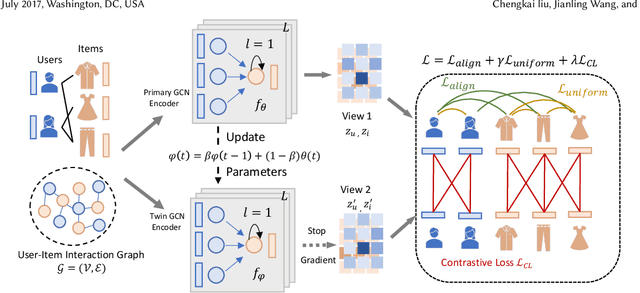

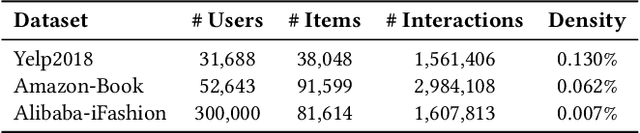

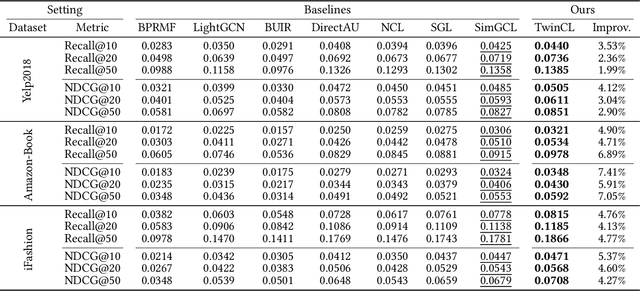

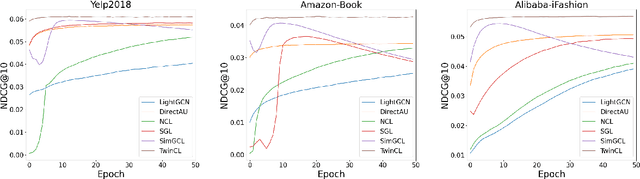

In recommendation and collaborative filtering, Graph Contrastive Learning (GCL) has become an influential approach. Nevertheless, the reasons for the effectiveness of contrastive learning are still not well understood. In this paper, we challenge the conventional use of random augmentations on the graph structure or the embedding space in GCL, which may disrupt the structural and semantic information inherent in Graph Neural Networks. Moreover, fixed-rate data augmentation proves less effective than augmentation with an adaptive rate: in the initial training phases, significant perturbations are more suitable, while milder perturbations yield better results as training approaches convergence. We introduce a twin encoder in place of random augmentations, demonstrating the redundancy of traditional augmentation techniques. The twin encoder updating mechanism generates more diverse contrastive views in the early stages and transitions to views with greater similarity as training progresses. In addition, we investigate the learned representations from the perspective of alignment and uniformity on a hypersphere in order to optimize more efficiently. Our proposed Twin Graph Contrastive Learning model, TwinCL, aligns positive pairs of user and item embeddings with each other and with the representations from the twin encoder, while maintaining the uniformity of the embeddings on the hypersphere. Our theoretical analysis and experimental results show that optimizing alignment and uniformity with the twin encoder improves both recommendation accuracy and training efficiency. In comprehensive experiments on three public datasets, TwinCL achieves an average improvement of 5.6% (NDCG@10) in recommendation accuracy with faster training speed, while effectively mitigating popularity bias.
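
To make the alignment and uniformity objectives mentioned in the abstract more concrete, the following is a minimal sketch of the two quantities as they are commonly defined for unit-norm embeddings: alignment as the mean squared distance between positive pairs, and uniformity as the log of the mean Gaussian potential over all distinct pairs. This is not the TwinCL implementation. The function names, the temperature `t`, the weight `gamma`, and the way the two terms are combined are all assumptions chosen for illustration, and the twin encoder itself is omitted because the abstract does not specify its update rule.

```python
import math


def l2_normalize(v):
    """Project a vector onto the unit hypersphere."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def alignment(users, items):
    """Mean squared distance over positive (user, item) pairs.

    users[k] and items[k] are assumed to form the k-th positive pair.
    """
    pairs = list(zip(users, items))
    return sum(sq_dist(u, i) for u, i in pairs) / len(pairs)


def uniformity(embs, t=2.0):
    """Log of the mean Gaussian potential over all distinct pairs.

    Lower values mean the embeddings are spread more evenly.
    t is a hypothetical temperature, not a value from the paper.
    """
    n = len(embs)
    vals = [
        math.exp(-t * sq_dist(embs[a], embs[b]))
        for a in range(n)
        for b in range(a + 1, n)
    ]
    return math.log(sum(vals) / len(vals))


def combined_loss(users, items, gamma=1.0):
    """Illustrative combination: alignment plus weighted uniformity.

    gamma and the averaging over both sides are assumptions.
    """
    uni = (uniformity(users) + uniformity(items)) / 2
    return alignment(users, items) + gamma * uni


# Toy example with 2-D embeddings normalized to unit length.
users = [l2_normalize(v) for v in [[1.0, 0.0], [0.0, 2.0]]]
items = [l2_normalize(v) for v in [[3.0, 0.0], [0.0, 1.0]]]
loss = combined_loss(users, items)
```

In the toy example each user embedding coincides with its paired item embedding after normalization, so the alignment term is zero and the loss is driven entirely by the uniformity term.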
