TTAPS: Test-Time Adaption by Aligning Prototypes using Self-Supervision

May 18, 2022

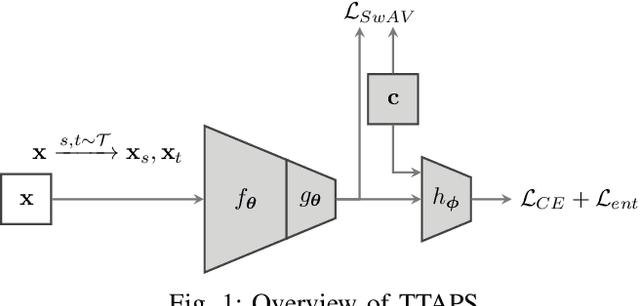

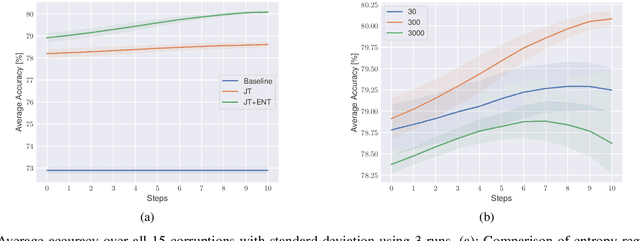

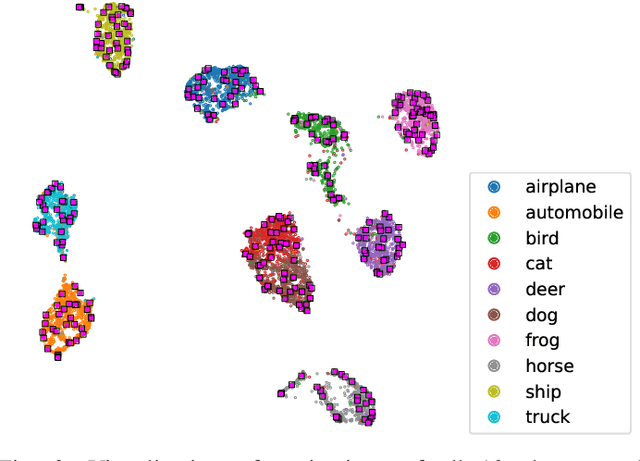

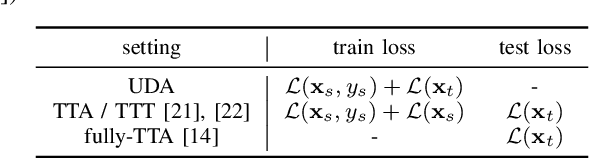

Nowadays, deep neural networks outperform humans in many tasks. However, if the input distribution drifts away from the one used in training, their performance drops significantly. Recently published research has shown that adapting the model parameters to the test sample can mitigate this performance degradation. In this paper, we therefore propose a novel modification of the self-supervised training algorithm SwAV that adds the ability to adapt to single test samples. Using the provided prototypes of SwAV and our derived test-time loss, we align the representation of unseen test samples with the self-supervised learned prototypes. We show the success of our method on the common benchmark dataset CIFAR10-C.

* Accepted at the International Joint Conference on Neural Networks (IJCNN) 2022
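
The abstract describes aligning the representation of a test sample with prototypes learned by SwAV. The sketch below illustrates the general idea with a generic SwAV-style swapped-prediction loss computed over augmented views of one test sample. It is not the paper's derived test-time loss: the function name `swapped_prediction_loss`, the temperatures, and the use of a sharpened softmax as a stand-in for SwAV's Sinkhorn-based codes are all assumptions made for illustration.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors along `axis` to unit L2 norm."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def swapped_prediction_loss(z_a, z_b, prototypes,
                            temperature=0.1, target_temperature=0.05):
    """Hypothetical SwAV-style loss for views of a single test sample.

    z_a, z_b:    (n_views, d) embeddings of two sets of augmented views.
    prototypes:  (k, d) prototype vectors, kept fixed here.
    Assumption: targets come from a sharpened softmax, not from the
    Sinkhorn-Knopp step that SwAV uses during training.
    """
    z_a, z_b = l2_normalize(z_a), l2_normalize(z_b)
    c = l2_normalize(prototypes)

    # Cosine similarity of every view to every prototype.
    scores_a = z_a @ c.T
    scores_b = z_b @ c.T

    # Predicted prototype distributions.
    p_a = softmax(scores_a / temperature)
    p_b = softmax(scores_b / temperature)

    # Soft targets ("codes"), sharper than the predictions.
    q_a = softmax(scores_a / target_temperature)
    q_b = softmax(scores_b / target_temperature)

    # Swapped prediction: each view predicts the other view's code.
    ce_ab = -np.sum(q_b * np.log(p_a + 1e-12), axis=1)
    ce_ba = -np.sum(q_a * np.log(p_b + 1e-12), axis=1)
    return 0.5 * float(np.mean(ce_ab + ce_ba))


# Toy usage: three orthogonal prototypes in a 3-dimensional space.
prototypes = np.eye(3)
view_a = np.array([[1.0, 0.0, 0.0]])
view_b = np.array([[0.9, 0.1, 0.0]])
print(swapped_prediction_loss(view_a, view_b, prototypes))
```

In a test-time adaptation loop, a loss of this kind would typically be minimized with a few gradient steps on the encoder parameters before predicting on the sample, which requires an automatic-differentiation framework rather than plain NumPy.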
