TSMixer: An all-MLP Architecture for Time Series Forecasting


Mar 10, 2023

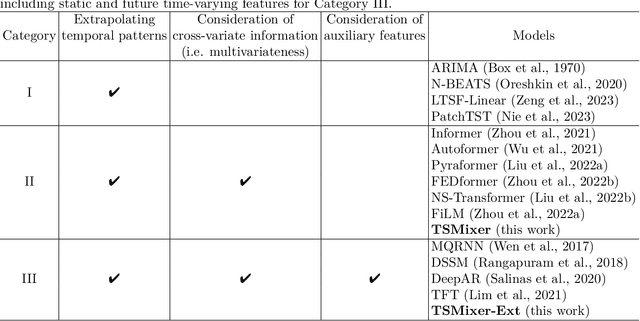

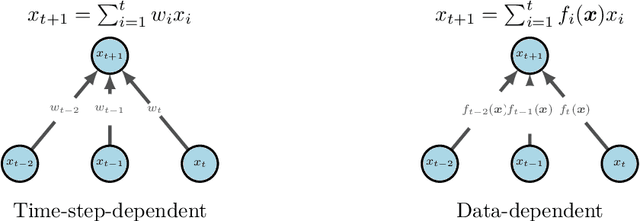

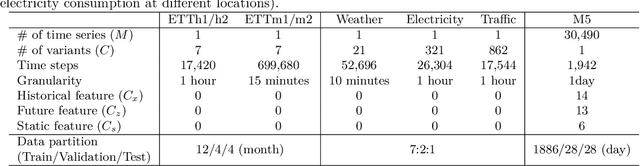

Real-world time-series datasets are often multivariate with complex dynamics. High-capacity architectures such as recurrent or attention-based sequential models have become popular for this setting. However, recent work demonstrates that simple univariate linear models can outperform those deep alternatives. In this paper, we investigate the capabilities of linear models for time-series forecasting and present Time-Series Mixer (TSMixer), an architecture designed by stacking multi-layer perceptrons (MLPs). TSMixer is based on mixing operations along the time and feature dimensions to extract information efficiently. On popular academic benchmarks, the simple-to-implement TSMixer is comparable to specialized state-of-the-art models that leverage the inductive biases of specific benchmarks. On the challenging, large-scale M5 benchmark, a real-world retail dataset, TSMixer demonstrates superior performance compared to the state-of-the-art alternatives. Our results underline the importance of efficiently utilizing cross-variate and auxiliary information for improving the performance of time-series forecasting. The design paradigms utilized in TSMixer are expected to open new horizons for deep learning-based time-series forecasting.
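To make the idea of mixing along the time and feature dimensions concrete, here is a minimal NumPy sketch of one possible mixer block. It is an illustration under stated assumptions, not the authors' implementation: the function names (`time_mixing`, `feature_mixing`, `mixer_block`, `forecast`), the parameter layout, the ReLU activation, the residual connections, and the final linear projection from input length to forecast horizon are all hypothetical choices, and normalization and dropout are omitted.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def time_mixing(x, w_time, b_time):
    """Mix information across time steps; weights are shared by all features.

    x: (n_time, n_feat), w_time: (n_time, n_time), b_time: (n_time,)
    """
    # Transpose so the dense layer acts along the time axis, then transpose back.
    mixed = relu(x.T @ w_time + b_time).T
    return x + mixed  # residual connection (assumed)


def feature_mixing(x, w1, b1, w2, b2):
    """Mix information across features; weights are shared by all time steps.

    x: (n_time, n_feat), w1: (n_feat, n_hidden), w2: (n_hidden, n_feat)
    """
    hidden = relu(x @ w1 + b1)
    return x + (hidden @ w2 + b2)  # residual connection (assumed)


def mixer_block(x, params):
    """One block: time mixing followed by feature mixing."""
    x = time_mixing(x, params["w_time"], params["b_time"])
    return feature_mixing(x, params["w1"], params["b1"], params["w2"], params["b2"])


def init_params(rng, n_time, n_feat, n_hidden, scale=0.1):
    """Small random weights for one block (hypothetical initialization)."""
    return {
        "w_time": scale * rng.standard_normal((n_time, n_time)),
        "b_time": np.zeros(n_time),
        "w1": scale * rng.standard_normal((n_feat, n_hidden)),
        "b1": np.zeros(n_hidden),
        "w2": scale * rng.standard_normal((n_hidden, n_feat)),
        "b2": np.zeros(n_feat),
    }


def forecast(x, blocks, w_out, b_out):
    """Stack mixer blocks, then map n_time input steps to `horizon` output steps.

    w_out: (n_time, horizon), b_out: (horizon,). Returns (horizon, n_feat).
    """
    for params in blocks:
        x = mixer_block(x, params)
    return (x.T @ w_out + b_out).T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_time, n_feat, n_hidden, horizon = 8, 3, 16, 4
    x = rng.standard_normal((n_time, n_feat))
    blocks = [init_params(rng, n_time, n_feat, n_hidden) for _ in range(2)]
    w_out = 0.1 * rng.standard_normal((n_time, horizon))
    y = forecast(x, blocks, w_out, np.zeros(horizon))
    print(y.shape)
```

In this sketch the time-mixing layer only ever combines values of the same variable at different time steps, while the feature-mixing layer only combines different variables at the same time step. Alternating the two lets a stack of blocks use cross-variate information without recurrence or attention.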
