TruVR: Trustworthy Cybersickness Detection using Explainable Machine Learning

Sep 12, 2022

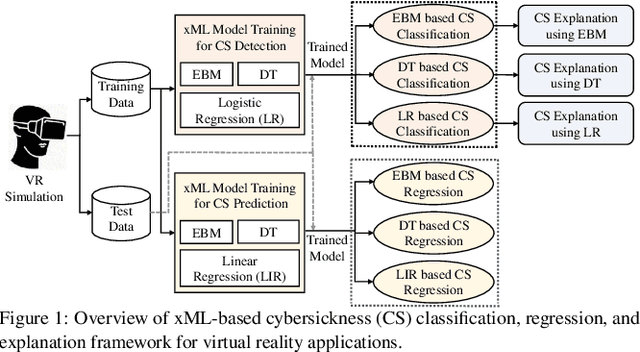

Cybersickness is characterized by nausea, vertigo, headache, eye strain, and other discomforts experienced while using virtual reality (VR) systems. Previously reported machine learning (ML) and deep learning (DL) algorithms for detecting (classification) and predicting (regression) VR cybersickness use black-box models and therefore lack explainability. Moreover, VR sensors generate a massive amount of data, resulting in large and complex models. Inherent explainability in cybersickness detection models can therefore significantly improve a model's trustworthiness and provide insight into why and how the ML/DL model arrived at a specific decision. To address this issue, we present three explainable machine learning (xML) models to detect and predict cybersickness: 1) explainable boosting machine (EBM), 2) decision tree (DT), and 3) logistic regression (LR). We evaluate the xML-based models on publicly available physiological and gameplay datasets for cybersickness. The results show that the EBM detects cybersickness with accuracies of 99.75% and 94.10% on the physiological and gameplay datasets, respectively. When predicting cybersickness, the EBM achieves a root mean square error (RMSE) of 0.071 on the physiological dataset and 0.27 on the gameplay dataset. Furthermore, the EBM-based global explanation reveals exposure length, rotation, and acceleration as the key features causing cybersickness in the gameplay dataset, whereas galvanic skin response and heart rate are the most significant features in the physiological dataset. Our results also suggest that the EBM-based local explanation can identify cybersickness-causing factors for individual samples. We believe the proposed xML-based cybersickness detection method can help future researchers understand, analyze, and design simpler cybersickness detection and reduction models.
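The two figures of merit quoted above, classification accuracy for detection and RMSE for prediction, can be illustrated with a minimal sketch. This is not the authors' evaluation code. The function names and the toy values are hypothetical and chosen only to show how each metric is computed.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class matches the true class."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error between true and predicted numeric scores."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical toy values, NOT taken from the paper's datasets.
labels_true = [0, 1, 1, 0]            # detection: sick (1) vs. not sick (0)
labels_pred = [0, 1, 0, 0]
scores_true = [0.0, 1.0, 2.0, 3.0]    # prediction: a numeric severity score
scores_pred = [0.0, 1.0, 2.0, 1.0]

print(accuracy(labels_true, labels_pred))  # 0.75
print(rmse(scores_true, scores_pred))      # 1.0
```

Accuracy applies to the detection (classification) task and RMSE to the prediction (regression) task, so the two numbers are not directly comparable with each other.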
