Trusted Neural Networks for Safety-Constrained Autonomous Control


May 18, 2018

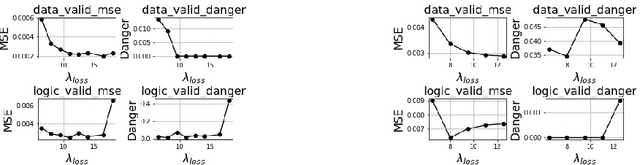

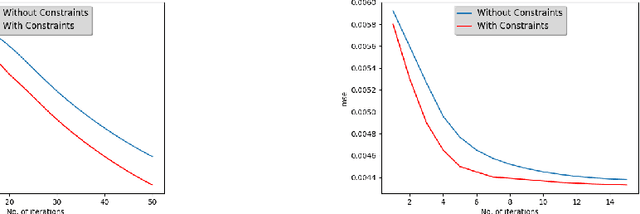

We propose Trusted Neural Network (TNN) models: deep neural network models that satisfy safety constraints critical to the application domain. We investigate different mechanisms for incorporating rule-based knowledge, in the form of first-order logic constraints, into a TNN model, where each rule that encodes safety is accompanied by a weight indicating its relative importance. This framework allows the TNN model to learn from knowledge available in the form of data as well as logical rules. We propose two approaches for solving this problem: (a) a multi-headed model structure that allows a trade-off between satisfying logical constraints and fitting training data in a unified training framework, and (b) a constrained optimization problem, solved in its dual formulation by posing a new constrained loss function and using a proximal gradient descent algorithm. We demonstrate the efficacy of our TNN framework through experiments using the open-source TORCS [BernhardCAA15] 3D simulator for self-driving cars. Experiments using the first approach, a multi-headed TNN model, on a dataset generated by a customized version of TORCS show that (1) adding safety constraints to a neural network model results in increased performance and safety, and (2) the improvement grows as the importance assigned to the safety constraints increases. Experiments using the second approach, a proximal algorithm for constrained optimization, demonstrate that (1) the overall TNN model satisfies the constraints even when the training data violates some of them, and (2) the proximal gradient descent algorithm on the constrained objective converges faster than the unconstrained version.
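The two training ideas in the abstract can be illustrated on a toy problem. The sketch below is not the paper's TNN or its loss. It assumes a one-parameter linear controller `u = w * x`, a hypothetical safety rule `|w| <= W_MAX`, and training data that deliberately violates that rule. `train_weighted` uses a plain weighted soft penalty as a stand-in for the trade-off in approach (a), not a multi-headed network. `train_proximal` uses projection onto the feasible interval as the proximal step, loosely in the spirit of approach (b). All names and constants are assumptions made for illustration.

```python
# Illustrative sketch only: a 1-D linear "controller" u = w * x, not the paper's TNN.
# Hypothetical safety rule (an assumption): the gain must satisfy |w| <= W_MAX.

W_MAX = 1.0  # assumed safety bound


def data_grad(w, xs, us):
    """Gradient of the data loss 0.5 * mean((w*x - u)^2) with respect to w."""
    return sum((w * x - u) * x for x, u in zip(xs, us)) / len(xs)


def violation_grad(w):
    """Gradient of the soft penalty 0.5 * max(0, |w| - W_MAX)^2."""
    excess = max(0.0, abs(w) - W_MAX)
    return excess if w >= 0 else -excess


def train_weighted(xs, us, rule_weight, lr=0.01, steps=5000):
    """Soft trade-off: minimize data loss + rule_weight * penalty."""
    w = 0.0
    for _ in range(steps):
        w -= lr * (data_grad(w, xs, us) + rule_weight * violation_grad(w))
    return w


def project(w):
    """Proximal operator of the constraint's indicator: clip w to [-W_MAX, W_MAX]."""
    return max(-W_MAX, min(W_MAX, w))


def train_proximal(xs, us, lr=0.01, steps=5000):
    """Hard constraint: gradient step on the data loss, then the proximal step."""
    w = 0.0
    for _ in range(steps):
        w = project(w - lr * data_grad(w, xs, us))
    return w


# Training data generated with gain 2.0, which violates the rule |w| <= 1.
xs = [0.5, 1.0, 1.5, 2.0]
us = [2.0 * x for x in xs]

w_soft_low = train_weighted(xs, us, rule_weight=1.0)
w_soft_high = train_weighted(xs, us, rule_weight=100.0)
w_hard = train_proximal(xs, us)
```

In this toy setting, a larger `rule_weight` pulls the soft-penalty solution closer to the bound without strictly enforcing it, while the proximal variant keeps the parameter inside the feasible interval at every step, even though the data alone would favor a gain outside it.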
