Transformers Are Better Than Humans at Identifying Generated Text

Sep 29, 2020

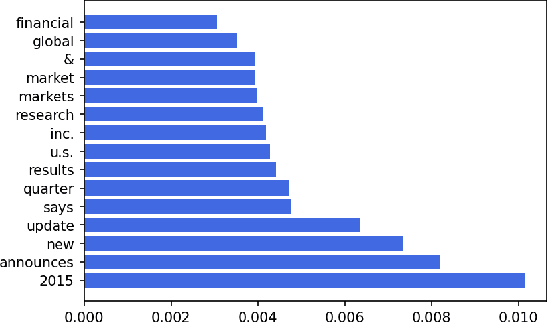

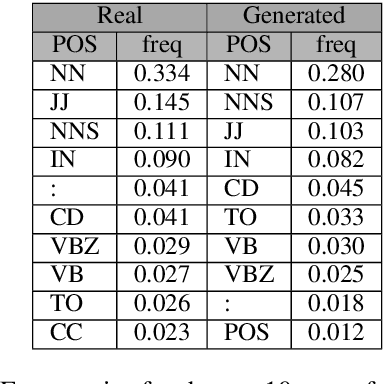

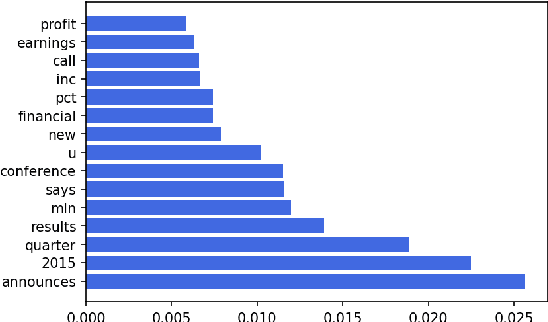

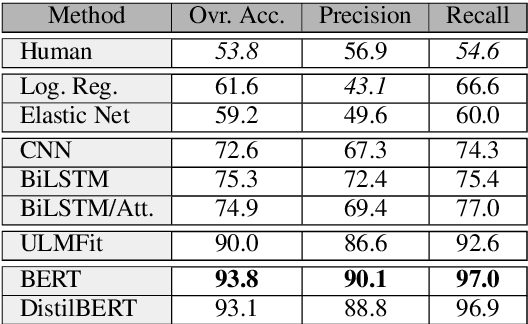

Fake information spread via the internet and social media influences public opinion and user activity. Generative models enable fake content to be produced faster and more cheaply than was previously possible. This paper examines the problem of identifying fake content generated by lightweight deep learning models. A dataset containing human- and machine-generated headlines was created, and a user study indicated that humans were able to identify the fake headlines in only 45.3% of cases. However, the most accurate automatic approach, transformers, achieved an accuracy of 94%, indicating that content generated by language models can be filtered out accurately.
