Trajectory Inspection: A Method for Iterative Clinician-Driven Design of Reinforcement Learning Studies


Oct 08, 2020

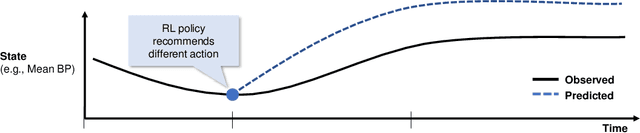

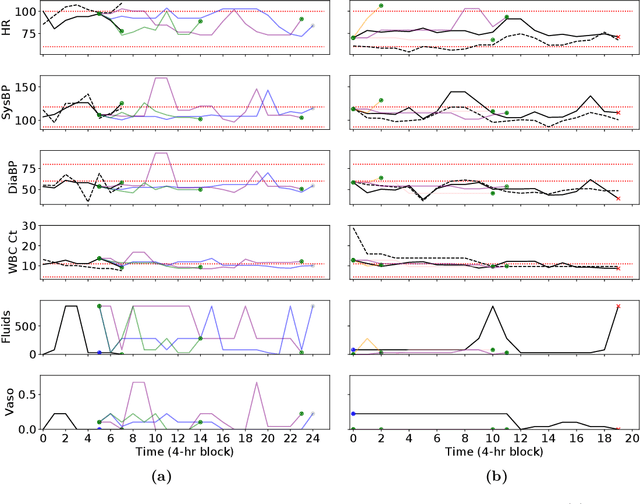

Treatment policies learned via reinforcement learning (RL) from observational health data are sensitive to subtle choices in study design. We highlight a simple approach, trajectory inspection, to bring clinicians into an iterative design process for model-based RL studies. We inspect trajectories where the model recommends unexpectedly aggressive treatments or believes its recommendations would lead to much more positive outcomes. Then, we examine clinical trajectories simulated with the learned model and policy alongside the actual hospital course to uncover possible modeling issues. To demonstrate that this approach yields insights, we apply it to recent work on RL for inpatient sepsis management. We find that a design choice around maximum trajectory length leads to a model bias towards discharge, that the RL policy preference for high vasopressor doses may be linked to small sample sizes, and that the model has a clinically implausible expectation of discharge without weaning off vasopressors.
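The selection step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the `TrajectorySummary` record, its field names, and the `select_for_inspection` helper are all hypothetical, and the sketch assumes that each patient trajectory has already been reduced to an observed outcome and a model-expected outcome under the learned policy. It ranks trajectories by how much more optimistic the model is than the actual hospital course and returns the top few for clinician review.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrajectorySummary:
    """Hypothetical per-patient summary; field names are illustrative assumptions."""
    patient_id: str
    observed_return: float        # outcome actually observed in the hospital course
    model_expected_return: float  # outcome the learned model expects under the RL policy


def select_for_inspection(
    summaries: List[TrajectorySummary], top_k: int = 3
) -> List[TrajectorySummary]:
    """Return the top_k trajectories where the model is most optimistic
    relative to the observed outcome (largest expected-minus-observed gap)."""
    ranked = sorted(
        summaries,
        key=lambda s: s.model_expected_return - s.observed_return,
        reverse=True,
    )
    return ranked[:top_k]
```

The same ranking idea could be applied to a different gap, for example recommended versus administered treatment intensity, to surface unexpectedly aggressive recommendations. The selected trajectories would then be simulated with the learned model and policy and compared against the actual hospital course.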
