Training Efficient Network Architecture and Weights via Direct Sparsity Control


Feb 11, 2020

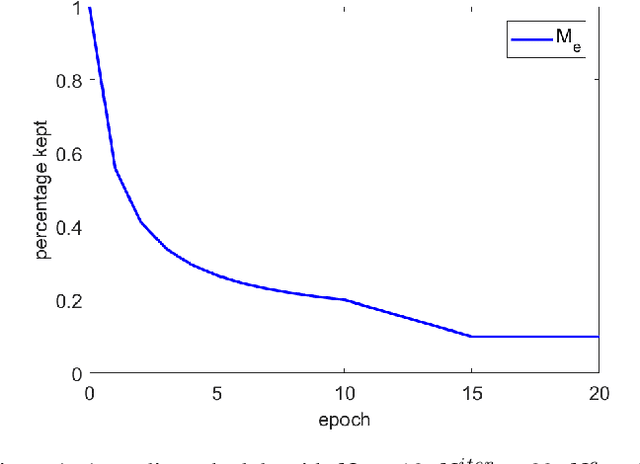

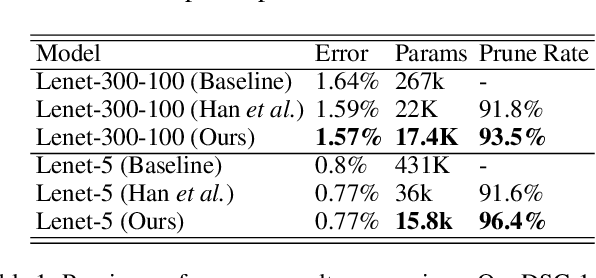

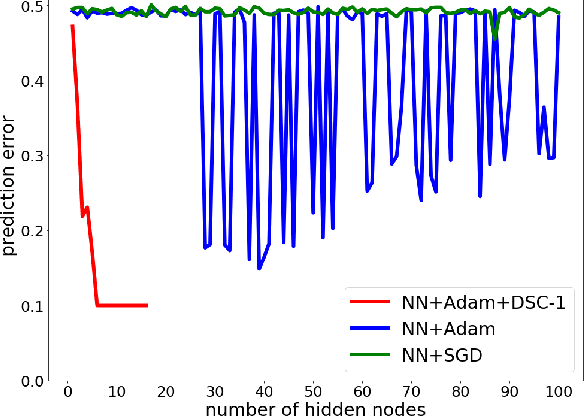

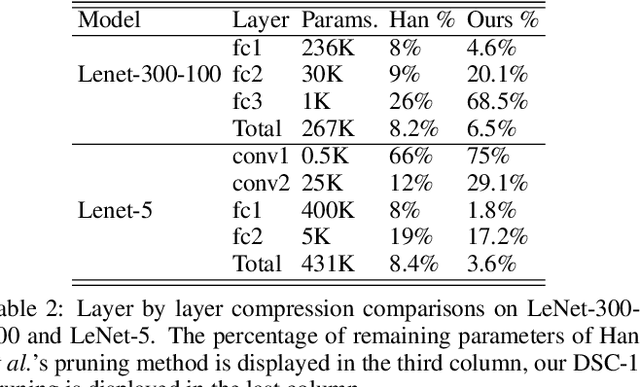

Artificial neural networks (ANNs), especially deep convolutional networks, are very popular and have proven to offer reliable solutions to many vision problems. However, their intensive computational and memory costs widely impede their deployment. In this paper, we propose a novel, efficient network pruning method that is suitable for both non-structured pruning and structured channel-level pruning. Our method tightens a sparsity constraint by gradually removing network parameters or filter channels according to a criterion and a schedule. Since the network size keeps decreasing throughout the iterations, the method can prune any network, whether untrained or pre-trained. Because it uses an L0 constraint instead of an L1 penalty, it does not introduce the shrinkage bias that an L1 penalty imposes on the trained parameters or filter channels. Furthermore, the L0 constraint makes it easy to specify the desired sparsity level directly during pruning. Finally, experiments on both synthetic and real datasets show that the proposed method achieves better or competitive performance compared with other state-of-the-art network pruning methods.
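The abstract does not spell out the pruning criterion or the schedule, so the following is only a minimal sketch of the general idea under stated assumptions, not the authors' implementation. It assumes a magnitude criterion (keep the largest weights), a hypothetical annealing schedule `keep_schedule` whose budget shrinks from the full parameter count to a user-chosen `target`, and a synthetic sparse linear regression model in place of a CNN. The structured, channel-level variant is illustrated by `prune_channels`, which ranks whole filters by their L2 norm (also an assumption). All function names here are hypothetical.

```python
import random


def keep_schedule(epoch, total, target, n_epochs, mu=5.0):
    """Number of weights allowed to survive after `epoch`.

    Hypothetical annealing schedule (an assumption, not the paper's rule):
    equals `total` at epoch 0, decreases monotonically, and reaches `target`
    once 2 * epoch >= n_epochs.
    """
    frac = max(0.0, (n_epochs - 2 * epoch) / (2 * epoch * mu + n_epochs))
    return target + int((total - target) * frac)


def prune_to_k(weights, mask, k):
    """Project onto the L0 constraint ||w||_0 <= k, in place.

    Assumed criterion: keep the k surviving weights of largest magnitude.
    Removed weights are zeroed and never return; kept weights are not
    shrunk, unlike under an L1 penalty.
    """
    alive = [i for i, m in enumerate(mask) if m]
    alive.sort(key=lambda i: abs(weights[i]), reverse=True)
    keep = set(alive[:k])
    for i in range(len(weights)):
        if i not in keep:
            mask[i] = False
            weights[i] = 0.0


def prune_channels(filters, k):
    """Structured variant (assumed criterion): indices of the k filters with largest L2 norm."""
    norms = [sum(v * v for v in f) ** 0.5 for f in filters]
    order = sorted(range(len(filters)), key=lambda i: norms[i], reverse=True)
    return sorted(order[:k])


def train_sparse_linear(X, y, target, n_epochs=200, lr=0.1):
    """Gradient descent on 0.5 * mean squared error, tightening the L0 budget every epoch."""
    n, p = len(X), len(X[0])
    w, mask = [0.0] * p, [True] * p
    for epoch in range(n_epochs):
        resid = [sum(X[r][j] * w[j] for j in range(p)) - y[r] for r in range(n)]
        grad = [sum(X[r][j] * resid[r] for r in range(n)) / n for j in range(p)]
        for j in range(p):
            if mask[j]:  # pruned weights stay at zero
                w[j] -= lr * grad[j]
        prune_to_k(w, mask, keep_schedule(epoch + 1, p, target, n_epochs))
    return w, mask


def make_data(n=200, p=20, seed=0):
    """Synthetic sparse regression: only features 2, 7 and 11 carry signal."""
    rng = random.Random(seed)
    true_w = [0.0] * p
    true_w[2], true_w[7], true_w[11] = 3.0, -2.0, 1.5
    X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
    y = [sum(x[j] * true_w[j] for j in range(p)) + rng.gauss(0.0, 0.1) for x in X]
    return X, y, true_w


if __name__ == "__main__":
    X, y, true_w = make_data()
    w, mask = train_sparse_linear(X, y, target=3)
    print("kept indices:", [i for i, m in enumerate(mask) if m])
    print("kept weights:", [round(w[i], 3) for i, m in enumerate(mask) if m])
```

Because the constraint is an explicit count (`target`), the final sparsity level is set directly rather than tuned indirectly through a penalty weight, and the surviving weights are fitted by plain gradient descent with no penalty term pulling them toward zero.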
