Training Dependency Parsers with Partial Annotation

Paper and Code

Sep 29, 2016

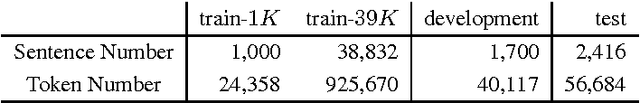

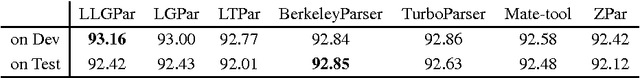

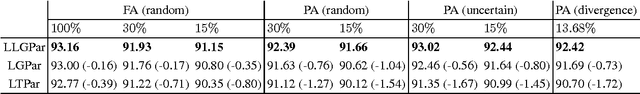

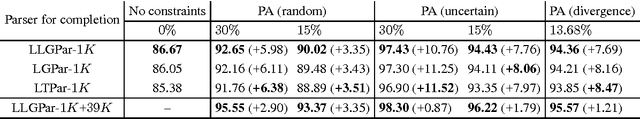

Recently, there has been a surge of interest in how to obtain partially annotated data for model supervision. However, there is still no systematic study of how to train statistical models with partial annotation (PA). Taking dependency parsing as our case study, this paper describes and compares two straightforward approaches for three mainstream dependency parsers. The first approach, proposed in previous work, directly trains a log-linear graph-based parser (LLGPar) with PA using a forest-based objective. The second approach, proposed here for the first time, directly trains a linear graph-based parser (LGPar) and a linear transition-based parser (LTPar) with PA based on the idea of constrained decoding. We conduct extensive experiments on the Penn Treebank under three different settings for simulating PA: random dependencies, the most uncertain dependencies, and dependencies on which the outputs of the three parsers diverge. The results show that LLGPar is the most effective at learning from PA, and that LTPar lags behind its graph-based counterparts by a large margin. Moreover, LGPar and LTPar achieve their best performance when LLGPar is used to complete PA into full annotation (FA).
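To make the "random dependencies" setting concrete, the following is a minimal Python sketch of how PA could be simulated from a fully annotated tree, together with the consistency check that underlies both the forest-based objective and constrained decoding (only trees that agree with the annotated dependencies are admissible). The function names `simulate_random_pa` and `is_consistent`, the head-list encoding, and the `keep_ratio` parameter are assumptions made for illustration; they are not taken from the paper or its code.

```python
import random
from typing import List, Optional


def simulate_random_pa(
    heads: List[int], keep_ratio: float, seed: int = 0
) -> List[Optional[int]]:
    """Keep a random subset of head annotations from a full tree.

    heads[i] is the head index of token i+1 (0 denotes the root).
    Tokens whose head is dropped are marked None (unannotated).
    This encoding is an illustrative assumption, not the paper's format.
    """
    if not 0.0 <= keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in [0, 1]")
    rng = random.Random(seed)
    n_keep = round(len(heads) * keep_ratio)
    kept = set(rng.sample(range(len(heads)), n_keep))
    return [h if i in kept else None for i, h in enumerate(heads)]


def is_consistent(
    candidate_heads: List[int], partial_heads: List[Optional[int]]
) -> bool:
    """Return True if a candidate tree agrees with every annotated head.

    The set of all consistent trees plays the role of the "forest";
    constrained decoding would search only within this set.
    """
    if len(candidate_heads) != len(partial_heads):
        return False
    return all(
        gold is None or gold == pred
        for pred, gold in zip(candidate_heads, partial_heads)
    )


# Hypothetical 4-token sentence: token 2 is the root, tokens 1 and 3 attach
# to token 2, and token 4 attaches to token 3.
full_tree = [2, 0, 2, 3]
partial = simulate_random_pa(full_tree, keep_ratio=0.5, seed=1)
print(partial)
print(is_consistent(full_tree, partial))
```

The other two settings in the abstract (most uncertain dependencies, divergent parser outputs) would replace the random selection with a model-driven choice of which dependencies to keep; that part is not sketched here.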