Training custom modality-specific U-Net models with weak localizations for improved Tuberculosis segmentation and localization

Paper and Code

Mar 01, 2021

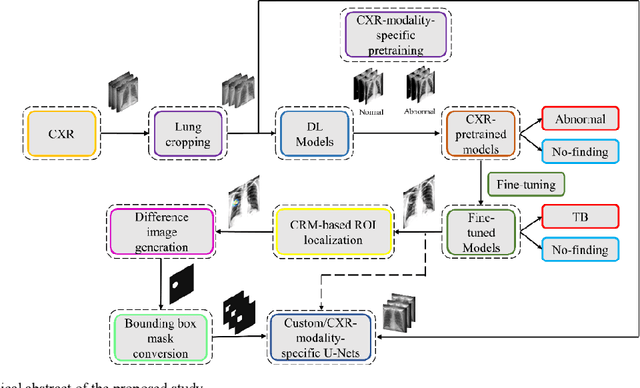

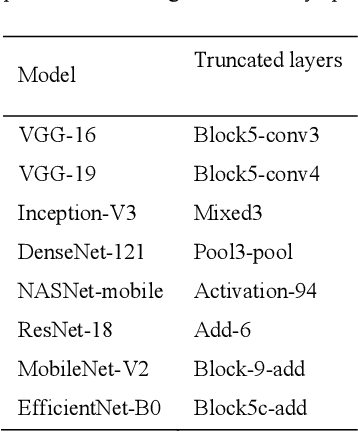

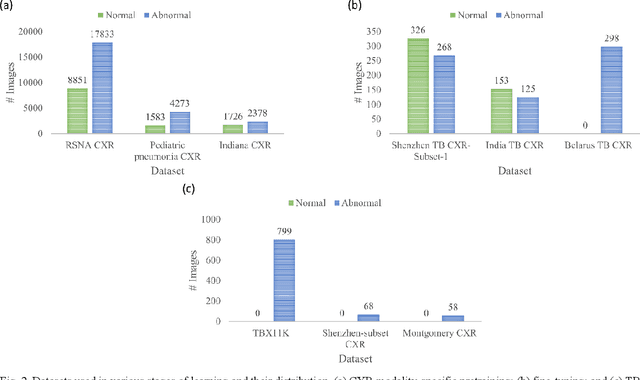

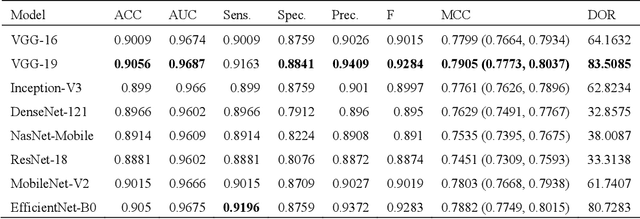

Deep learning (DL) has drawn tremendous attention in object localization and recognition for both natural and medical images. U-Net segmentation models have demonstrated superior performance compared to conventional hand-crafted feature-based methods. Medical image modality-specific DL models are better at transferring domain knowledge to a relevant target task than those that are pretrained on stock photography images. This helps improve model adaptation, generalization, and class-specific region of interest (ROI) localization. In this study, we train chest X-ray (CXR) modality-specific U-Nets and other state-of-the-art U-Net models for semantic segmentation of tuberculosis (TB)-consistent findings. Automated segmentation of such manifestations could help radiologists reduce errors and supplement decision-making while improving patient care and productivity. Our approach uses the publicly available TBX11K CXR dataset with weak TB annotations, typically provided as bounding boxes, to train a set of U-Net models. Next, we improve the results by augmenting the training data with weak localizations, post-processed into ROI masks, from a DL classifier that is trained to classify CXRs as showing normal lungs or suspected TB manifestations. Test data are derived separately from the TBX11K CXR training distribution and from other cross-institutional collections, including the Shenzhen TB and Montgomery TB CXR datasets. We observe that our augmented training strategy helps the CXR modality-specific U-Net models achieve superior performance with test data derived from the TBX11K CXR training distribution as well as from cross-institutional collections (p < 0.05).
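
The abstract describes two sources of weak supervision: bounding-box annotations and classifier-derived localizations post-processed into ROI masks. The following Python sketch illustrates one plausible way to turn both into binary training masks. It is not the authors' implementation: the function names, the `(x, y, w, h)` box layout, the min-max normalization, and the 0.5 threshold are all assumptions made for illustration, and the classifier localization is assumed to arrive as a 2D saliency map.

```python
import numpy as np


def boxes_to_mask(boxes, height, width):
    """Rasterize bounding boxes into a binary mask.

    Assumes each box is (x, y, w, h) in pixel coordinates (hypothetical layout).
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    for x, y, w, h in boxes:
        # Clip each box to the image bounds before filling it.
        x0, y0 = max(int(x), 0), max(int(y), 0)
        x1, y1 = min(int(x + w), width), min(int(y + h), height)
        if x1 > x0 and y1 > y0:
            mask[y0:y1, x0:x1] = 1
    return mask


def heatmap_to_mask(heatmap, threshold=0.5):
    """Threshold a 2D saliency map into a binary ROI mask.

    The map is min-max normalized to [0, 1] first; the 0.5 threshold is an
    illustrative assumption, not a value taken from the paper.
    """
    hm = np.asarray(heatmap, dtype=np.float32)
    value_range = hm.max() - hm.min()
    if value_range == 0:
        # A constant map carries no localization signal.
        return np.zeros(hm.shape, dtype=np.uint8)
    normalized = (hm - hm.min()) / value_range
    return (normalized >= threshold).astype(np.uint8)


def combine_masks(box_mask, roi_mask):
    """Take the pixel-wise union of two binary masks of the same shape."""
    return np.maximum(box_mask, roi_mask)
```

For example, `boxes_to_mask([(10, 20, 50, 40)], 512, 512)` yields a 512 x 512 mask with a filled 50 x 40 rectangle, which could serve as a segmentation target for a U-Net. Whether the two mask sources are merged per image or used as separate training samples is a design choice the abstract does not specify.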
