Training cascaded networks for speeded decisions using a temporal-difference loss


Feb 19, 2021

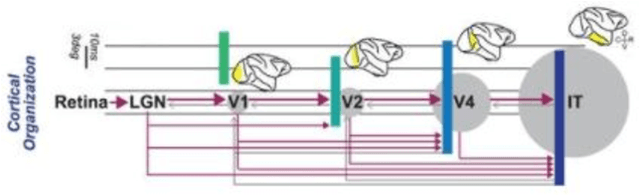

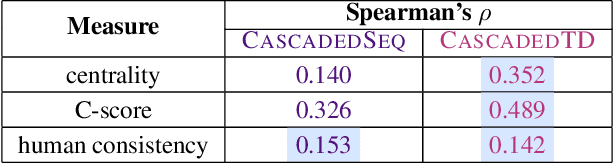

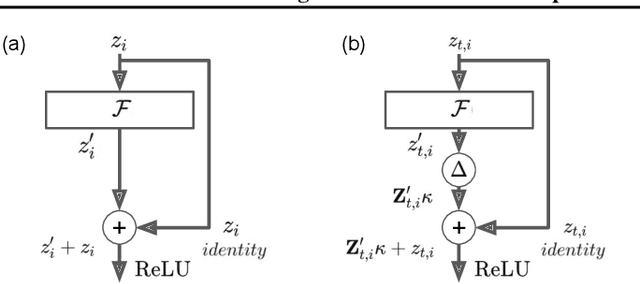

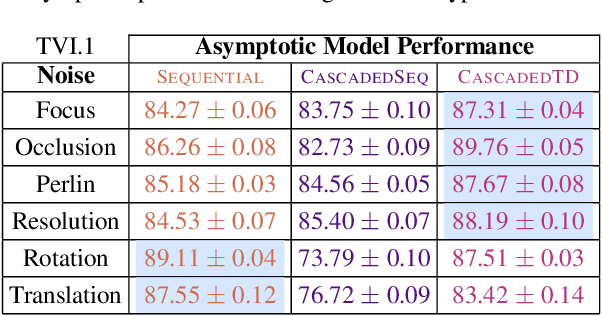

Although deep feedforward neural networks share some characteristics with the primate visual system, a key distinction is their dynamics. Deep nets typically operate in sequential stages, wherein each layer fully completes its computation before processing begins in subsequent layers. In contrast, biological systems have cascaded dynamics: information propagates from neurons at all layers in parallel, but transmission is gradual over time. In our work, we construct a cascaded ResNet by introducing a propagation delay into each residual block and updating all layers in parallel in a stateful manner. Because information transmitted through skip connections avoids delays, the functional depth of the architecture increases over time, yielding a trade-off between processing speed and accuracy. We introduce a temporal-difference (TD) training loss that achieves a strictly superior speed-accuracy profile over standard losses. The resulting CascadedTD model has intriguing properties: typical instances are classified more rapidly than atypical instances; CascadedTD is more robust to both persistent and transient noise than a conventional ResNet; and the time-varying output trace of CascadedTD provides a signal that "meta-cognitive" models can use for out-of-distribution (OOD) detection and to determine when to terminate processing.
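The cascaded dynamics described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation. It assumes a one-step propagation delay per residual branch, a constant input, and scalar `tanh` branches standing in for convolutional layers. The names `make_block`, `feedforward_resnet`, and `cascaded_resnet` are hypothetical. Under these assumptions, the output at step 0 is carried only by skip connections, and with N blocks the output matches the conventional feedforward result from step N onward.

```python
import math


def make_block(weight):
    """Hypothetical residual branch: a scalar tanh unit standing in for conv layers."""
    return lambda x: math.tanh(weight * x)


def feedforward_resnet(x, blocks):
    """Conventional ResNet: each block finishes before the next one starts."""
    h = x
    for f in blocks:
        h = h + f(h)
    return h


def cascaded_resnet(x, blocks, num_steps):
    """Cascaded variant (assumed one-step delay on every residual branch).

    Returns the network output at each time step for a constant input x.
    """
    # delayed[i] holds the residual-branch output that block i computed
    # at the previous time step; nothing has propagated before step 0.
    delayed = [0.0] * len(blocks)
    outputs = []
    for _ in range(num_steps):
        h = x
        new_delayed = []
        for f, d in zip(blocks, delayed):
            # The branch reads the current input, but its result only
            # becomes visible at the next time step.
            new_delayed.append(f(h))
            # The skip connection passes h through immediately; the
            # residual contribution is the delayed one.
            h = h + d
        delayed = new_delayed
        outputs.append(h)
    return outputs


blocks = [make_block(w) for w in (0.5, -0.3, 0.8)]
trace = cascaded_resnet(1.0, blocks, num_steps=len(blocks) + 1)
reference = feedforward_resnet(1.0, blocks)
for t, y in enumerate(trace):
    print(f"step {t}: output={y:.6f}  gap to feedforward={abs(y - reference):.6f}")
```

Reading the trace at an early step gives a fast answer from a shallow effective network, while waiting gives the full-depth answer. This is the speed-accuracy trade-off that the abstract refers to.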
