Towards Rapid and Robust Adversarial Training with One-Step Attacks

Mar 17, 2020

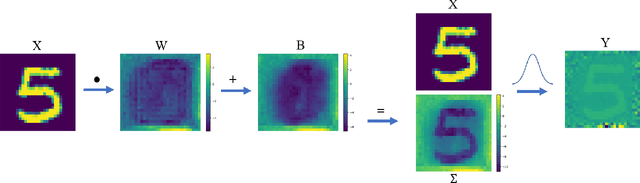

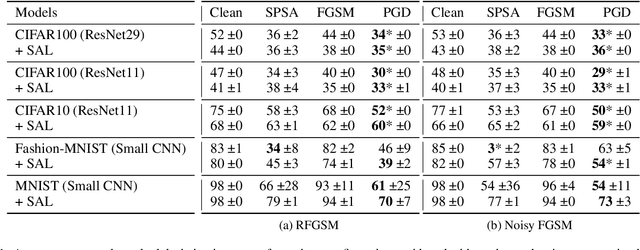

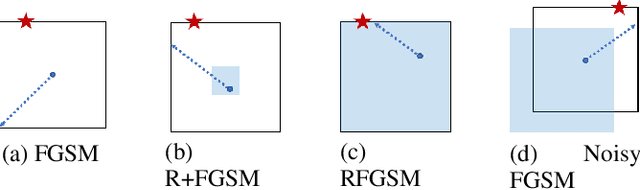

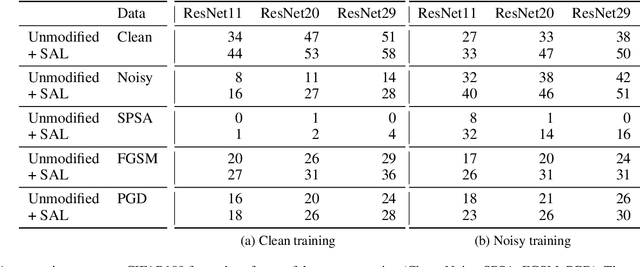

Adversarial training is the most successful empirical method for increasing the robustness of neural networks against adversarial attacks. However, the most effective approaches, such as training with Projected Gradient Descent (PGD), come at a high computational cost. In this paper, we present two ideas that, in combination, enable adversarial training with the computationally less expensive Fast Gradient Sign Method (FGSM). First, we add uniform noise to the initial data point of the FGSM attack, which creates a wider variety of adversaries and thus prevents overfitting to one particular perturbation bound. Second, we add a learnable regularization step prior to the neural network, which we call the Pixelwise Noise Injection Layer (PNIL). Inputs propagated through the PNIL are resampled from a learned Gaussian distribution. The regularization induced by the PNIL prevents the model from learning to obfuscate its gradients, a factor that hindered prior approaches from successfully applying one-step methods for adversarial training. We show that noise injection in conjunction with FGSM-based adversarial training achieves results comparable to adversarial training with PGD while being considerably faster. Moreover, we outperform PGD-based adversarial training by combining noise injection and PNIL.
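
The two ideas can be illustrated with a minimal sketch. It is not the authors' implementation: the toy logistic-regression model, the function names (`input_gradient`, `noisy_fgsm`, `pnil_forward`), the step size `alpha`, the projection back onto the `eps`-ball, the `[0, 1]` pixel range, and the choice to centre the PNIL Gaussian on the input with a per-pixel `log_sigma` parameter are all assumptions made for illustration, since the abstract does not specify them.

```python
import math
import random


def sign(v):
    """Return -1, 0 or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def input_gradient(w, b, x, y):
    """Gradient of the logistic loss log(1 + exp(-y * (w.x + b))) w.r.t. x.

    Toy stand-in for a neural network; y is a label in {-1, +1}.
    """
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    coeff = -y / (1.0 + math.exp(margin))  # d loss / d (w.x + b)
    return [coeff * wi for wi in w]


def noisy_fgsm(w, b, x, y, eps, alpha, rng):
    """One-step FGSM attack started from a uniformly perturbed input."""
    # 1) Uniform noise on the initial data point (the idea from the abstract).
    x0 = [xi + rng.uniform(-eps, eps) for xi in x]
    # 2) A single signed-gradient step taken from the noisy point.
    grad = input_gradient(w, b, x0, y)
    x1 = [x0i + alpha * sign(gi) for x0i, gi in zip(x0, grad)]
    # 3) Assumed projection: stay within eps of x and inside [0, 1].
    adv = []
    for xi, x1i in zip(x, x1):
        v = min(max(x1i, xi - eps), xi + eps)
        adv.append(min(max(v, 0.0), 1.0))
    return adv


def pnil_forward(x, log_sigma, rng):
    """Hypothetical PNIL forward pass: resample each pixel from N(x_i, sigma_i^2).

    log_sigma holds one (assumed) learnable parameter per pixel.
    """
    return [xi + math.exp(ls) * rng.gauss(0.0, 1.0)
            for xi, ls in zip(x, log_sigma)]


if __name__ == "__main__":
    rng = random.Random(0)
    w, b = [1.0, -2.0, 0.5], 0.1
    x, y, eps = [0.2, 0.5, 0.9], 1, 0.1
    x_adv = noisy_fgsm(w, b, x, y, eps, alpha=1.25 * eps, rng=rng)
    x_in = pnil_forward(x_adv, log_sigma=[-3.0] * len(x), rng=rng)
    print("adversarial input:", x_adv)
    print("after PNIL:", x_in)
```

In an adversarial training loop, each batch would be attacked with `noisy_fgsm`, passed through the noise layer, and then used for an ordinary gradient update of the model weights (and, under the assumption above, of `log_sigma`). Because the random start differs on every call, the model sees perturbations of varying effective size rather than only those on the boundary of the `eps`-ball.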
