Towards End-to-End Audio-Sheet-Music Retrieval


Dec 15, 2016

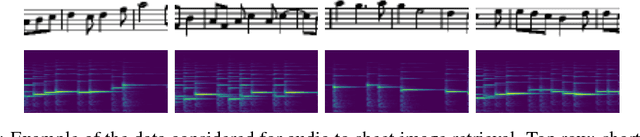

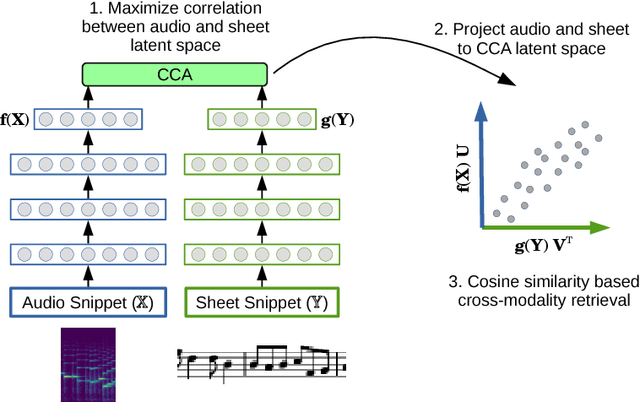

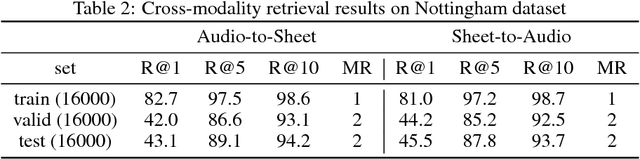

This paper demonstrates the feasibility of learning to retrieve short snippets of sheet music (images) given a short query excerpt of music (audio), and vice versa, without any symbolic representation of the music or scores. Such a capability would be highly useful in many content-based musical retrieval scenarios. Our approach is based on Deep Canonical Correlation Analysis (DCCA) and learns correlated latent spaces that allow cross-modality retrieval in both directions. Initial experiments with relatively simple monophonic music show promising results.
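The idea of retrieving across modalities through a correlated latent space can be illustrated with a small sketch. This is not the paper's implementation: it substitutes plain linear CCA for the deep networks of DCCA, and the "audio" and "sheet" feature vectors are synthetic random data generated from a shared latent factor, not spectrograms or score images. All function names (`fit_linear_cca`, `project`, `retrieve`, `demo`) and all dimensions are hypothetical choices made for illustration.

```python
import numpy as np


def fit_linear_cca(X, Y, k, reg=1e-3):
    """Fit linear CCA (a stand-in for DCCA) and return one projection per view."""
    mx, my = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - mx, Y - my
    n = X.shape[0]
    # Regularized covariance and cross-covariance estimates.
    Sxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])
    Syy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    Sxy = Xc.T @ Yc / (n - 1)

    def inv_sqrt(S):
        # Inverse matrix square root of a symmetric positive definite matrix.
        w, V = np.linalg.eigh(S)
        return V @ np.diag(w ** -0.5) @ V.T

    Sxx_is, Syy_is = inv_sqrt(Sxx), inv_sqrt(Syy)
    # The SVD of the whitened cross-covariance gives the canonical directions.
    U, _, Vt = np.linalg.svd(Sxx_is @ Sxy @ Syy_is)
    A = Sxx_is @ U[:, :k]
    B = Syy_is @ Vt.T[:, :k]
    return (mx, A), (my, B)


def project(Z, view):
    """Map features of one modality into the shared latent space."""
    mean, W = view
    return (Z - mean) @ W


def retrieve(query_emb, candidate_embs):
    """Rank candidates for each query by descending cosine similarity."""
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    c = candidate_embs / np.linalg.norm(candidate_embs, axis=1, keepdims=True)
    return np.argsort(-(q @ c.T), axis=1)


def demo(seed=0):
    """Train on synthetic pairs, then retrieve in both directions on held-out pairs."""
    rng = np.random.default_rng(seed)
    n_train, n_test, d_latent = 500, 50, 8
    # Synthetic assumption: both modalities are noisy linear views of one latent factor.
    z = rng.normal(size=(n_train + n_test, d_latent))
    audio = z @ rng.normal(size=(d_latent, 20)) + 0.05 * rng.normal(size=(n_train + n_test, 20))
    sheet = z @ rng.normal(size=(d_latent, 30)) + 0.05 * rng.normal(size=(n_train + n_test, 30))

    audio_view, sheet_view = fit_linear_cca(audio[:n_train], sheet[:n_train], k=d_latent)
    ea = project(audio[n_train:], audio_view)
    es = project(sheet[n_train:], sheet_view)

    truth = np.arange(n_test)
    # Top-1 accuracy: is the true counterpart ranked first?
    acc_audio_to_sheet = float(np.mean(retrieve(ea, es)[:, 0] == truth))
    acc_sheet_to_audio = float(np.mean(retrieve(es, ea)[:, 0] == truth))
    return acc_audio_to_sheet, acc_sheet_to_audio


if __name__ == "__main__":
    print(demo())
```

Because both modalities are projected into the same space, the same `retrieve` function serves audio-to-sheet and sheet-to-audio queries; only the roles of query and candidate set are swapped.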

* In NIPS 2016 End-to-end Learning for Speech and Audio Processing Workshop, Barcelona, Spain
