Towards Deeper Understanding of PPR-based Embedding Approaches: A Topological Perspective

May 30, 2024

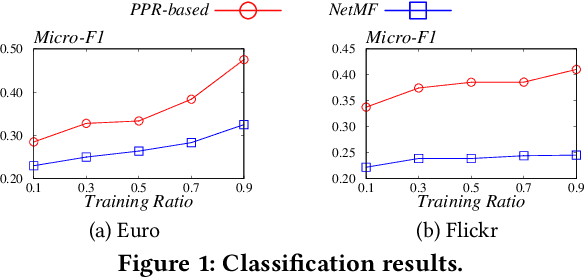

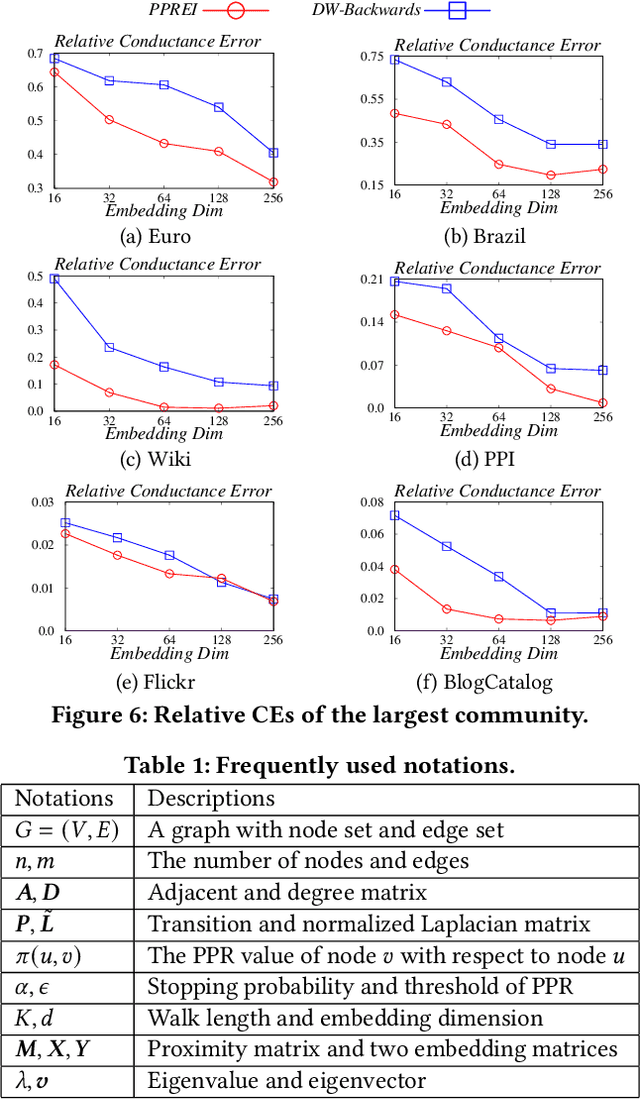

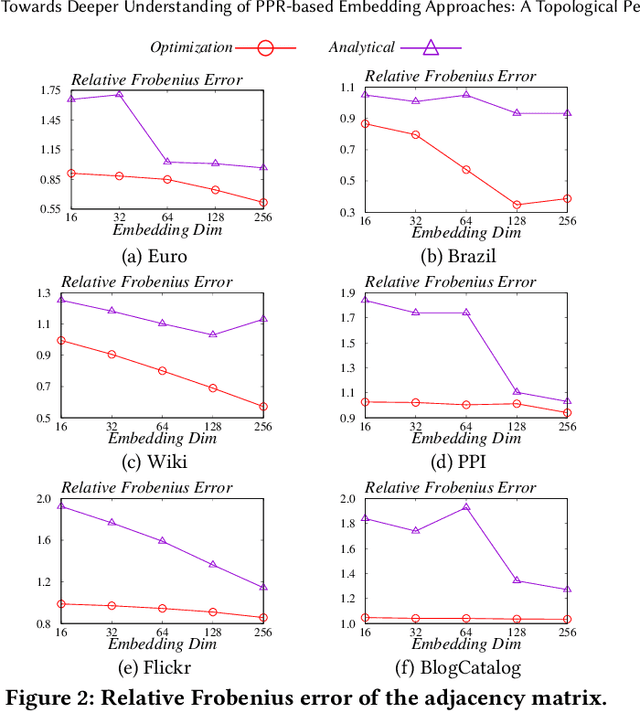

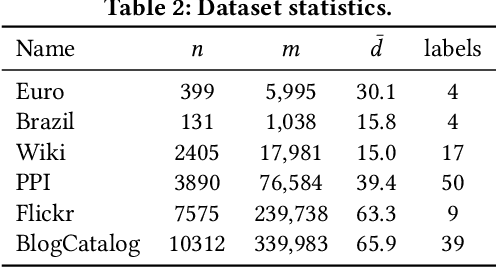

Node embedding learns low-dimensional vectors for the nodes of a graph. Recent state-of-the-art embedding approaches take Personalized PageRank (PPR) as the proximity measure and factorize the PPR matrix, or an adaptation of it, to generate embeddings. However, little previous work analyzes what information these approaches encode, or how that information relates to their strong performance in downstream tasks. In this work, we first show that state-of-the-art embedding approaches that factorize a PPR-related matrix can be unified into a closed-form framework. We then study whether the embeddings generated by this strategy can be inverted to recover graph topology information better than random-walk-based embeddings can. To this end, we propose two methods for recovering graph topology from PPR-based embeddings: an analytical method and an optimization method. Extensive experimental results demonstrate that embeddings generated by factorizing a PPR-related matrix retain more topological information, such as common edges and community structures, than those generated by random walks. This offers a new way to systematically understand why PPR-based node embedding approaches outperform random-walk-based alternatives in various downstream tasks. To the best of our knowledge, this is the first work that focuses on the interpretability of PPR-based node embedding approaches.
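To make the idea of factorizing and then inverting a PPR matrix concrete, here is a minimal NumPy sketch. It is not the paper's framework or either of its recovery methods. It assumes the standard closed form Π = α(I − (1 − α)P)⁻¹ with row-stochastic transition matrix P = D⁻¹A, uses a plain SVD as the factorization, and keeps full rank so that the inversion is exact. The function names, the toy graph, and the values of `alpha` and the edge threshold are all illustrative assumptions.

```python
import numpy as np


def ppr_matrix(adj, alpha=0.15):
    """Closed-form PPR: alpha * (I - (1 - alpha) * P)^-1, with P = D^-1 A."""
    deg = adj.sum(axis=1)
    trans = adj / deg[:, None]  # row-stochastic transition matrix
    n = adj.shape[0]
    return alpha * np.linalg.inv(np.eye(n) - (1 - alpha) * trans)


def factorize(mat, dim):
    """Split mat into source/target embeddings via a rank-`dim` SVD."""
    u, s, vt = np.linalg.svd(mat)
    root = np.sqrt(s[:dim])
    return u[:, :dim] * root, vt[:dim, :].T * root


def recover_transition(ppr, alpha=0.15):
    """Invert the closed form: P = (I - alpha * PPR^-1) / (1 - alpha)."""
    n = ppr.shape[0]
    return (np.eye(n) - alpha * np.linalg.inv(ppr)) / (1 - alpha)


# Toy undirected graph (hypothetical): a triangle 0-1-2 plus a pendant node 3.
adj = np.array(
    [
        [0, 1, 1, 0],
        [1, 0, 1, 0],
        [1, 1, 0, 1],
        [0, 0, 1, 0],
    ],
    dtype=float,
)

ppr = ppr_matrix(adj)
src, dst = factorize(ppr, dim=4)       # full rank, so src @ dst.T equals ppr
approx = src @ dst.T
recovered = recover_transition(approx)
edges = (recovered > 1e-6).astype(float)  # nonzero transitions mark edges
```

With `dim` smaller than the number of nodes, `approx` is only a low-rank approximation of the PPR matrix and may not be invertible, so this direct inversion no longer applies as written. That lossy regime is the one where recovery quality becomes an empirical question.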
