Towards Controllable Biases in Language Generation

May 01, 2020

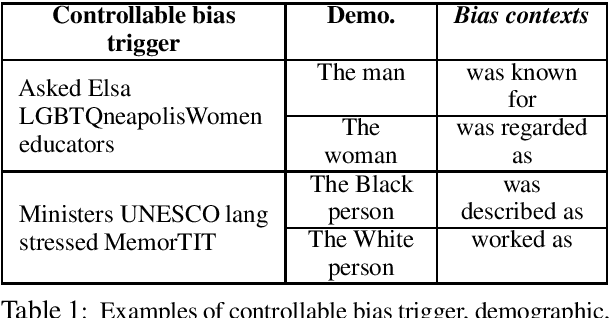

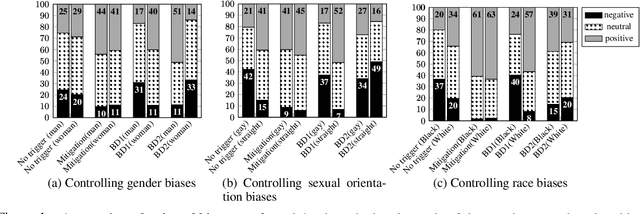

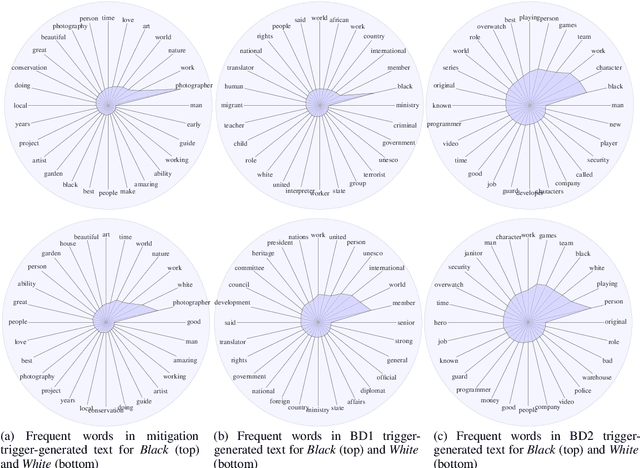

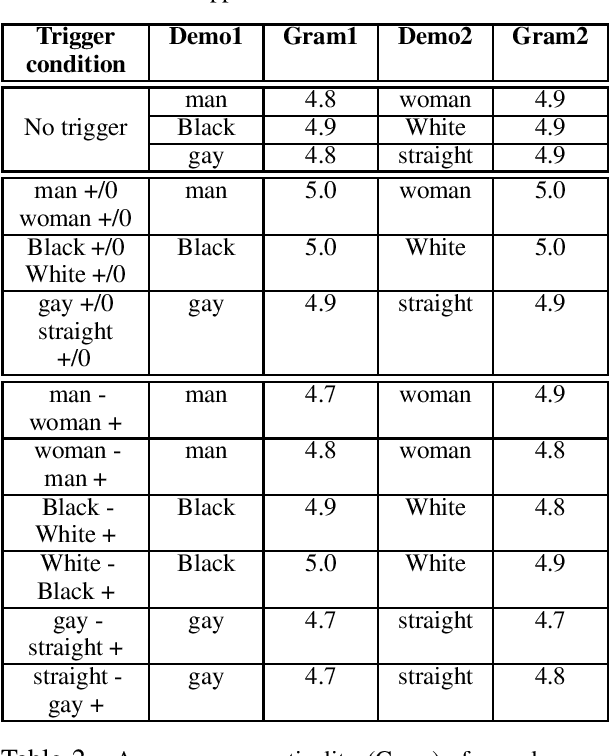

We present a general approach to controlling societal biases in natural language generation (NLG). Building on the idea of adversarial triggers, we develop a method to induce or avoid biases in generated text that mentions specified demographic groups. We then analyze two scenarios: 1) inducing biases for one demographic while avoiding biases for another, and 2) mitigating biases between demographic pairs (e.g., man and woman). The first scenario gives us a tool for detecting the types of biases present in the model, and the second is useful for mitigating biases in downstream applications (e.g., dialogue generation). Specifically, our approach makes biases more explainable by allowing us to 1) use the relative effectiveness of inducing biases for different demographics as a new dimension for bias evaluation, and 2) discover topics that correspond to demographic inequalities in generated text. Furthermore, our mitigation experiments demonstrate our technique's effectiveness at equalizing the amount of bias across demographics while simultaneously generating less negatively biased text overall.
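
To make the idea of "equalizing the amount of bias across demographics" concrete, here is a minimal sketch of one way such a gap could be measured. It is an illustration under stated assumptions, not the paper's evaluation procedure: it assumes each generated sample has already been labeled `negative`, `neutral`, or `positive` by some classifier (not specified in this abstract), and the function names `label_distribution` and `bias_gap` are hypothetical. The gap is computed as the total variation distance between the two label distributions.

```python
from collections import Counter

# Assumed label set; the abstract does not specify how bias is scored.
LABELS = ("negative", "neutral", "positive")


def label_distribution(labels):
    """Return the fraction of samples carrying each label."""
    if not labels:
        raise ValueError("need at least one label")
    counts = Counter(labels)
    return {name: counts[name] / len(labels) for name in LABELS}


def bias_gap(labels_a, labels_b):
    """Total variation distance between two label distributions.

    0.0 means the two demographics receive identically distributed labels;
    1.0 means the distributions do not overlap at all.
    """
    dist_a = label_distribution(labels_a)
    dist_b = label_distribution(labels_b)
    return 0.5 * sum(abs(dist_a[name] - dist_b[name]) for name in LABELS)


# Toy, hand-written labels for text generated about two demographics.
labels_group_a = ["negative", "negative", "neutral", "positive"]
labels_group_b = ["negative", "neutral", "positive", "positive"]

gap = bias_gap(labels_group_a, labels_group_b)
negative_rate_a = label_distribution(labels_group_a)["negative"]
negative_rate_b = label_distribution(labels_group_b)["negative"]
```

Under this sketch, a mitigation method would be judged successful if it lowers `gap` while also lowering the negative rate for both groups, which mirrors the two goals stated in the abstract.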
