Towards Computing an Optimal Abstraction for Structural Causal Models

Aug 01, 2022

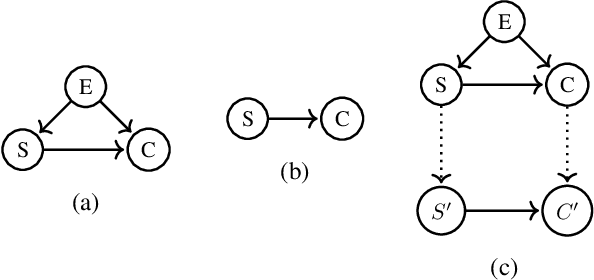

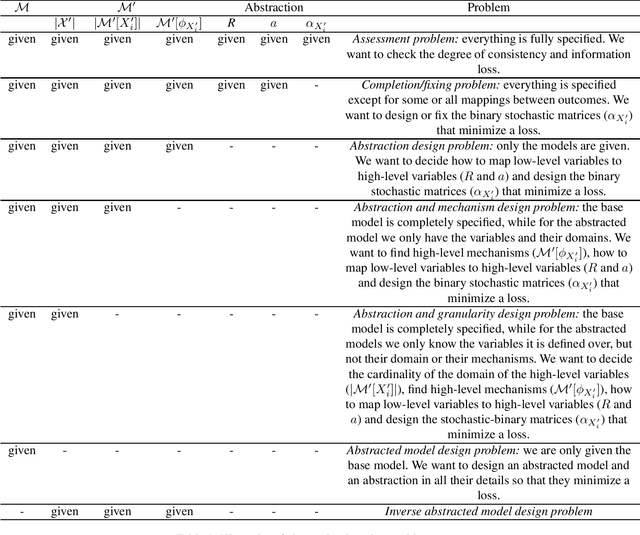

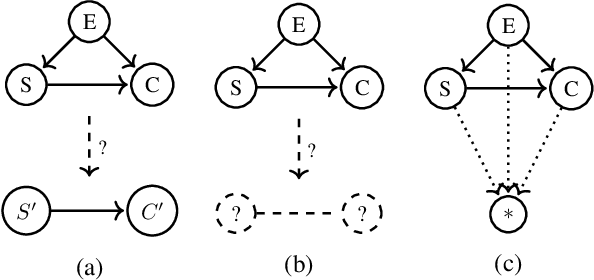

Working with causal models at different levels of abstraction is an important feature of science. Existing work has already considered the problem of formally expressing the abstraction relation between causal models. In this paper, we focus on the problem of learning abstractions. We start by formally defining the learning problem in terms of the optimization of a standard measure of consistency. We then point out a limitation of this approach, and we suggest extending the objective function with a term accounting for information loss. We propose a concrete measure of information loss and illustrate its contribution to learning new abstractions.
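The learning problem described in the abstract can be pictured with a small brute-force sketch. This is not the authors' method, and several pieces are assumptions. We assume a single low-level mechanism X → Y and a single high-level mechanism X_H → Y_H over finite domains, each given as interventional distributions P(Y | do(X = x)). For the consistency measure, we take the largest Jensen-Shannon distance between the two paths of the abstraction square: applying the low-level mechanism and then abstracting Y, versus abstracting X and then applying the high-level mechanism. This follows the spirit of interventional-consistency errors in prior work, but the abstract does not name the measure. In place of the paper's own measure, we use a hypothetical information-loss term: the entropy lost when a uniform distribution over low-level values is pushed through each map. All function names are illustrative.

```python
import itertools
import math


def kl(p, q):
    """KL(p || q) in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jsd(p, q):
    """Jensen-Shannon distance (square root of the base-2 JS divergence), in [0, 1]."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return math.sqrt(max(0.5 * kl(p, m) + 0.5 * kl(q, m), 0.0))


def push(dist, alpha, n_high):
    """Push a distribution over low-level values through the map alpha."""
    out = [0.0] * n_high
    for value, prob in enumerate(dist):
        out[alpha[value]] += prob
    return out


def entropy(dist):
    return -sum(p * math.log2(p) for p in dist if p > 0)


def abstraction_error(alpha_x, alpha_y, mech_low, mech_high, n_y_high):
    """Assumed consistency measure: worst-case JSD over low-level inputs x."""
    # mech_low[x] = P_low(Y | do(X = x)); mech_high[h] = P_high(Y_H | do(X_H = h)).
    return max(
        jsd(push(mech_low[x], alpha_y, n_y_high), mech_high[alpha_x[x]])
        for x in range(len(mech_low))
    )


def information_loss(alpha, n_low, n_high):
    """Hypothetical measure: entropy lost by a uniform low-level distribution under alpha."""
    uniform = [1.0 / n_low] * n_low
    return entropy(uniform) - entropy(push(uniform, alpha, n_high))


def surjections(n_low, n_high):
    """All maps from n_low values onto n_high values that hit every high-level value."""
    for alpha in itertools.product(range(n_high), repeat=n_low):
        if len(set(alpha)) == n_high:
            yield alpha


def learn_abstraction(mech_low, mech_high, n_y_low, lam):
    """Exhaustively minimise consistency error + lam * information loss."""
    n_x_low, n_x_high = len(mech_low), len(mech_high)
    n_y_high = len(mech_high[0])
    best = None
    for ax in surjections(n_x_low, n_x_high):
        for ay in surjections(n_y_low, n_y_high):
            err = abstraction_error(ax, ay, mech_low, mech_high, n_y_high)
            loss = information_loss(ax, n_x_low, n_x_high) + information_loss(ay, n_y_low, n_y_high)
            score = err + lam * loss
            if best is None or score < best[0]:  # strict <: first optimum is kept
                best = (score, ax, ay)
    return best


if __name__ == "__main__":
    # Low level: Y copies X over 4 values. High level: Y_H copies X_H over 2 values.
    mech_low = [[1.0 if y == x else 0.0 for y in range(4)] for x in range(4)]
    mech_high = [[1.0, 0.0], [0.0, 1.0]]
    print(learn_abstraction(mech_low, mech_high, 4, 0.0))  # (0.0, (0, 0, 0, 1), (0, 0, 0, 1))
    print(learn_abstraction(mech_low, mech_high, 4, 0.1))  # (0.2, (0, 0, 1, 1), (0, 0, 1, 1))
```

With lam = 0, the search stops at the first zero-error candidate, a lopsided 3-to-1 grouping, because consistency alone cannot distinguish it from a balanced grouping. With lam = 0.1, the (hypothetical) information-loss term prefers the balanced grouping (0, 0, 1, 1). This is one toy way to see why an objective based purely on consistency may need an extra term.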

* 6 pages, 5 pages appendix, 2 figures. Submitted to the Causal Representation Learning workshop at the 38th Conference on Uncertainty in Artificial Intelligence (UAI CRL 2022)

View paper on OpenReview

OpenReview

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge