Toward Robustness and Privacy in Federated Learning: Experimenting with Local and Central Differential Privacy

Sep 08, 2020

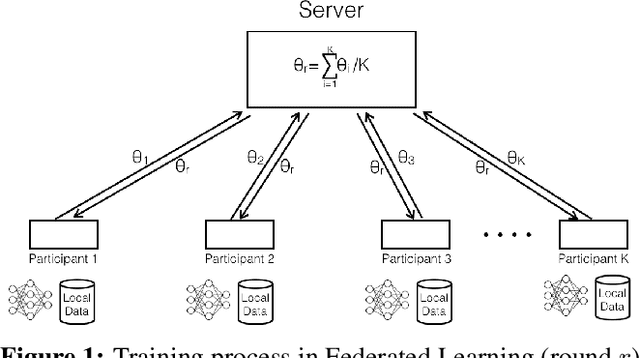

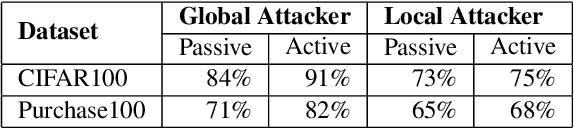

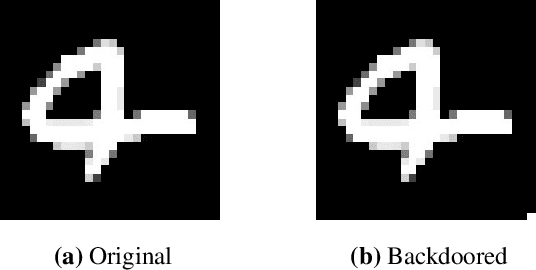

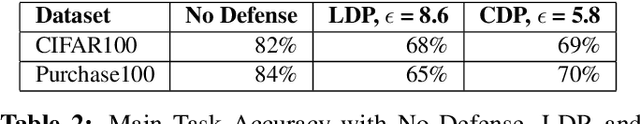

Federated Learning (FL) allows multiple participants to collaboratively train machine learning models while keeping their datasets local and exchanging model updates. Recent work has highlighted weaknesses related to robustness and privacy in FL, including backdoor, membership inference, and property inference attacks. In this paper, we investigate whether and how Differential Privacy (DP) can be used to defend against attacks targeting both robustness and privacy in FL. To this end, we present a first-of-its-kind experimental evaluation of Local and Central Differential Privacy (LDP/CDP), assessing their feasibility and effectiveness. We show that both LDP and CDP defend against backdoor attacks, with varying levels of protection and utility, and overall more effectively than non-DP defenses. They also mitigate white-box membership inference attacks, which our work is the first to show. Neither, however, defends against property inference attacks, prompting the need for further research in this space. Overall, our work also provides a reusable measurement framework to quantify the trade-offs between robustness/privacy and utility in differentially private FL.
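To make the LDP/CDP distinction concrete, the following is a minimal illustrative sketch of one aggregation round, not the paper's implementation. It assumes the common clip-then-add-Gaussian-noise recipe; the function names and the parameters `clip_norm` and `noise_multiplier` are hypothetical, and no privacy accounting (computing an actual epsilon) is performed. In the local variant each participant perturbs its own update before sending it; in the central variant a trusted server clips the updates and adds noise once to the aggregate.

```python
import math
import random


def clip(update, clip_norm):
    """Scale an update so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def add_gaussian_noise(vec, std, rng):
    """Add independent zero-mean Gaussian noise to every coordinate."""
    return [x + rng.gauss(0.0, std) for x in vec]


def ldp_round(updates, clip_norm, noise_multiplier, rng):
    """Local variant (assumed recipe): each participant clips and perturbs
    its own update, so the server only ever sees noisy updates."""
    std = noise_multiplier * clip_norm
    noisy = [add_gaussian_noise(clip(u, clip_norm), std, rng) for u in updates]
    n = len(noisy)
    return [sum(col) / n for col in zip(*noisy)]


def cdp_round(updates, clip_norm, noise_multiplier, rng):
    """Central variant (assumed recipe): a trusted server clips each update,
    sums them, adds noise once to the sum, then averages."""
    clipped = [clip(u, clip_norm) for u in updates]
    total = [sum(col) for col in zip(*clipped)]
    noisy_total = add_gaussian_noise(total, noise_multiplier * clip_norm, rng)
    n = len(updates)
    return [x / n for x in noisy_total]


if __name__ == "__main__":
    rng = random.Random(0)
    # Three hypothetical participants, each with a 2-dimensional model update.
    updates = [[3.0, 4.0], [0.1, -0.2], [0.0, 0.5]]
    print("LDP aggregate:", ldp_round(updates, 1.0, 0.5, rng))
    print("CDP aggregate:", cdp_round(updates, 1.0, 0.5, rng))
```

With the same noise multiplier, the local variant injects noise into every update while the central variant injects it once into the sum, which is one intuition for why the two settings trade utility against protection differently.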
