Threshold-Based Data Exclusion Approach for Energy-Efficient Federated Edge Learning


Mar 30, 2021

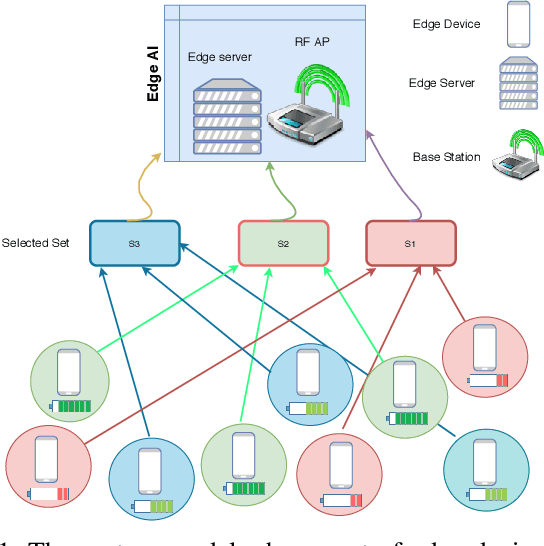

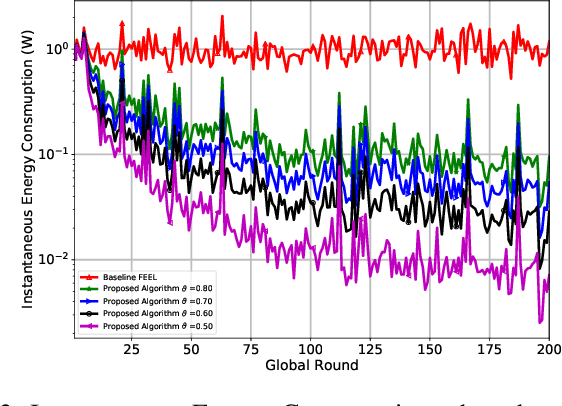

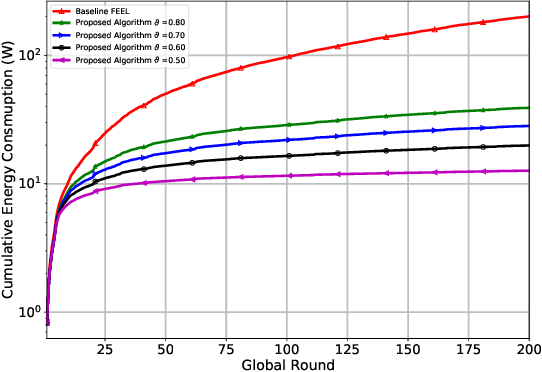

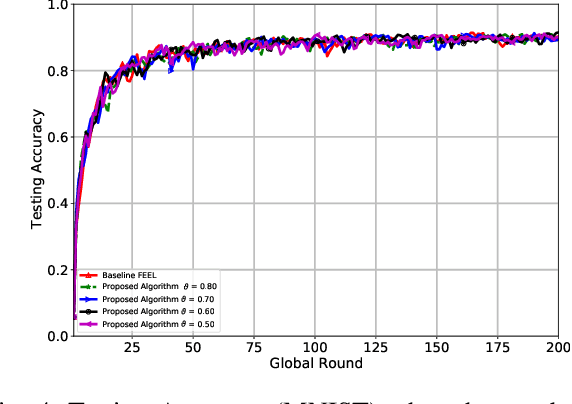

Federated edge learning (FEEL) is a promising distributed learning technique for next-generation wireless networks. FEEL preserves user privacy, reduces communication costs, and exploits the unprecedented capabilities of edge devices to train a shared global model on the massive amount of data generated at the network edge. However, FEEL can significantly shorten the lifetime of energy-constrained participating devices because of the power consumed during each model training round. To address this issue, this paper proposes a novel approach that minimizes computation and communication energy consumption during FEEL rounds. First, we introduce a modified local training algorithm that selects only the samples that enhance the model's quality, based on a predetermined threshold probability. Then, we formulate a joint energy minimization and resource allocation optimization problem to obtain the optimal local computation time and the optimal transmission time that minimize the total energy consumption, taking into account the worker's energy budget, available bandwidth, channel states, beamforming, and local CPU speed. Next, we introduce a tractable solution to the formulated problem that ensures the robustness of FEEL. Our simulation results show that our solution substantially outperforms the baseline FEEL algorithm, reducing the locally consumed energy by up to 79%.
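
The abstract does not spell out the selection rule, so the following is only a minimal sketch of what threshold-based data exclusion could look like, not the authors' algorithm. It assumes that a sample "enhances the model's quality" when the current model assigns its true class a probability below the threshold, so that already well-learned samples are skipped and no computation energy is spent on them. The function name `select_training_indices` and the toy probabilities are hypothetical.

```python
from typing import List, Sequence


def select_training_indices(
    class_probs: Sequence[Sequence[float]],
    labels: Sequence[int],
    threshold: float,
) -> List[int]:
    """Return indices of samples whose true-class probability is below threshold.

    Assumption (not from the paper): a sample is worth training on only when
    the current model is not yet confident about its correct label.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must lie in [0, 1]")
    if len(class_probs) != len(labels):
        raise ValueError("class_probs and labels must have the same length")

    kept = []
    for index, (probs, label) in enumerate(zip(class_probs, labels)):
        # Keep the sample only if the model's confidence in the true class
        # has not yet reached the threshold.
        if probs[label] < threshold:
            kept.append(index)
    return kept


# Toy data: per-sample class probabilities from a hypothetical local model.
probs = [
    [0.95, 0.05],  # true class 0, confident: excluded
    [0.40, 0.60],  # true class 0, misclassified: kept
    [0.55, 0.45],  # true class 1, misclassified: kept
    [0.10, 0.90],  # true class 1, confident: excluded
]
labels = [0, 0, 1, 1]

kept = select_training_indices(probs, labels, threshold=0.8)
print(kept)  # [1, 2]
```

Under this assumed rule, a higher threshold keeps more samples in each local round, and a lower one excludes more of them.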
