Theory and Implementation of Process and Temperature Scalable Shape-based CMOS Analog Circuits


May 11, 2022

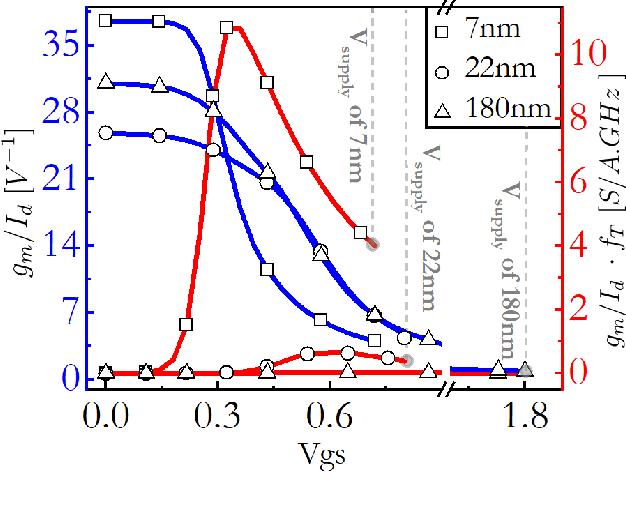

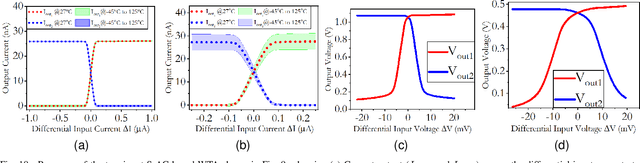

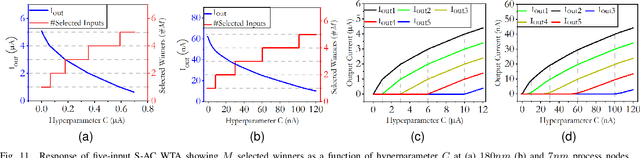

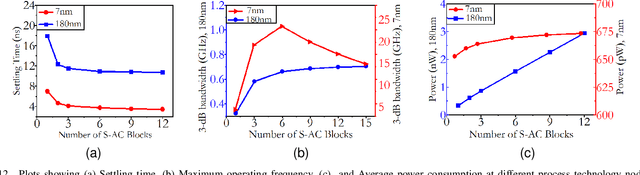

Analog computing is attractive compared to its digital counterparts due to its potential for achieving high compute density and energy efficiency. However, device-to-device variability and the challenges of porting existing designs to advanced process nodes have been a major hindrance to harnessing the full potential of analog computation for Machine Learning (ML) applications. This work proposes a novel analog computing framework for designing an analog ML processor in a manner similar to a digital design, where designs can be scaled and ported to advanced process nodes without architectural changes. At the core of our work lies shape-based analog computing (S-AC). It utilizes device primitives to yield a robust proto-function from which other non-linear shapes can be derived. The S-AC paradigm also allows the user to trade off computational precision against silicon circuit area and power. This allows users to build a truly power-efficient and scalable analog architecture in which the same synthesized analog circuit can operate across different transistor biasing regimes and simultaneously scale across process nodes. As a proof of concept, we show the implementation of commonly used mathematical functions for carrying out standard ML tasks in both planar CMOS 180nm and FinFET 7nm process nodes. The classification accuracy of the synthesized shape-based ML architecture has been demonstrated on standard data sets at different process nodes.
