Theoretical Knowledge Graph Reasoning via Ending Anchored Rules


Dec 15, 2020

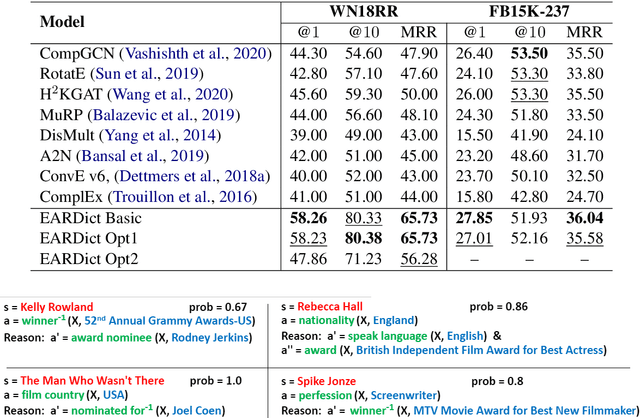

Discovering precise and specific rules from knowledge graphs is regarded as an essential challenge: such rules can improve the performance of many downstream tasks and even open new approaches to some Natural Language Processing research topics. In this paper, we provide a fundamental theory for knowledge graph reasoning based on ending anchored rules. Our theory gives precise reasons explaining why a triple is or is not correct. We then implement our theory in what we call the EARDict model. Results show that EARDict significantly outperforms all benchmark models on two large knowledge graph completion datasets, including a Hits@10 score of 96.6 percent on WN18RR.
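Results above are reported as Hits@10. As a rough illustration, Hits@k is commonly computed as the fraction of test queries whose correct entity is ranked within the top k candidates. The sketch below assumes the 1-based ranks have already been produced by some model; the function name `hits_at_k` and the example ranks are hypothetical and are not taken from the EARDict code. Whether ranks are computed in the "filtered" setting is a convention this abstract does not state.

```python
from typing import Sequence


def hits_at_k(ranks: Sequence[int], k: int = 10) -> float:
    """Return the fraction of queries whose correct entity ranks within the top k.

    `ranks` holds the 1-based rank of the correct entity for each test query
    (hypothetical input; how the ranks are produced is model-specific).
    """
    if not ranks:
        raise ValueError("ranks must be non-empty")
    if any(r < 1 for r in ranks):
        raise ValueError("ranks must be 1-based positive integers")
    # Count the queries where the correct answer appears at position k or better.
    hits = sum(1 for r in ranks if r <= k)
    return hits / len(ranks)


# Hypothetical ranks for five test queries: three of them fall within the top 10.
example_ranks = [1, 3, 12, 7, 40]
print(f"Hits@10 = {hits_at_k(example_ranks):.1%}")  # prints "Hits@10 = 60.0%"
```

Under this definition, a Hits@10 of 96.6 percent means the correct entity appears among the top 10 candidates for 96.6 percent of test queries.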

* Compared to v2, v3 raises the lower bound of the connection set to 2, which improves performance on WN18RR by about 20 percent and on FB15K-237 by about 6 percent. Readers may refer to our presentation "EARDict_refinement" posted on github.com/ucsd-cmi/eardict for a detailed comparison between v2 and v3. We have also substantially revised the wording in v3.