The Ridgelet Prior: A Covariance Function Approach to Prior Specification for Bayesian Neural Networks

Oct 16, 2020

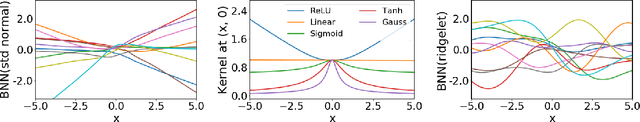

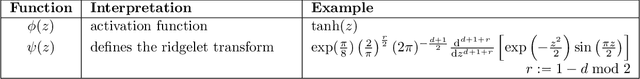

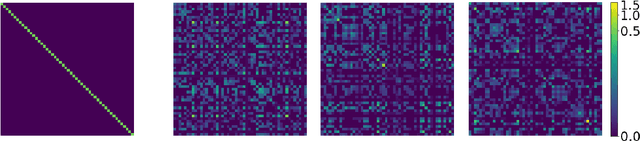

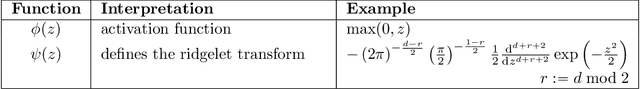

Bayesian neural networks attempt to combine the strong predictive performance of neural networks with formal quantification of the uncertainty associated with the predictive output in the Bayesian framework. However, it remains unclear how to endow the parameters of the network with a prior distribution that is meaningful when lifted into the output space of the network. We propose a possible solution that enables the user to posit an appropriate covariance function for the task at hand. Our approach constructs a prior distribution for the parameters of the network, called a ridgelet prior, that approximates the posited covariance structure in the output space of the network. The approach is rooted in the ridgelet transform, and we establish both finite-sample-size error bounds and the consistency of the approximation of the covariance function in a limit where the number of hidden units is increased. Our experimental assessment is limited to a proof-of-concept, in which we demonstrate that the ridgelet prior can outperform an unstructured prior on regression problems for which an informative covariance function can be provided a priori.
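To make the phrase "lifted into the output space" concrete, the following is a minimal sketch, not the ridgelet prior construction itself. It assumes a one-hidden-layer tanh network on scalar inputs with an unstructured i.i.d. standard Gaussian prior on every parameter, and estimates by Monte Carlo the covariance that this prior induces on the network outputs. The function names, the network width, the 1/sqrt(width) output scaling, and the squared-exponential target are all illustrative assumptions that do not come from the paper.

```python
import numpy as np

def sample_prior_outputs(x, n_hidden=200, n_draws=2000, seed=0):
    """Draw outputs f(x) of a one-hidden-layer tanh network whose
    parameters all follow an i.i.d. standard Gaussian prior (assumed setup)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float).reshape(-1, 1)       # (n_points, 1)
    w0 = rng.standard_normal((n_draws, 1, n_hidden))    # input-to-hidden weights
    b0 = rng.standard_normal((n_draws, 1, n_hidden))    # hidden biases
    w1 = rng.standard_normal((n_draws, n_hidden, 1))    # hidden-to-output weights
    hidden = np.tanh(x[None, :, :] * w0 + b0)           # (n_draws, n_points, n_hidden)
    # Scaling by 1/sqrt(n_hidden) keeps the output variance bounded as width grows.
    return (hidden @ w1)[..., 0] / np.sqrt(n_hidden)    # (n_draws, n_points)

def induced_covariance(outputs):
    """Empirical covariance of the outputs across prior draws."""
    return np.cov(outputs, rowvar=False)                # (n_points, n_points)

def squared_exponential(x, lengthscale=1.0):
    """An example covariance function a user might posit (an assumption here)."""
    x = np.asarray(x, dtype=float)
    diff = x[:, None] - x[None, :]
    return np.exp(-0.5 * (diff / lengthscale) ** 2)

if __name__ == "__main__":
    x = np.linspace(-2.0, 2.0, 5)
    induced = induced_covariance(sample_prior_outputs(x))
    target = squared_exponential(x)
    # The gap between the two matrices is what a structured prior aims to shrink.
    print("max abs difference:", np.max(np.abs(induced - target)))
```

Under these assumptions, nothing ties the induced covariance to the posited one. The abstract describes the ridgelet prior as a parameter prior designed so that this induced covariance approximates the posited covariance function, with the approximation improving as the number of hidden units increases.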
