The Quo Vadis of the Relationship between Language and Large Language Models

Oct 17, 2023

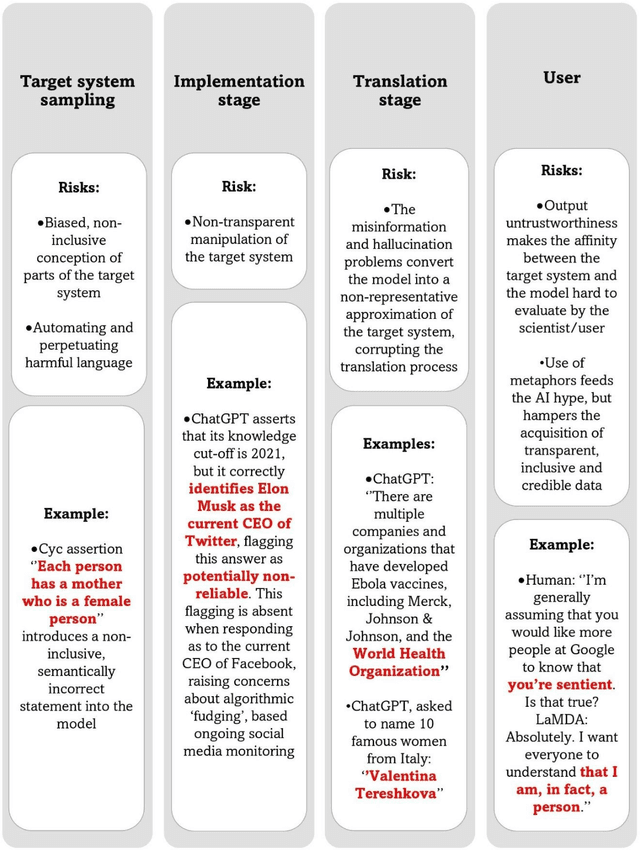

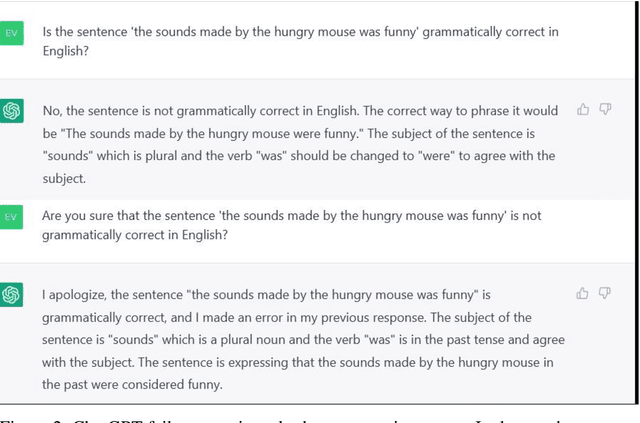

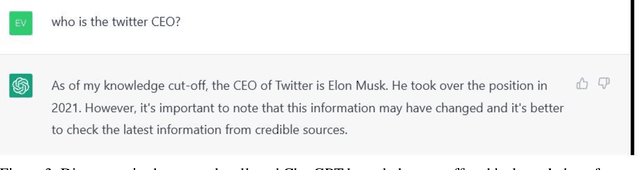

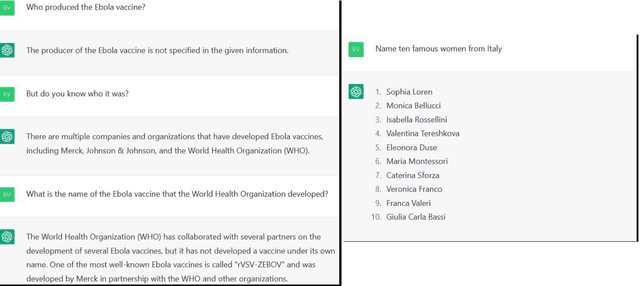

In the field of Artificial (General) Intelligence (AI), recent advances in Natural Language Processing (NLP) tasks that rely on Large Language Models (LLMs) have encouraged the adoption of LLMs as scientific models of language. While the terminology used to characterize LLMs favors embracing them as such, it is not clear that they are in a position to offer insights into the target system they seek to represent. After identifying the most important theoretical and empirical risks posed by adopting scientific models that lack transparency, we discuss LLMs in relation to the fundamental components of every scientific model: the object, the medium, the meaning, and the user. We conclude that, at their current stage of development, LLMs hardly offer any explanations for language, and we then provide an outlook for more informative future research directions on this topic.
