The Impact of Differential Privacy on Recommendation Accuracy and Popularity Bias

Jan 15, 2024

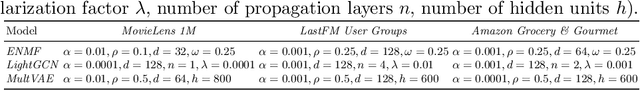

Collaborative filtering-based recommender systems leverage vast amounts of behavioral user data, which poses severe privacy risks. Random noise is therefore often added to the data to ensure Differential Privacy (DP). To date, however, it is not well understood how this noise affects personalized recommendations. In this work, we study how DP impacts recommendation accuracy and popularity bias when it is applied to the training data of state-of-the-art recommendation models. Our findings are threefold. First, nearly all users' recommendations change when DP is applied. Second, recommendation accuracy drops substantially while the popularity of recommended items increases sharply, suggesting that popularity bias worsens. Third, DP exacerbates popularity bias more severely for users who prefer unpopular items than for users who prefer popular items.
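To make the idea of adding random noise to training data concrete, here is a minimal sketch in Python. It assumes binary implicit feedback (1 = the user interacted with the item) and uses randomized response, a standard ε-DP mechanism for single bits. The abstract does not say which mechanism the authors use, so that choice, the function names, and the toy interaction matrix are illustrative assumptions, not the paper's method.

```python
import math
import random


def randomized_response(bit, epsilon, rng):
    """Keep the true bit with probability e^eps / (1 + e^eps), else flip it.

    Assumption: randomized response stands in for the DP mechanism;
    the abstract does not name the one actually used.
    """
    p_keep = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return bit if rng.random() < p_keep else 1 - bit


def privatize(matrix, epsilon, seed=0):
    """Apply randomized response independently to every user-item entry."""
    rng = random.Random(seed)
    return [[randomized_response(b, epsilon, rng) for b in row] for row in matrix]


def item_popularity(matrix):
    """Fraction of users who interacted with each item."""
    n_users = len(matrix)
    n_items = len(matrix[0])
    return [sum(row[j] for row in matrix) / n_users for j in range(n_items)]


def flipped_fraction(original, noisy):
    """Share of user-item entries that differ after noise was added."""
    total = sum(len(row) for row in original)
    diff = sum(
        a != b
        for row_o, row_n in zip(original, noisy)
        for a, b in zip(row_o, row_n)
    )
    return diff / total


if __name__ == "__main__":
    # Hypothetical toy data: 4 users x 3 items; item 0 is the most popular.
    interactions = [
        [1, 0, 0],
        [1, 1, 0],
        [1, 0, 0],
        [1, 0, 1],
    ]
    for eps in (0.1, 1.0, 5.0):
        noisy = privatize(interactions, eps, seed=42)
        print(eps, flipped_fraction(interactions, noisy), item_popularity(noisy))
```

Smaller values of ε flip more entries, so the training data a model sees departs further from users' true preferences. Measuring the downstream effect on accuracy and popularity bias would additionally require training a recommendation model on the noisy matrix, which this sketch leaves out.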
