The Curse of Zero Task Diversity: On the Failure of Transfer Learning to Outperform MAML and their Empirical Equivalence


Dec 24, 2021

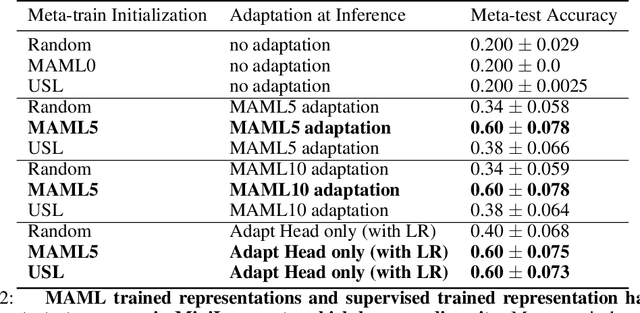

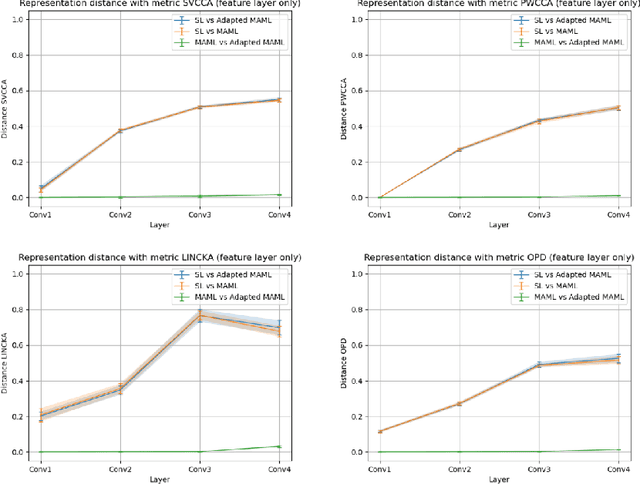

It has recently been observed that a transfer learning solution might be all we need to solve many few-shot learning benchmarks. This raises important questions about when and how meta-learning algorithms should be deployed. In this paper, we take a first step toward clarifying these questions by formulating a computable metric for a few-shot learning benchmark that we hypothesize is predictive of whether meta-learning solutions will succeed. We name this metric the diversity coefficient of a few-shot learning benchmark. Using the diversity coefficient, we show that the MiniImagenet benchmark has zero diversity according to twenty-four different ways of computing the diversity. We then show that, in a fair comparison between MAML-learned solutions and transfer learning, both have identical meta-test accuracy. This suggests that transfer learning fails to outperform MAML, contrary to what previous work suggests. Together, these two facts provide the first test of whether diversity correlates with meta-learning success, and show that a diversity coefficient of zero correlates with a high similarity between transfer learning and MAML-learned solutions, especially at meta-test time. We therefore conjecture that meta-learned solutions have the same meta-test performance as transfer learning when the diversity coefficient is zero.
