The complexity of quantum support vector machines

Paper and Code

Feb 28, 2022

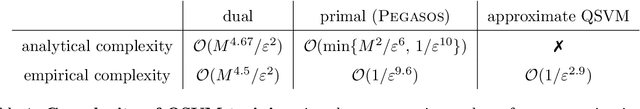

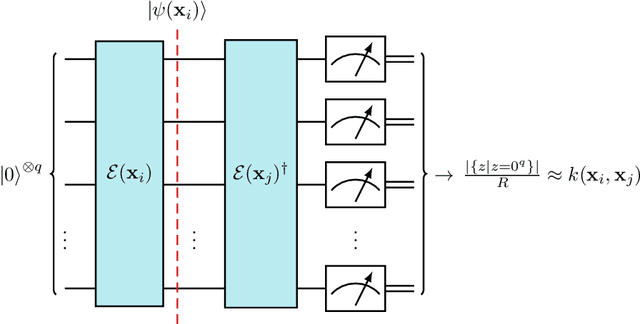

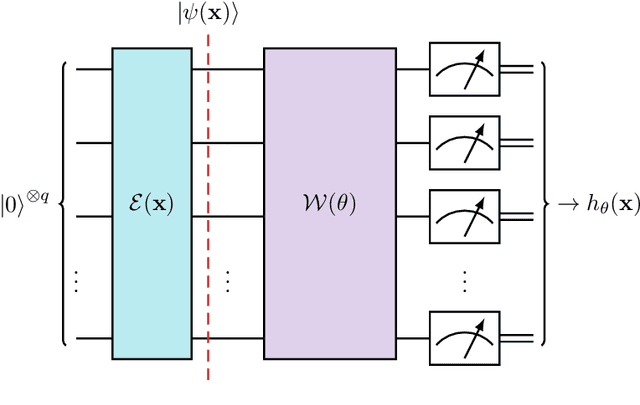

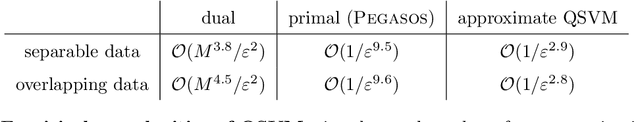

Quantum support vector machines employ quantum circuits to define the kernel function. It has been shown that this approach offers a provable exponential speedup compared to any known classical algorithm for certain data sets. The training of such models corresponds to solving a convex optimization problem either via its primal or dual formulation. Due to the probabilistic nature of quantum mechanics, the training algorithms are affected by statistical uncertainty, which has a major impact on their complexity. We show that the dual problem can be solved in $\mathcal{O}(M^{4.67}/\varepsilon^2)$ quantum circuit evaluations, where $M$ denotes the size of the data set and $\varepsilon$ the solution accuracy. We prove under an empirically motivated assumption that the kernelized primal problem can alternatively be solved in $\mathcal{O}(\min \{ M^2/\varepsilon^6, \, 1/\varepsilon^{10} \})$ evaluations by employing a generalization of a known classical algorithm called Pegasos. Accompanying empirical results demonstrate these analytical complexities to be essentially tight. In addition, we investigate a variational approximation to quantum support vector machines and show that their heuristic training achieves considerably better scaling in our experiments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge