Teacher-Students Knowledge Distillation for Siamese Trackers

Paper and Code

Jul 24, 2019

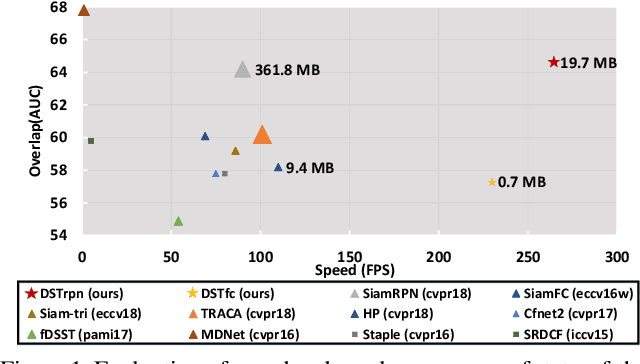

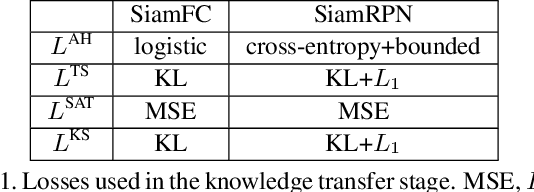

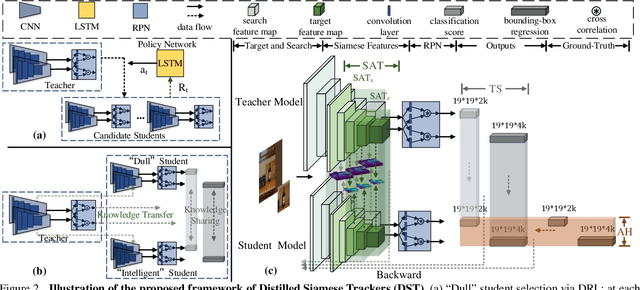

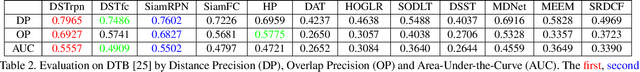

With the development of Siamese-network-based trackers, a variety of techniques have been fused into this framework for real-time object tracking. However, Siamese trackers face a tension between their high memory cost and the strict memory budgets of practical applications. In this paper, we propose a novel distilled Siamese tracker framework for learning small, fast, yet accurate trackers (students) that capture critical knowledge from large Siamese trackers (teachers) through a teacher-students knowledge distillation model. This model is inspired by the one-teacher, multiple-students learning mechanism, which is the most common teaching arrangement in schools. In particular, it contains a single teacher-student distillation model and a student-student knowledge sharing mechanism. The former uses a tracking-specific distillation strategy to transfer knowledge from the teacher to the students. The latter applies mutual learning between students to enable a more in-depth understanding of that knowledge. Moreover, to demonstrate the generality and effectiveness of the framework, we conduct theoretical analysis and extensive empirical evaluations of two Siamese trackers on several popular tracking benchmarks. The results show that the distilled trackers achieve compression rates of 13$\times$--18$\times$ while maintaining the same or even slightly improved tracking accuracy.
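The two components named in the abstract, teacher-to-student distillation and student-to-student knowledge sharing, can be illustrated with a minimal sketch. The abstract does not specify the loss functions, so everything below is an assumption: the sketch uses a generic KL divergence between temperature-softened score distributions, not the paper's tracking-specific strategy, and the names `student_losses`, `temperature`, and `share_weight` are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to a probability distribution, softened by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def student_losses(teacher_logits, student_a_logits, student_b_logits,
                   temperature=2.0, share_weight=0.5):
    """Return one loss per student (assumed form, not the paper's).

    Each loss has two terms:
      1. teacher -> student distillation: KL(teacher || student)
      2. student <-> student sharing:     KL(peer || student), scaled by share_weight
    """
    teacher = softmax(teacher_logits, temperature)
    student_a = softmax(student_a_logits, temperature)
    student_b = softmax(student_b_logits, temperature)

    loss_a = kl_divergence(teacher, student_a) + share_weight * kl_divergence(student_b, student_a)
    loss_b = kl_divergence(teacher, student_b) + share_weight * kl_divergence(student_a, student_b)
    return loss_a, loss_b


# Hypothetical response scores over three candidate locations.
loss_a, loss_b = student_losses([2.0, 0.5, -1.0], [1.5, 0.7, -0.5], [0.2, 0.1, 0.0])
print(loss_a, loss_b)
```

In this sketch each student is pulled toward the teacher's output and, more weakly, toward its peer's output. When all three models produce identical scores, both losses are zero.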
