TCT: A Cross-supervised Learning Method for Multimodal Sequence Representation

Paper and Code

Oct 23, 2019

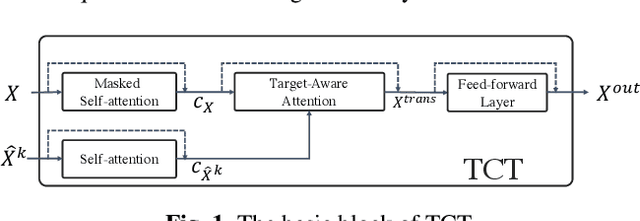

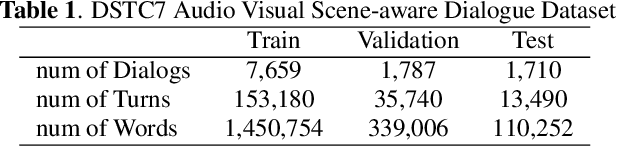

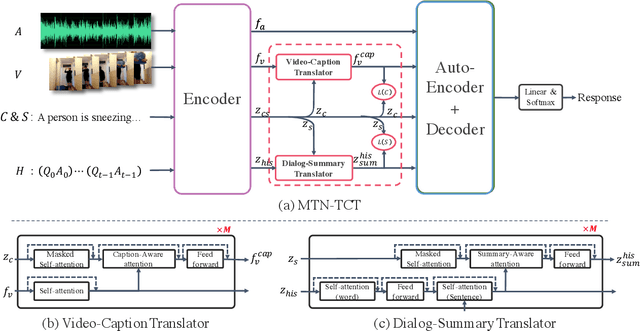

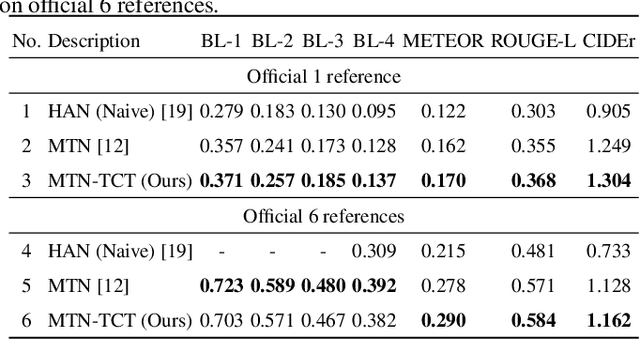

Multimodal inputs yield better performance than a single modality in most tasks. However, efficiently learning the semantics of multimodal representations is extremely challenging. To tackle this, we propose the Transformer-based Cross-modal Translator (TCT), which learns unimodal sequence representations by translating from other related multimodal sequences in a supervised manner. Combining TCT with the Multimodal Transformer Network (MTN), we evaluate MTN-TCT on video-grounded dialogue, a task that relies on multiple modalities. The proposed method achieves new state-of-the-art performance on video-grounded dialogue, which indicates that the representations learned by TCT are semantically richer than those obtained by using each modality directly.

* submitted to ICASSP 2020
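
The abstract does not spell out the TCT architecture, so the following is only a rough illustration of the general idea of supervising one modality's sequence by translating from another. It is a minimal sketch, assuming single-head scaled dot-product cross-attention with no learned projections and a mean-squared-error translation loss. The function names (`cross_attention`, `translation_loss`) and the toy `video` and `text` features are hypothetical and are not taken from the paper or its code.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross_attention(queries, source):
    """Each query vector attends over the source sequence.

    Assumption: keys and values are the raw source vectors; a real
    Transformer layer would apply learned projections first.
    """
    dim = len(source[0])
    scale = math.sqrt(dim)
    output = []
    for q in queries:
        weights = softmax([dot(q, s) / scale for s in source])
        mixed = [sum(w * s[i] for w, s in zip(weights, source)) for i in range(dim)]
        output.append(mixed)
    return output


def translation_loss(predicted, target):
    """Mean squared error between the translated and the target sequence."""
    count = sum(len(row) for row in target)
    squared = sum(
        (p - t) ** 2
        for p_row, t_row in zip(predicted, target)
        for p, t in zip(p_row, t_row)
    )
    return squared / count


# Toy features (hypothetical): 3 video frames and 2 text tokens, dimension 2.
video = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text = [[0.5, 0.5], [1.0, 0.0]]

# "Translate" video into the text sequence's positions, then score the
# result against the text features as the supervision signal.
translated = cross_attention(text, video)
loss = translation_loss(translated, text)
print(len(translated), len(translated[0]), loss >= 0.0)
```

In a trained model this loss would be minimized over learned parameters; here nothing is learned, and the sketch only shows how one modality can serve as the supervision target for a sequence built from another.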
