Target Consistency for Domain Adaptation: when Robustness meets Transferability

Jun 30, 2020

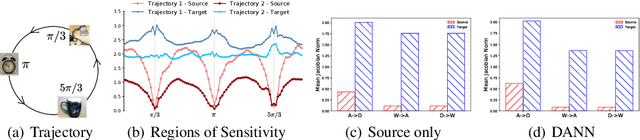

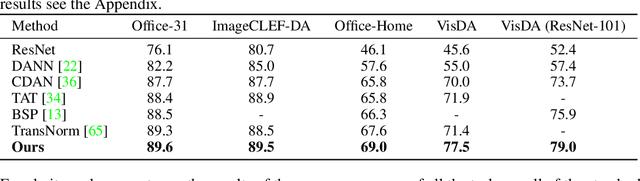

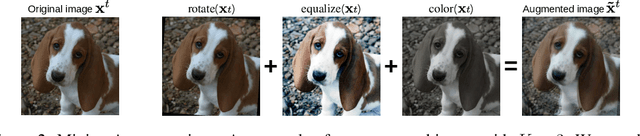

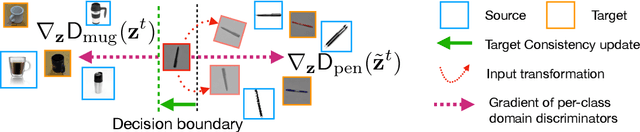

Learning invariant representations has been successfully applied to reconcile a source and a target domain in Unsupervised Domain Adaptation. By investigating the robustness of such methods through the lens of the cluster assumption, we bring new evidence that invariance combined with a low source risk does not guarantee a well-performing target classifier. More precisely, we show that the cluster assumption is violated in the target domain despite being maintained in the source domain, which indicates a lack of robustness of the target classifier. To address this problem, we demonstrate the importance of enforcing the cluster assumption in the target domain, an approach we name Target Consistency (TC), especially when paired with Class-Level InVariance (CLIV). Our approach yields a significant improvement over state-of-the-art methods based on invariant representations, on both image classification and segmentation benchmarks. Importantly, our method is flexible and easy to implement, which makes it complementary to existing approaches for improving the transferability of representations.
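To make the idea of enforcing the cluster assumption on target data more concrete, here is a minimal sketch of a generic consistency penalty. It is not the paper's implementation. The linear classifier, the Gaussian input noise, the KL divergence as the discrepancy measure, and every name (`target_consistency_loss`, `noise_scale`, and so on) are assumptions chosen for illustration; the abstract does not specify which perturbations or divergence the method uses.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two strictly positive probability vectors."""
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q))


def linear_classifier(weights, x):
    """Hypothetical stand-in model: one weight row per class, no bias."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def target_consistency_loss(weights, target_batch, noise_scale=0.1, rng=None):
    """Average KL between predictions on clean and perturbed target inputs.

    Assumption: perturbations are small Gaussian input noise. A low value
    means the decision boundary does not pass close to the target samples,
    which is one way to express the cluster assumption.
    """
    rng = rng or random.Random(0)
    total = 0.0
    for x in target_batch:
        x_pert = [xi + rng.gauss(0.0, noise_scale) for xi in x]
        p_clean = softmax(linear_classifier(weights, x))
        p_pert = softmax(linear_classifier(weights, x_pert))
        total += kl_divergence(p_clean, p_pert)
    return total / len(target_batch)


if __name__ == "__main__":
    W = [[1.0, -1.0], [-1.0, 1.0]]          # toy 2-class model
    unlabeled_target = [[0.5, 0.2], [-0.3, 0.8]]
    print(target_consistency_loss(W, unlabeled_target, noise_scale=0.5))
```

In a training loop, a term like this would typically be added, with a weighting coefficient, to the source classification loss and the invariance loss. Because it needs no target labels, it fits the unsupervised setting described above.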
