Tackling Dynamics in Federated Incremental Learning with Variational Embedding Rehearsal

Oct 19, 2021

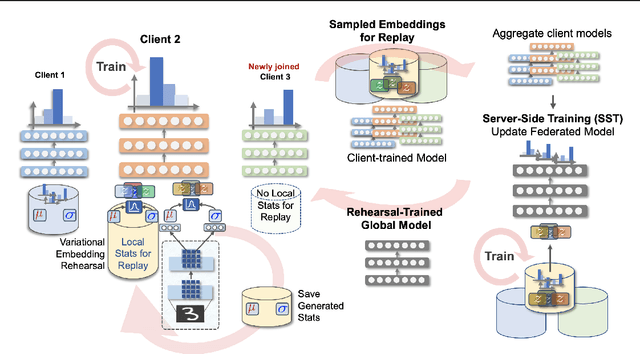

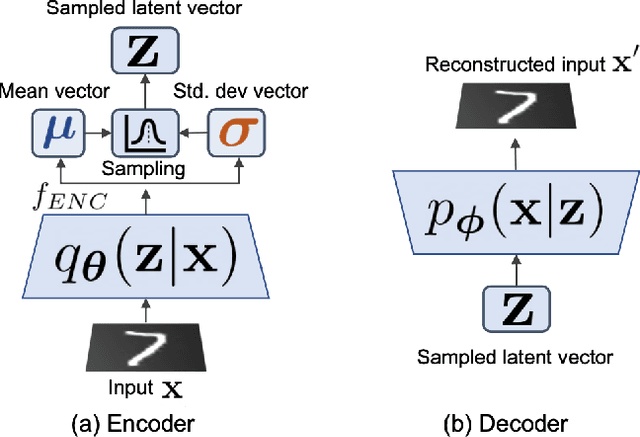

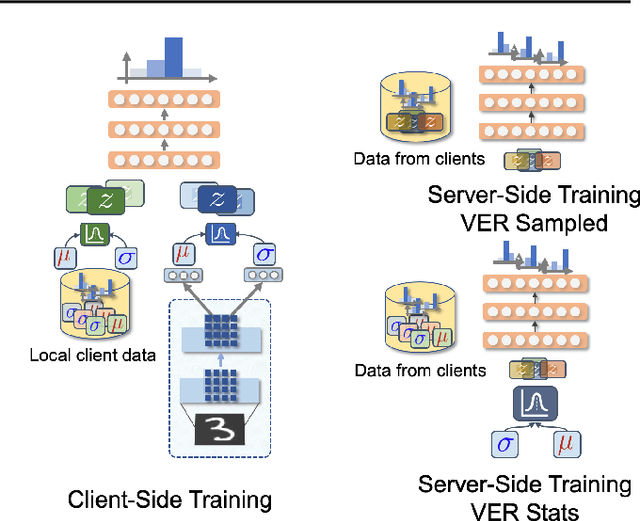

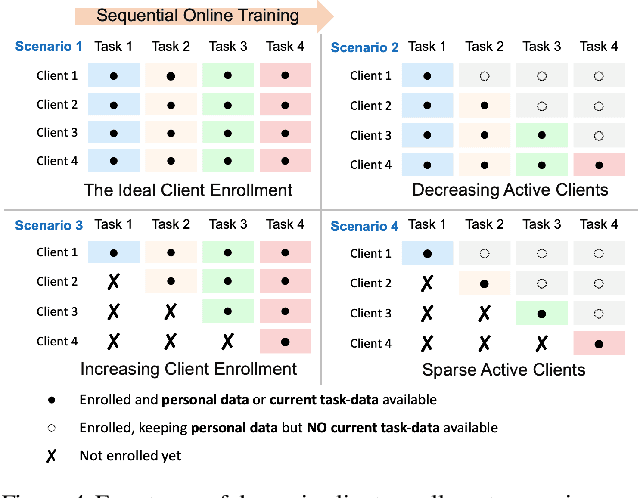

Federated Learning (FL) is a fast-growing area of ML in which training datasets are highly distributed and change dynamically over time. Models must be trained on clients' devices with no guarantee of either homogeneity or stationarity of the local private data. The need for continual training has also grown, driven by the ever-increasing production of in-task data. Pursuing both directions at once is challenging, however, because client data privacy is a major constraint, especially for rehearsal methods. Herein, we propose a novel algorithm that addresses incremental learning in an FL setting, based on realistic enrollment scenarios in which clients can drop in or out dynamically. First, we propose using deep Variational Embeddings that secure the privacy of the client data. Second, we propose a server-side training method that enables a model to rehearse previously learnt knowledge. Finally, we investigate the performance of federated incremental learning in dynamic client enrollment scenarios. The proposed method shows parity with offline training on domain-incremental learning, addressing challenges in both the dynamic enrollment of clients and the domain shift of client data.
