Synaptic Modulation using Interspike Intervals Increases Energy Efficiency of Spiking Neural Networks

Aug 06, 2024

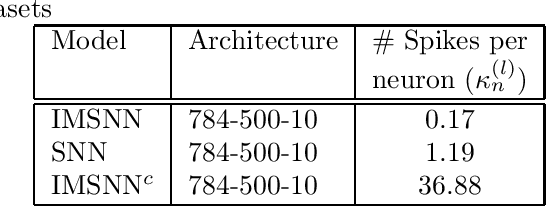

Despite fundamental differences between Spiking Neural Networks (SNNs) and Artificial Neural Networks (ANNs), most research on SNNs involves adapting ANN-based methods to SNNs. Pruning (dropping connections) and quantization (reducing precision) are often used to improve the energy efficiency of SNNs. These methods are very effective for ANNs, whose energy needs are determined by the signals transmitted over synapses. However, the event-driven paradigm of SNNs means that energy is consumed by spikes. In this paper, we propose a new synapse model whose weights are modulated by Interspike Intervals (ISIs), i.e., the time difference between two consecutive spikes. SNNs composed of this synapse model, termed ISI Modulated SNNs (IMSNNs), can use gradient descent to estimate how the ISI of a neuron changes after its synaptic parameters are updated. A higher ISI implies fewer spikes, and vice versa. The learning algorithm for IMSNNs exploits this information to selectively propagate gradients so that learning is achieved by increasing the ISIs, resulting in a network that generates fewer spikes. The performance of IMSNNs with dense and convolutional layers has been evaluated in terms of classification accuracy and number of spikes on the MNIST and FashionMNIST datasets. A comparison with conventional SNNs shows that IMSNNs achieve up to a 90% reduction in the number of spikes while maintaining similar classification accuracy.
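The link the abstract relies on, that a longer ISI means fewer spikes, can be illustrated with a standard leaky integrate-and-fire (LIF) neuron. The sketch below is not the IMSNN synapse model or learning rule, which the abstract does not specify. It assumes a generic LIF neuron driven by a constant input, illustrative parameters (a 20 ms membrane time constant, unit threshold, reset to 0), and a hypothetical single synaptic weight `w`; the function names are likewise hypothetical. It computes ISIs from spike times and compares the simulated ISI with the closed-form value.

```python
import math


def interspike_intervals(spike_times):
    """Return the ISIs, i.e. the time differences between consecutive spikes."""
    times = sorted(spike_times)
    return [later - earlier for earlier, later in zip(times, times[1:])]


def lif_spike_times(current, tau=20.0, v_th=1.0, r=1.0, dt=0.1, t_max=200.0):
    """Simulate a generic leaky integrate-and-fire neuron (forward Euler)
    driven by a constant input current and return its spike times.

    Assumed dynamics: tau * dv/dt = -v + r * current, with the membrane
    potential reset to 0 whenever it reaches the threshold v_th.
    All parameter values are illustrative assumptions.
    """
    v = 0.0
    spikes = []
    for k in range(1, int(round(t_max / dt)) + 1):
        v += (dt / tau) * (-v + r * current)
        if v >= v_th:
            spikes.append(k * dt)
            v = 0.0  # reset after a spike
    return spikes


def lif_isi_analytic(current, tau=20.0, v_th=1.0, r=1.0):
    """Closed-form ISI of the same neuron (reset to 0, constant input).

    Solving v(t) = r*current * (1 - exp(-t/tau)) = v_th for t gives
    tau * ln(drive / (drive - v_th)). Returns math.inf when the
    steady-state potential never exceeds the threshold (silent neuron).
    """
    drive = r * current
    if drive <= v_th:
        return math.inf
    return tau * math.log(drive / (drive - v_th))


if __name__ == "__main__":
    # Hypothetical setting: one synapse of weight w scales a unit input.
    for w in (1.5, 2.0, 3.0):
        spikes = lif_spike_times(current=w)
        isis = interspike_intervals(spikes)
        mean_isi = sum(isis) / len(isis)
        print(f"w={w}: {len(spikes)} spikes, mean ISI {mean_isi:.2f} ms "
              f"(analytic {lif_isi_analytic(w):.2f} ms)")
```

In this toy setting a smaller weight lengthens the ISI and lowers the spike count in the fixed 200 ms window, which is the direction the abstract describes IMSNN learning as pushing toward. How IMSNNs actually parameterize the ISI dependence of their synapses is not stated here.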