Surrogate modeling for Bayesian optimization beyond a single Gaussian process

Paper and Code

May 27, 2022

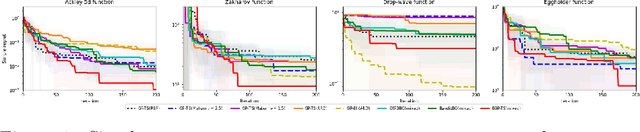

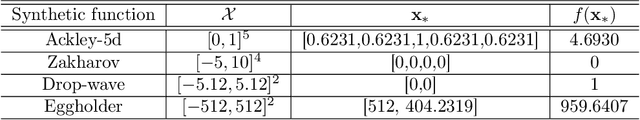

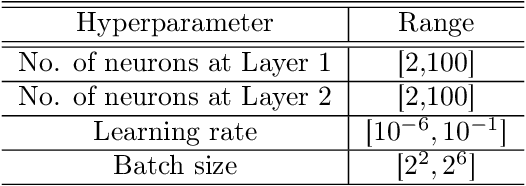

Bayesian optimization (BO) has well-documented merits for optimizing black-box functions with an expensive evaluation cost. Such functions emerge in applications as diverse as hyperparameter tuning, drug discovery, and robotics. BO hinges on a Bayesian surrogate model to sequentially select query points so as to balance exploration with exploitation of the search space. Most existing works rely on a single Gaussian process (GP) based surrogate model, where the kernel function form is typically preselected using domain knowledge. To bypass such a design process, this paper leverages an ensemble (E) of GPs to adaptively select the surrogate model fit on-the-fly, yielding a GP mixture posterior with enhanced expressiveness for the sought function. Acquisition of the next evaluation input using this EGP-based function posterior is then enabled by Thompson sampling (TS) that requires no additional design parameters. To endow function sampling with scalability, random feature-based kernel approximation is leveraged per GP model. The novel EGP-TS readily accommodates parallel operation. To further establish convergence of the proposed EGP-TS to the global optimum, analysis is conducted based on the notion of Bayesian regret for both sequential and parallel settings. Tests on synthetic functions and real-world applications showcase the merits of the proposed method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge