Surrogate-Assisted Genetic Algorithm for Wrapper Feature Selection

Paper and Code

Nov 17, 2021

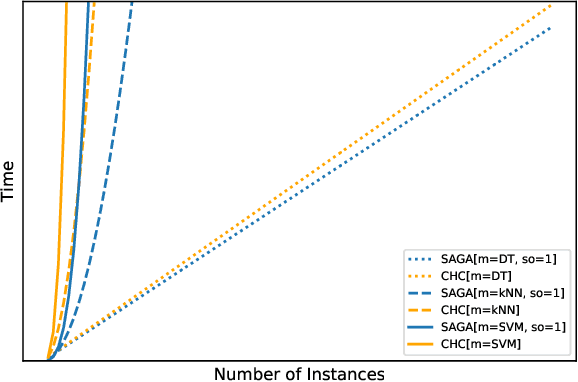

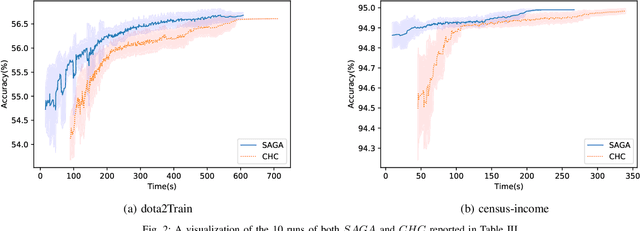

Feature selection is an intractable problem, therefore practical algorithms often trade off the solution accuracy against the computation time. In this paper, we propose a novel multi-stage feature selection framework utilizing multiple levels of approximations, or surrogates. Such a framework allows for using wrapper approaches in a much more computationally efficient way, significantly increasing the quality of feature selection solutions achievable, especially on large datasets. We design and evaluate a Surrogate-Assisted Genetic Algorithm (SAGA) which utilizes this concept to guide the evolutionary search during the early phase of exploration. SAGA only switches to evaluating the original function at the final exploitation phase. We prove that the run-time upper bound of SAGA surrogate-assisted stage is at worse equal to the wrapper GA, and it scales better for induction algorithms of high order of complexity in number of instances. We demonstrate, using 14 datasets from the UCI ML repository, that in practice SAGA significantly reduces the computation time compared to a baseline wrapper Genetic Algorithm (GA), while converging to solutions of significantly higher accuracy. Our experiments show that SAGA can arrive at near-optimal solutions three times faster than a wrapper GA, on average. We also showcase the importance of evolution control approach designed to prevent surrogates from misleading the evolutionary search towards false optima.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge