Supervised classification via minimax probabilistic transformations

Paper and Code

Apr 16, 2019

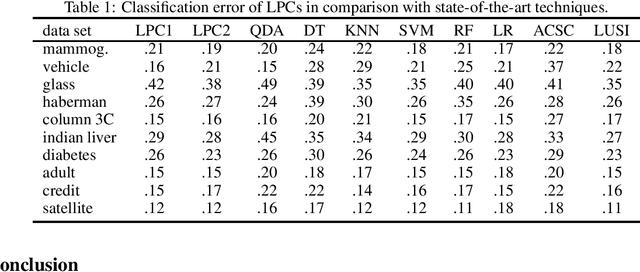

Current learning techniques for supervised classification consider classification rules in specific families and quantify performance empirically using test data. The selection among families of classification rules, techniques, parameters, etc. is mostly guided by an experimentation stage in which the performance of each option is estimated by computing its empirical risk over a set of test examples. This empirically based system design is inefficient and not robust, since it requires testing a possibly large pool of choices and relies on the reliability of the empirical performance quantification. This paper presents classification algorithms, which we call linear probabilistic machines (LPMs), that consider unconstrained classification rules and obtain tight performance guarantees during learning. LPMs use polyhedral uncertainty sets that contain the actual probability distribution with a tunable confidence and are defined by a functional that maps feature-label pairs to real numbers. LPMs achieve a smaller worst-case risk against such uncertainty sets than any other classification rule, and their performance can be efficiently upper and lower bounded without testing.
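The minimax setting described in the abstract can be sketched in symbols as follows. The abstract does not fix any notation, so everything here is an assumption made for illustration: the feature map $\Phi$ (taken to be vector-valued with $m$ components), the bounds $a$ and $b$, the rule $h(y \mid x)$ written as a conditional probability, and the choice of 0-1 loss. The paper's own definitions may differ.

```latex
% Assumed notation (not from the abstract): features x in X, labels y in Y,
% a feature map Phi : X x Y -> R^m, and bound vectors a <= b in R^m.
\begin{align*}
  % Polyhedral uncertainty set: distributions whose expected feature map lies between a and b
  \mathcal{U} &= \bigl\{\, p \in \Delta(\mathcal{X} \times \mathcal{Y}) \;:\;
      a \preceq \mathbb{E}_{p}\,[\Phi(x, y)] \preceq b \,\bigr\} \\[4pt]
  % Expected 0-1 loss of a (possibly randomized) rule h under distribution p
  \ell(h, p) &= \mathbb{E}_{p}\,\bigl[\, 1 - h(y \mid x) \,\bigr] \\[4pt]
  % Minimax rule: smallest worst-case risk over the uncertainty set
  h^{\mathcal{U}} &\in \operatorname*{arg\,min}_{h} \; \max_{p \in \mathcal{U}} \; \ell(h, p) \\[4pt]
  % If the true distribution p^* lies in U, its risk is bracketed without test data
  \min_{p \in \mathcal{U}} \ell(h^{\mathcal{U}}, p)
    \;&\le\; \ell(h^{\mathcal{U}}, p^{*})
    \;\le\; \max_{p \in \mathcal{U}} \ell(h^{\mathcal{U}}, p)
    \qquad \text{whenever } p^{*} \in \mathcal{U}
\end{align*}
```

Under these assumptions the last line follows directly from $p^{*} \in \mathcal{U}$, which is why the confidence with which the uncertainty set contains the true distribution carries over to the risk bounds.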
