Subsampled Rényi Differential Privacy and Analytical Moments Accountant


Jul 31, 2018

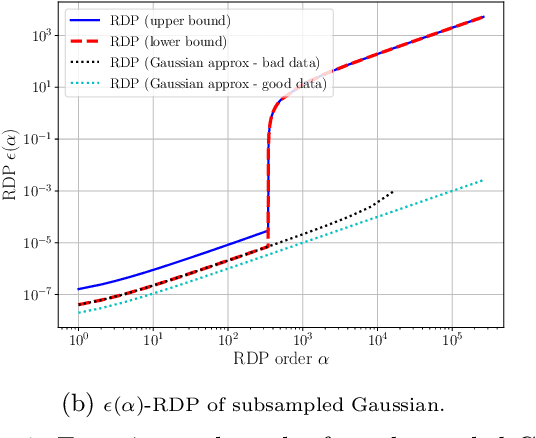

We study subsampling in differential privacy (DP), a question central to many successful differentially private machine learning algorithms. Specifically, we provide a tight upper bound on the Rényi Differential Privacy (RDP) (Mironov, 2017) parameters of algorithms that (1) subsample the dataset and then (2) apply a randomized mechanism $M$ to the subsample, expressed in terms of the RDP parameters of $M$ and the subsampling probability. This result generalizes the classic subsampling-based "privacy amplification" property of $(\epsilon,\delta)$-differential privacy, which applies to only one fixed pair $(\epsilon,\delta)$, to a stronger version that exploits properties of each specific randomized algorithm and satisfies an entire family of $(\epsilon(\delta),\delta)$-differential privacy guarantees for all $\delta\in [0,1]$. Our experiments confirm the advantage of our techniques over tracking $(\epsilon,\delta)$ directly, especially when many rounds of data access must be composed.
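For orientation, the two baseline ingredients the abstract contrasts can be sketched in a few lines of Python. This is an illustrative sketch, not the paper's subsampled-RDP bound, which the abstract does not state and which is therefore not reproduced here. It assumes the standard amplification formula for an $(\epsilon,\delta)$-DP mechanism run on a subsample with sampling probability `gamma`, the Gaussian mechanism with sensitivity 1 as an example of an RDP curve, and the RDP-to-$(\epsilon,\delta)$ conversion from Mironov (2017). All function names and the grid of orders `alphas` are hypothetical choices made for this example.

```python
import math


def amplified_eps_delta(eps, delta, gamma):
    """Classic amplification for one fixed (eps, delta) pair.

    Assumes the mechanism is (eps, delta)-DP and is run on a subsample
    drawn with sampling probability gamma; returns the amplified pair.
    """
    return math.log(1.0 + gamma * (math.exp(eps) - 1.0)), gamma * delta


def gaussian_rdp(alpha, sigma):
    """RDP curve of the Gaussian mechanism (sensitivity 1, no subsampling)."""
    return alpha / (2.0 * sigma ** 2)


def rdp_to_eps(rdp_fn, delta, k=1, alphas=None):
    """Convert k-fold composition of an RDP curve to an (eps, delta) guarantee.

    RDP composes additively, so k rounds cost k * rdp_fn(alpha) at order alpha.
    The conversion eps = rdp + log(1/delta) / (alpha - 1) is minimized over a
    grid of orders (the grid is an arbitrary choice for this sketch).
    """
    if alphas is None:
        alphas = [1.0 + x / 10.0 for x in range(1, 1000)]
    return min(
        k * rdp_fn(a) + math.log(1.0 / delta) / (a - 1.0) for a in alphas
    )


# Example: one fixed pair amplified by a 1% sampling rate.
eps_amp, delta_amp = amplified_eps_delta(1.0, 1e-5, 0.01)

# Example: an entire eps(delta) family from a single RDP curve.
eps_family = {d: rdp_to_eps(lambda a: gaussian_rdp(a, 1.0), d)
              for d in (1e-3, 1e-5, 1e-7)}
```

The first function yields a guarantee for a single $(\epsilon,\delta)$ pair, whereas the RDP route yields a value of $\epsilon$ for every $\delta$ from one curve, and composition reduces to adding RDP values before converting.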
