Submatrix localization via message passing

Paper and Code

Oct 30, 2015

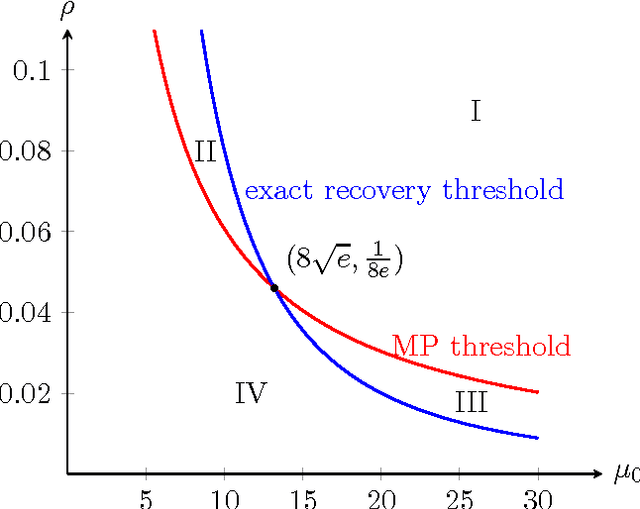

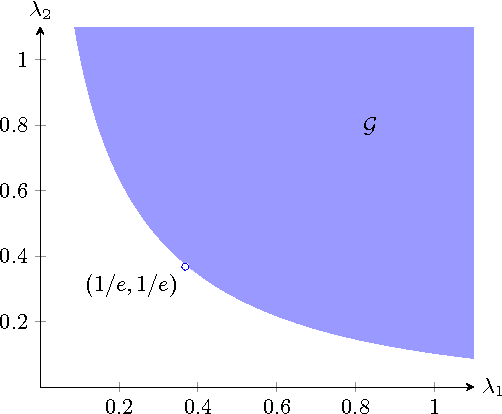

The principal submatrix localization problem deals with recovering a $K\times K$ principal submatrix of elevated mean $\mu$ in a large $n\times n$ symmetric matrix subject to additive standard Gaussian noise. This problem serves as a prototypical example for community detection, in which the community corresponds to the support of the submatrix. The main result of this paper is that in the regime $\Omega(\sqrt{n}) \leq K \leq o(n)$, the support of the submatrix can be weakly recovered (with $o(K)$ misclassification errors on average) by an optimized message passing algorithm if $\lambda = \mu^2K^2/n$, the signal-to-noise ratio, exceeds $1/e$. This extends a result by Deshpande and Montanari previously obtained for $K=\Theta(\sqrt{n}).$ In addition, the algorithm can be extended to provide exact recovery whenever information-theoretically possible and achieve the information limit of exact recovery as long as $K \geq \frac{n}{\log n} (\frac{1}{8e} + o(1))$. The total running time of the algorithm is $O(n^2\log n)$. Another version of the submatrix localization problem, known as noisy biclustering, aims to recover a $K_1\times K_2$ submatrix of elevated mean $\mu$ in a large $n_1\times n_2$ Gaussian matrix. The optimized message passing algorithm and its analysis are adapted to the bicluster problem assuming $\Omega(\sqrt{n_i}) \leq K_i \leq o(n_i)$ and $K_1\asymp K_2.$ A sharp information-theoretic condition for the weak recovery of both clusters is also identified.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge