SUB-Depth: Self-distillation and Uncertainty Boosting Self-supervised Monocular Depth Estimation

Paper and Code

Nov 19, 2021

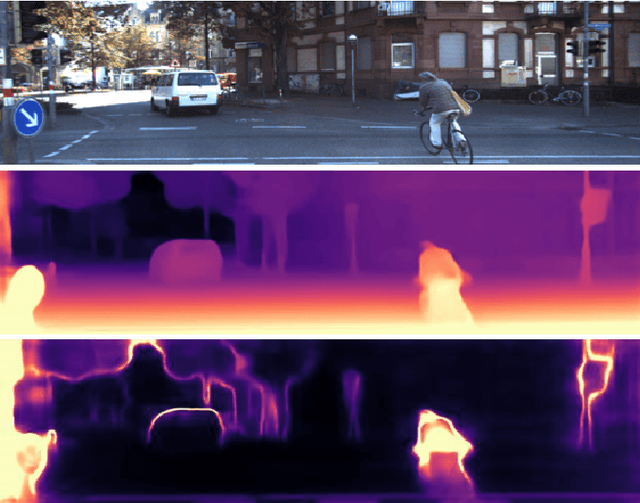

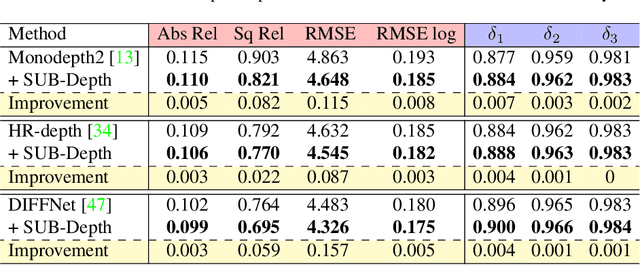

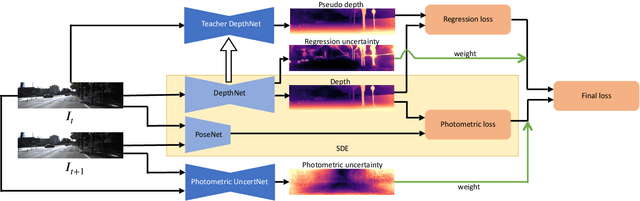

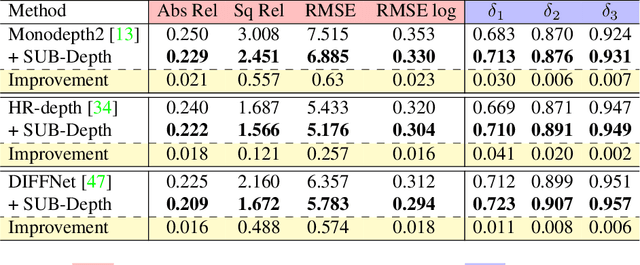

We propose SUB-Depth, a universal multi-task training framework for self-supervised monocular depth estimation (SDE). Depth models trained with SUB-Depth outperform the same models trained in a standard single-task SDE framework. By introducing an additional self-distillation task into a standard SDE training framework, SUB-Depth trains a depth network, not only to predict the depth map for an image reconstruction task, but also to distill knowledge from a trained teacher network with unlabelled data. To take advantage of this multi-task setting, we propose homoscedastic uncertainty formulations for each task to penalize areas likely to be affected by teacher network noise, or violate SDE assumptions. We present extensive evaluations on KITTI to demonstrate the improvements achieved by training a range of existing networks using the proposed framework, and we achieve state-of-the-art performance on this task. Additionally, SUB-Depth enables models to estimate uncertainty on depth output.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge