Strategyproof Peer Selection using Randomization, Partitioning, and Apportionment


Sep 29, 2017

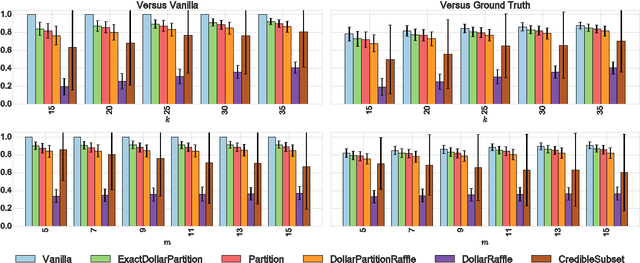

Peer review, evaluation, and selection are fundamental aspects of modern science. Funding bodies the world over employ experts to review the proposals submitted for funding and select the best of them. The problem of peer selection, however, is much more general: a professional society may want to give a subset of its members awards based on the opinions of all members; an instructor for a MOOC or online course may want to crowdsource grading; or a marketing company may select ideas from group brainstorming sessions based on peer evaluation. We make three fundamental contributions to the study of procedures or mechanisms for peer selection, a specific type of group decision-making problem studied in computer science, economics, and political science. First, we propose a novel mechanism that is strategyproof, i.e., agents cannot benefit by reporting insincere valuations. Second, we demonstrate the effectiveness of our mechanism by a comprehensive simulation-based comparison with a suite of mechanisms found in the literature. Finally, our mechanism employs a randomized rounding technique that is of independent interest, as it solves the apportionment problem that arises in various settings where discrete resources such as parliamentary representation slots need to be divided proportionally.
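To make the apportionment problem concrete, here is a minimal Python sketch of one generic randomized rounding scheme (systematic rounding with a single uniform draw). It is an illustration only and is not claimed to be the technique used in the paper; the function name `randomized_apportion` and its parameters are hypothetical. Each party's exact quota is `seats * weight / total`; the sketch gives every party either the floor or the ceiling of its quota, always hands out exactly `seats` seats, and gives each party its quota in expectation.

```python
import math
import random
from fractions import Fraction


def randomized_apportion(weights, seats, rng=random):
    """Randomly round proportional quotas to integers summing to `seats`.

    Illustrative systematic-rounding sketch (an assumption of this example,
    not the paper's algorithm). Exact rational arithmetic avoids
    floating-point drift in the cumulative sums.
    """
    total = sum(Fraction(w) for w in weights)
    quotas = [Fraction(w) * seats / total for w in weights]
    alloc = [math.floor(q) for q in quotas]        # guaranteed lower quota
    fracs = [q - a for q, a in zip(quotas, alloc)]  # leftover fractional parts

    # One uniform offset u in [0, 1). Lay the fractional parts end to end on
    # a line; party i gets an extra seat if a point u, u+1, u+2, ... falls
    # inside its segment [lo, hi).
    u = Fraction(rng.random())
    lo = Fraction(0)
    for i, f in enumerate(fracs):
        hi = lo + f
        alloc[i] += math.ceil(hi - u) - math.ceil(lo - u)  # 0 or 1
        lo = hi
    return alloc


if __name__ == "__main__":
    # Quotas are 1.5, 1 and 0.5, so the result is [2, 1, 0] or [1, 1, 1].
    print(randomized_apportion([3, 2, 1], seats=3, rng=random.Random(7)))
```

Because each segment is shorter than 1, no party can receive more than one extra seat, and because the segments together have integer length, the number of extra seats is the same for every value of the offset.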
