Strategizing Equitable Transit Evacuations: A Data-Driven Reinforcement Learning Approach

Paper and Code

Dec 08, 2024

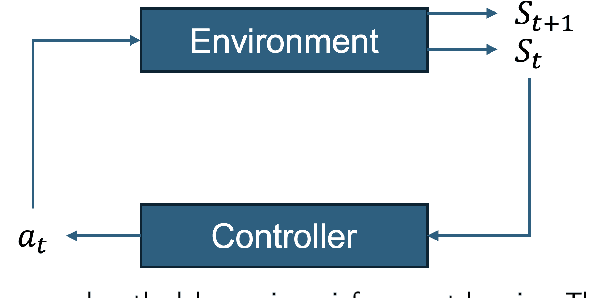

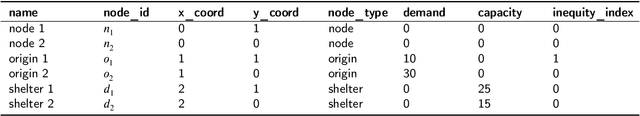

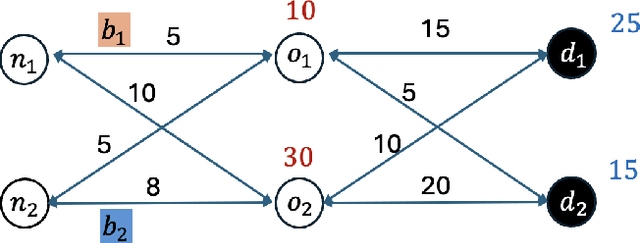

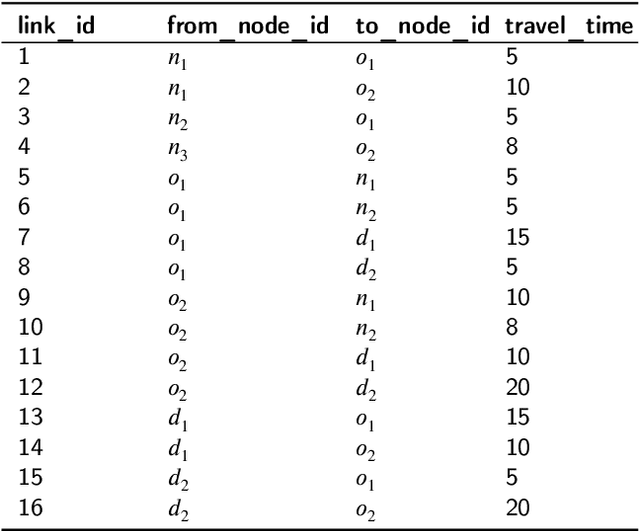

As natural disasters become more frequent, efficient and equitable evacuation planning becomes more critical. This paper proposes a data-driven, reinforcement learning-based framework that optimizes bus-based evacuations for both efficiency and equity. We model the evacuation problem as a Markov Decision Process solved by reinforcement learning, using real-time transit data in the General Transit Feed Specification (GTFS) format and transportation networks extracted from OpenStreetMap. The reinforcement learning agent dynamically reroutes buses from their scheduled locations to minimize passengers' total evacuation time while prioritizing equity-priority communities. Simulations on the San Francisco Bay Area transportation network indicate that the proposed framework achieves significant improvements in both evacuation efficiency and equitable service distribution compared to traditional rule-based and random strategies. These results highlight the potential of reinforcement learning to enhance system performance and urban resilience during emergency evacuations, offering a scalable solution for real-world applications in intelligent transportation systems.
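To make the Markov Decision Process formulation concrete, the following is a minimal sketch and not the authors' implementation. It assumes a single bus shuttling between one shelter and three hypothetical stops, made-up round-trip times and equity weights, and plain tabular Q-learning in place of whatever learning algorithm, GTFS feeds, and OpenStreetMap network the paper actually uses. The state is the number of bus-loads still waiting at each stop, the action is the stop served next, and the reward is the negative equity-weighted waiting time accrued during the trip.

```python
import random
from collections import defaultdict

# Hypothetical toy data (not from the paper).
ROUND_TRIP_MIN = {"A": 10, "B": 20, "C": 30}    # shelter -> stop -> shelter, minutes
WAITING = {"A": 2, "B": 1, "C": 2}              # bus-loads of evacuees per stop
EQUITY_WEIGHT = {"A": 1.0, "B": 1.0, "C": 2.0}  # C stands in for an equity-priority community

STOPS = sorted(WAITING)
START = tuple(WAITING[s] for s in STOPS)        # state: bus-loads remaining per stop


def valid_actions(state):
    """Stops that still have evacuees waiting."""
    return [s for s, n in zip(STOPS, state) if n > 0]


def step(state, stop):
    """Serve one bus-load at `stop`; return (next_state, reward)."""
    # Everyone not yet at the shelter waits for the whole round trip,
    # and equity-priority stops count more heavily.
    weighted_waiting = sum(EQUITY_WEIGHT[s] * n for s, n in zip(STOPS, state))
    reward = -ROUND_TRIP_MIN[stop] * weighted_waiting
    next_state = tuple(n - 1 if s == stop else n for s, n in zip(STOPS, state))
    return next_state, reward


def train(episodes=5000, alpha=0.5, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration (undiscounted, episodic)."""
    rng = random.Random(seed)
    q = defaultdict(float)
    for _ in range(episodes):
        state = START
        while any(state):
            actions = valid_actions(state)
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                action = max(actions, key=lambda a: q[(state, a)])
            next_state, reward = step(state, action)
            future = max(
                (q[(next_state, a)] for a in valid_actions(next_state)),
                default=0.0,  # terminal state: nobody left to evacuate
            )
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = next_state
    return q


def greedy_plan(q):
    """Roll out the learned greedy policy; return the stop order and its total cost."""
    state, plan, cost = START, [], 0.0
    while any(state):
        action = max(valid_actions(state), key=lambda a: q[(state, a)])
        state, reward = step(state, action)
        plan.append(action)
        cost -= reward
    return plan, cost


if __name__ == "__main__":
    plan, cost = greedy_plan(train())
    print("dispatch order:", plan, "weighted waiting cost:", cost)
```

In this toy setting the equity weight changes the dispatch order: stop C has the longest round trip, yet its doubled weight moves it ahead of the closer stop B. A realistic version would need multiple buses, capacity limits, stochastic travel times, and a state space far too large for a lookup table.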
