Stochastic sparse adversarial attacks


Nov 24, 2020

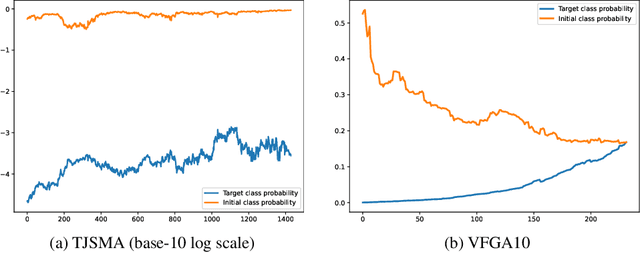

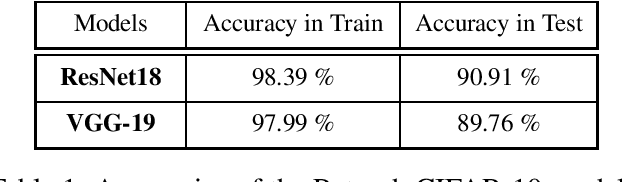

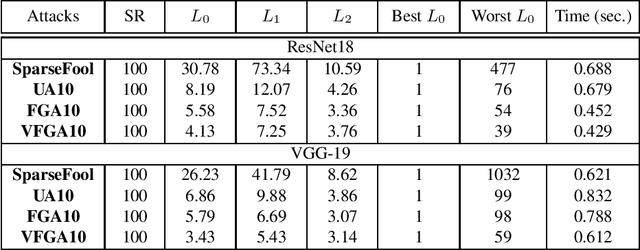

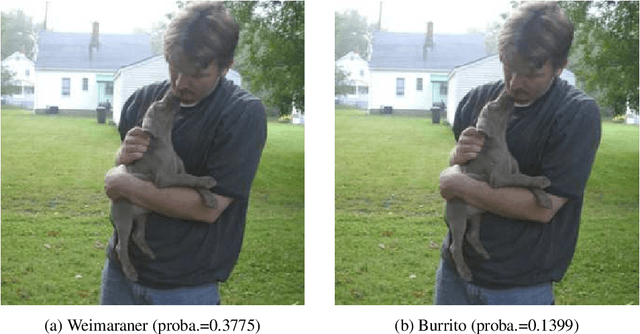

Adversarial attacks on neural network classifiers (NNC) and the use of random noise in these methods have stimulated a large body of work in recent years. However, despite these investigations, existing approaches that rely on random noise to fool NNC have fallen far short of the performance of state-of-the-art adversarial methods. In this paper, we fill this gap by introducing stochastic sparse adversarial attacks (SSAA), which are simple, fast, and purely noise-based targeted and untargeted attacks on NNC. SSAA offer new examples of sparse (or $L_0$) attacks, for which only a few methods have been proposed previously. These attacks are devised by exploiting a small-time expansion idea widely used for Markov processes. Experiments on small and large datasets (CIFAR-10 and ImageNet) illustrate several advantages of SSAA in comparison with state-of-the-art methods. For instance, in the untargeted case, our method, called the voting folded Gaussian attack (VFGA), scales efficiently to ImageNet and achieves a significantly lower $L_0$ score than SparseFool (up to $\frac{1}{14}$ lower) while being faster. In the targeted setting, VFGA achieves appealing results on ImageNet and is significantly faster than the Carlini-Wagner $L_0$ attack.
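To make the $L_0$ score mentioned above concrete, here is a minimal sketch, assuming the score is the number of input components that differ between the original and the adversarial example. The function name `l0_distance`, the `tol` parameter, and the toy data are hypothetical and chosen for illustration; this is not the paper's code or its evaluation protocol.

```python
def l0_distance(original, adversarial, tol=0.0):
    """Count components whose values differ by more than tol.

    Hypothetical helper: both inputs are flat sequences of floats
    (for example a flattened image).
    """
    if len(original) != len(adversarial):
        raise ValueError("inputs must have the same length")
    return sum(1 for a, b in zip(original, adversarial) if abs(a - b) > tol)


# Toy flattened "image" with 8 components in [0, 1] (made-up values).
x = [0.1, 0.5, 0.9, 0.3, 0.0, 1.0, 0.7, 0.2]

# A sparse perturbation changes only a few components.
x_adv = list(x)
x_adv[2] = 0.0
x_adv[5] = 0.4

score = l0_distance(x, x_adv)
print(score)
```

A sparse attack aims to keep this count small, whatever the magnitude of each individual change.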
