Stochastic Graph Recurrent Neural Network

Paper and Code

Sep 01, 2020

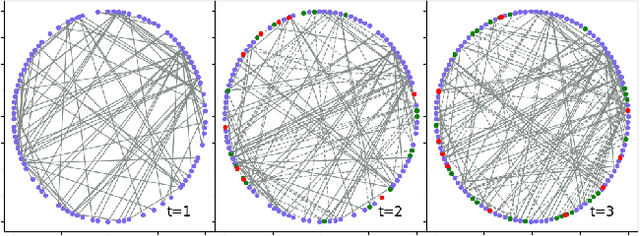

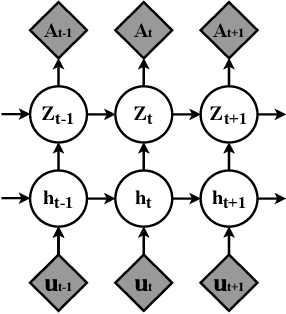

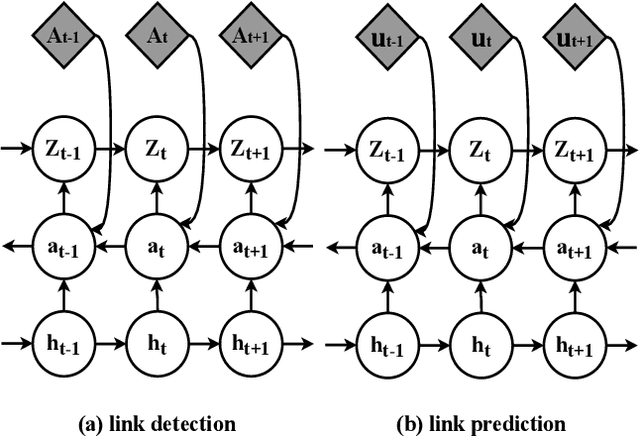

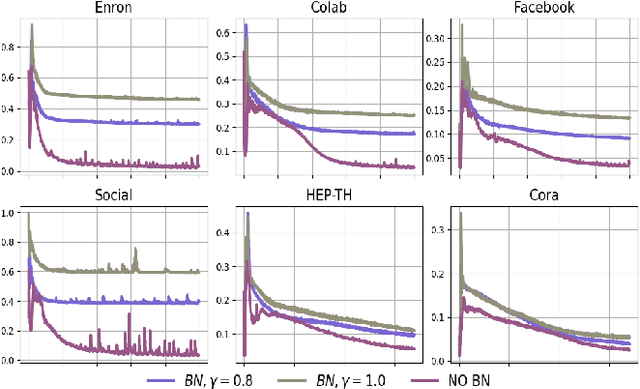

Representation learning on graph-structured data has been widely studied because of its broad range of applications. However, previous methods mainly focus on static graphs, whereas many real-world graphs evolve over time. Modeling this evolution is important for predicting properties of unseen networks. To address this challenge, we propose SGRNN, a novel neural architecture that uses stochastic latent variables to simultaneously capture the evolution of node attributes and topology. Specifically, deterministic states are separated from stochastic states in the iterative process to suppress mutual interference. With semi-implicit variational inference integrated into SGRNN, a non-Gaussian variational distribution is proposed to further improve performance. In addition, to alleviate the KL-vanishing problem in SGRNN, a simple and interpretable structure is proposed based on the lower bound of the KL divergence. Extensive experiments on real-world datasets demonstrate the effectiveness of the proposed model. Code is available at https://github.com/StochasticGRNN/SGRNN.
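To make the idea of keeping deterministic and stochastic states apart more concrete, here is a minimal NumPy sketch of one recurrent step over a sequence of graph snapshots. It is an illustration under stated assumptions, not the SGRNN implementation: the function names (`normalize_adj`, `init_params`, `recurrent_step`, `edge_probs`), the weight names, the tanh update, the graph-convolution normalization, and the inner-product edge decoder are all hypothetical choices. The latent here is a plain Gaussian, so the semi-implicit (non-Gaussian) posterior and the KL-vanishing remedy described in the abstract are not shown.

```python
import numpy as np

def normalize_adj(adj):
    """Symmetrically normalize A + I (a common graph-convolution choice; an assumption here)."""
    a_hat = adj + np.eye(adj.shape[0])          # self-loops keep every degree >= 1
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def init_params(n_feat, n_hidden, n_latent, rng):
    """Hypothetical weights for the sketch; the names are not taken from the paper."""
    scale = 0.1
    return {
        "W_h": scale * rng.standard_normal((n_feat + n_hidden + n_latent, n_hidden)),
        "W_mu": scale * rng.standard_normal((n_hidden, n_latent)),
        "W_sigma": scale * rng.standard_normal((n_hidden, n_latent)),
    }

def recurrent_step(adj, x, h_prev, z_prev, params, rng):
    """One time step: update the deterministic state h, then sample the stochastic state z."""
    a_norm = normalize_adj(adj)
    # Deterministic path: aggregate attributes and previous states over the current topology.
    inp = np.concatenate([x, h_prev, z_prev], axis=1)
    h = np.tanh(a_norm @ inp @ params["W_h"])
    # Stochastic path: per-node Gaussian latent, sampled with the reparameterization trick.
    mu = a_norm @ h @ params["W_mu"]
    log_sigma = a_norm @ h @ params["W_sigma"]
    z = mu + np.exp(log_sigma) * rng.standard_normal(mu.shape)
    return h, z

def edge_probs(z):
    """Inner-product decoder: probability of an edge between each pair of nodes."""
    return 1.0 / (1.0 + np.exp(-(z @ z.T)))
```

A short usage example on random data, carrying `h` and `z` separately from one snapshot to the next:

```python
rng = np.random.default_rng(0)
n_nodes, n_feat, n_hidden, n_latent = 5, 3, 8, 4
params = init_params(n_feat, n_hidden, n_latent, rng)
h = np.zeros((n_nodes, n_hidden))
z = np.zeros((n_nodes, n_latent))
for _ in range(3):  # three graph snapshots
    upper = np.triu(rng.integers(0, 2, size=(n_nodes, n_nodes)), k=1)
    adj = (upper + upper.T).astype(float)       # undirected, no self-loops
    x = rng.standard_normal((n_nodes, n_feat))  # node attributes at this step
    h, z = recurrent_step(adj, x, h, z, params, rng)
probs = edge_probs(z)  # predicted topology from the latest latent state
```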
